Physician-GPT: Using Retrieval-Augmented Generation to Assist Physicians

Introduction

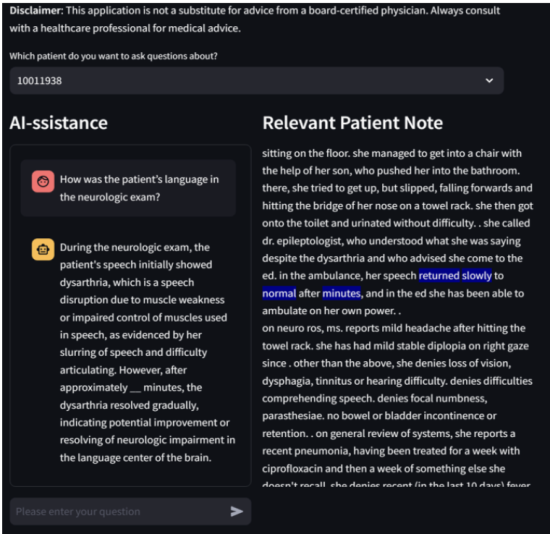

Physicians are saddled with a task that shouldn’t be theirs: reading, processing, and writing patient documentation. This task consumes 60-66% of a physician's time. While large language models (LLMs) trained on medical data are a promising start toward reducing this bottleneck, they suffer from hallucination, the tendency of an LLM to invent information when it does not know the answer to a question. In the context of healthcare and diagnoses, this could be fatal. Our solution uses Retrieval-Augmented Generation (RAG) to boost the capabilities of current LLMs, reducing hallucination while producing highly accurate answers to the questions a physician would commonly ask. Our mission is to treat patients with care, faster.

Data Sources

Because we used pre-trained LLMs for both indexing/retrieval and generation, no data was needed to train a model. Instead, we used data to test our system. Our testing is built on the MIMIC-IV dataset, a freely accessible electronic health record dataset that does not contain PII: identifiable information such as names and addresses has been removed. MIMIC-IV contains notes written by physicians, with key sections of each note including, but not limited to, prior health history, family history, symptoms, and discharge instructions.

Data Science Approach

We use Retrieval-Augmented Generation (RAG) to boost the performance of the LLM and lower rates of hallucination, greatly improving its feasibility for use in the medical industry. This RAG-LLM approach consists of the following:

- Indexer: create embeddings of each applicable note and index them accordingly.

- Retriever: given a system prompt and a question/prompt from a doctor, retrieve the most relevant indexed chunk. Relevance is measured by the cosine similarity between the question embedding and each chunk embedding.

- Generator: given the retrieved note chunk and the two prompts, generate a response to the question/prompt.
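The indexing and retrieval steps can be illustrated with a minimal sketch. It is not the project's implementation: the `embed` function below is a toy bag-of-words stand-in for the pre-trained embedding model, and the names `build_index` and `retrieve` are hypothetical. Only the use of cosine similarity to pick the most relevant chunk comes from the approach described above.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for a pre-trained embedding model (assumption):
    represents text as a sparse vector of lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty vector is treated as similar to nothing
    return dot / (norm_a * norm_b)


def build_index(chunks):
    """Indexer: pair every chunk with its embedding."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index, question):
    """Retriever: return the chunk whose embedding is closest to the question."""
    query = embed(question)
    best_chunk, _ = max(index, key=lambda item: cosine_similarity(query, item[1]))
    return best_chunk


# Invented example chunks, not taken from MIMIC-IV.
example_chunks = [
    "Family history: father had diabetes",
    "Discharge instructions: rest and drink fluids",
    "Symptoms: cough and fever",
]
example_index = build_index(example_chunks)
best = retrieve(example_index, "What is the family history?")
```

In a full pipeline, the retrieved chunk would then be passed to the generator together with the system prompt and the physician's question.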

We perform extensive hyperparameter tuning, including selecting the best-performing LLM for indexing/retrieval and for generation, system prompt engineering, question transformation, and chunking strategy (i.e., chunking by section vs. chunking by size, choosing the size and overlap, etc.). In addition, we develop an evaluation framework based loosely on prior RAG evaluation metrics, and create a test dataset using MIMIC-IV as a basis.
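To make the size-based chunking option concrete, here is a minimal sketch of fixed-size chunking with overlap. It assumes sizes are measured in characters, which the write-up does not specify (they may well be tokens), and the function name `chunk_by_size` is hypothetical. The defaults mirror the chunk size of 1024 and overlap of 100 reported under Key Learnings and Impacts.

```python
def chunk_by_size(text, chunk_size=1024, chunk_overlap=100):
    """Split text into windows of chunk_size characters, where each window
    repeats the last chunk_overlap characters of the previous one."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


# Synthetic 2000-character note used only to exercise the function.
sample_note = "".join(chr(97 + i % 26) for i in range(2000))
sample_chunks = chunk_by_size(sample_note)
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, provided it is shorter than the overlap. Chunking by section would instead split on the note's section headings.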

Key Learnings and Impacts

- By optimizing the LLM for indexing/retrieval, we were able to boost the average performance of the retriever by 59%.

- By optimizing the chunking strategy (chunk size 1024, chunk overlap 100), we were able to further boost the performance of the retriever by 31%.

- The generator was highly accurate on yes/no questions.

- The generator performed well for vague questions.

- The generator was able to cite the exact line from which it retrieved the answer.

Acknowledgements

Thanks to our capstone instructors, Fred Nugen and Korin Reid, for their tireless assistance and helpful feedback, which helped us scope our project and deliver our message.