CleanCAst

Problem & Motivation

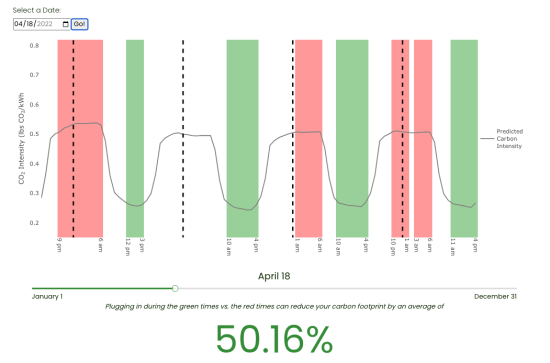

Long ago, when we mostly burned fossil fuels night and day to power our electricity grid, the carbon intensity of electricity, or the amount of CO2 emitted to produce it, did not change much throughout the day. Now that renewables supply a significant and growing share of the grid, the carbon intensity of our electricity fluctuates more than ever with the availability of solar and wind power. Most people would choose to use electricity when it is cleanest if they could, and the opportunity is magnified in California, where forward-thinking policies are driving the adoption of electric vehicles and accelerating the transition from natural gas to electricity for heating and cooking. Here, if consumers could shift their electricity consumption from the highest carbon intensity times to the lowest, they could cut their carbon footprint in half!

Demonstrating the feasibility of an accurate carbon forecast is the first step toward living in a cleaner world that maximizes the impact of California’s pro-climate policies. With CleanCAst, we show that, using modern data science tools, we can create a 96-hour carbon intensity forecast that meets or exceeds the state of the art in published papers.

Data Source & Data Science Approach

Data Sources

We built CleanCAst using a data pipeline that aggregates data from the following sources:

- The U.S. Energy Information Administration (EIA) supplied actual historical hourly generation data by source (natural gas, solar, nuclear, etc.) for the entire California grid, and total CO2 emissions for each source.

- The National Center for Atmospheric Research (NCAR) Research Data Archive supplied historical hourly weather forecasts for each latitude and longitude at increments of 0.25° via the NCEP GFS 0.25 Degree Global Forecast Grids Historical Archive ds084.1, which we matched to the centroids of the CEC resource areas described below.

- The California Energy Commission (CEC) supplied maps of Solar Resource Areas and Wind Resource Areas, as well as the generating capacity of those areas. We calculated the geographic centroid of each solar region from the shapefiles and incorporated capacity-weighted weather and solar radiation forecasts into the pipeline.

- The Sunrise Sunset API supplied exact sunrise and sunset times in UTC for each day and each solar resource area, based on the Earth's orientation relative to the sun.
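The centroid matching and capacity weighting described above can be sketched in a few lines. This is a minimal illustration, assuming each resource area is represented by a single centroid snapped to the nearest 0.25° GFS grid point, and that area forecasts are combined by each area's share of total capacity; the `ResourceArea` class, the function names, and all sample values are hypothetical, not taken from the CleanCAst pipeline.

```python
from dataclasses import dataclass

GRID_STEP = 0.25  # GFS 0.25-degree grid spacing


def snap_to_grid(lat: float, lon: float, step: float = GRID_STEP) -> tuple:
    """Return the grid point nearest to (lat, lon)."""
    return (round(lat / step) * step, round(lon / step) * step)


@dataclass
class ResourceArea:  # hypothetical container for one CEC resource area
    name: str
    centroid_lat: float
    centroid_lon: float
    capacity_mw: float


def capacity_weighted_value(areas, grid_forecast):
    """Average a gridded forecast over resource areas, weighted by capacity.

    grid_forecast maps (lat, lon) grid points to one forecast value,
    e.g. solar radiation for a single hour.
    """
    total_capacity = sum(a.capacity_mw for a in areas)
    weighted = 0.0
    for a in areas:
        point = snap_to_grid(a.centroid_lat, a.centroid_lon)
        weighted += grid_forecast[point] * a.capacity_mw / total_capacity
    return weighted


# Hypothetical areas and forecast values, for illustration only.
areas = [
    ResourceArea("area_a", 35.3, -118.4, 300.0),
    ResourceArea("area_b", 34.9, -116.1, 100.0),
]
grid = {(35.25, -118.5): 800.0, (35.0, -116.0): 600.0}
print(capacity_weighted_value(areas, grid))  # 750.0
```

Snapping to the nearest grid point is the simplest matching rule; interpolating between the four surrounding grid points would be a reasonable alternative.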

Model Architecture

Our forecasting model is centered around the Darts implementation of LightGBM, a gradient boosting framework that uses tree-based learning algorithms, which Darts adapts for time series forecasting. Its key advantages include fast training, high efficiency, low memory usage, strong accuracy, and the capacity to handle large-scale data.
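A tree model like LightGBM learns from tabular rows, so applying it to a time series means turning lagged values into features. Darts' regression models, including `LightGBMModel`, expose this through parameters such as `lags`, `lags_past_covariates`, and `lags_future_covariates`. The helper below is a hypothetical pure-Python sketch of the idea, not Darts' internal code, and it covers only one-step-ahead prediction with toy data.

```python
def make_lagged_rows(target, past_cov, future_cov, n_lags):
    """Turn aligned hourly series into (features, label) rows for a tree model.

    For each hour t, features are the previous n_lags target values, the
    previous n_lags past-covariate values, and the future covariate at t.
    The label is the target at t (one-step-ahead prediction).
    """
    rows, labels = [], []
    for t in range(n_lags, len(target)):
        features = (
            list(target[t - n_lags:t])      # lagged carbon intensity
            + list(past_cov[t - n_lags:t])  # lagged observations, e.g. solar generation
            + [future_cov[t]]               # known in advance, e.g. hour of day
        )
        rows.append(features)
        labels.append(target[t])
    return rows, labels


# Hypothetical toy data: intensity (lbs/kWh), solar generation (MWh), hour of day.
target = [0.50, 0.48, 0.45, 0.40, 0.42]
solar = [0, 10, 30, 50, 40]
hour = [0, 1, 2, 3, 4]
rows, labels = make_lagged_rows(target, solar, hour, n_lags=2)
# rows[0] == [0.5, 0.48, 0, 10, 2]; labels == [0.45, 0.4, 0.42]
```

Note that past covariates only ever contribute values from before hour t, while the future covariate contributes its value at hour t itself; that asymmetry is exactly the past/future covariate distinction described below.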

Model Inputs

Target Series

- Carbon Intensity: The EIA dataset includes hourly historical carbon intensity in pounds of CO2 per kilowatt-hour (lbs/kWh), so we use these historical values as the model's target series.
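To make the unit concrete: under the simplifying assumption that hourly carbon intensity is the total CO2 emitted across all sources divided by the total electricity generated in that hour (ignoring imports), the arithmetic looks like this. The function name, dictionary layout, and numbers are illustrative assumptions, not EIA figures or EIA's exact methodology.

```python
def carbon_intensity_lbs_per_kwh(emissions_lbs, generation_mwh):
    """Hourly grid carbon intensity: total CO2 (lbs) / total generation (kWh)."""
    total_lbs = sum(emissions_lbs.values())
    total_kwh = sum(generation_mwh.values()) * 1_000  # 1 MWh = 1,000 kWh
    return total_lbs / total_kwh


# Hypothetical single hour; numbers are illustrative, not EIA data.
generation = {"natural_gas": 10_000, "solar": 12_000, "wind": 3_000}  # MWh
emissions = {"natural_gas": 8_000_000, "solar": 0, "wind": 0}         # lbs CO2
print(carbon_intensity_lbs_per_kwh(emissions, generation))  # 0.32
```

Because zero-emission sources add to the denominator but not the numerator, a sunny midday hour lowers intensity even if natural gas output stays flat.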

Past & Future Covariates

- Past covariates are variables measured in the past that influence the target series we are modeling, for example, the historical net generation of solar, wind, and natural gas, or the historical demand for electricity.

- Future covariates are variables whose values are known or forecast ahead of the prediction time. Temporal attributes such as the hour of the day, and forecasts such as solar generation forecasts, are examples of future covariates. To improve our model, we used the LightGBM modeling framework to create net generation forecasts for the different energy sources before predicting carbon intensity.

- Temporal attributes, like the year, month, day of the week, and week of the year, can be used as both past and future covariates, because their values are known in advance for any timestamp.
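Because calendar attributes can be computed for any timestamp, they are available for every hour of a 96-hour horizon before the forecast is made. A minimal sketch using only the standard library; the function names and the choice of ISO week numbering are assumptions for illustration.

```python
from datetime import datetime, timedelta


def temporal_features(ts: datetime) -> dict:
    """Calendar attributes that are known in advance for any timestamp."""
    _, iso_week, _ = ts.isocalendar()
    return {
        "year": ts.year,
        "month": ts.month,
        "day_of_week": ts.weekday(),  # Monday = 0 ... Sunday = 6
        "week_of_year": iso_week,     # ISO 8601 week number
        "hour": ts.hour,
    }


def future_calendar(start: datetime, hours: int = 96) -> list:
    """Temporal covariates for every hour of a forecast horizon."""
    return [temporal_features(start + timedelta(hours=h)) for h in range(hours)]


horizon = future_calendar(datetime(2022, 1, 1), hours=96)
print(horizon[0])
```

One quirk worth knowing: ISO week numbering places January 1, 2022 in week 52 of 2021, so `year` and `week_of_year` can disagree around New Year.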

Data Split

We trained our LightGBM model on the carbon intensity target series using EIA data from July 1, 2018 to December 31, 2020. We used EIA data from January 1, 2021 to December 31, 2021 to validate the model with an expanding window time series validation framework (see Evaluation below). After feature engineering and hyperparameter tuning, we retrained the model on the data from July 1, 2018 to December 31, 2021 and used the finished model to predict carbon intensity for all of 2022.
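The split is strictly chronological, so no future information reaches training. A minimal sketch of assigning records to the date ranges stated above, assuming records are simple `(date, value)` pairs; the `SPLITS` table and `split_by_date` helper are hypothetical names, and real hourly data would compare timestamps rather than dates.

```python
from datetime import date

# Date ranges described in the text (inclusive).
SPLITS = {
    "train": (date(2018, 7, 1), date(2020, 12, 31)),
    "validation": (date(2021, 1, 1), date(2021, 12, 31)),
    "test": (date(2022, 1, 1), date(2022, 12, 31)),
}


def split_by_date(records):
    """Assign (date, value) records to chronological, non-overlapping splits."""
    out = {name: [] for name in SPLITS}
    for d, value in records:
        for name, (start, end) in SPLITS.items():
            if start <= d <= end:
                out[name].append((d, value))
                break
    return out


# Hypothetical records; the first falls before the training window and is dropped.
records = [(date(2018, 6, 30), 0.55), (date(2019, 5, 1), 0.50),
           (date(2021, 6, 15), 0.45), (date(2022, 3, 1), 0.40)]
parts = split_by_date(records)
# After tuning, the final model is refit on train + validation (July 2018 to December 2021).
refit_data = parts["train"] + parts["validation"]
```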

Model Design

Our EIA dataset had hourly records of energy generation and consumption by source: the amount of wind, solar, natural gas, etc., produced and consumed across the grid. As mentioned above, to make the most accurate forecast, the model used these known values from the past (past covariates) and projections of what those generation figures might be in the future (future covariates). These future covariates are intermediate forecasts that improve accuracy but also complicate the design. To handle this, we created a composite 96-hour forecast that combines a regular 96-hour forecast, which excludes the energy forecasts as future covariates, with a 24-hour forecast, which includes them. This 24 + 96 structure proved effective and let us avoid time-leakage problems while working within the constraints of our model architecture. See Model Construction below for implementation details.

Model Construction

Step 1: 24 Hour Forecasts

- We first generate 24 hours of predictions every 24 hours, including future covariates such as the net generation forecasts for natural gas and solar. Including these forecasts is possible because a 24-hour forecast issued every 24 hours produces exactly one value per timestamp, so the daily forecasts join into a single, non-overlapping series.
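The reason step 1 works can be checked mechanically: consecutive 24-hour forecasts tile the timeline, so they concatenate into one covariate series without collisions. A minimal sketch, assuming each forecast is a list of hourly values with a known start time; `build_covariate_series` and the sample values are hypothetical.

```python
from datetime import datetime, timedelta


def build_covariate_series(issue_times, forecasts):
    """Concatenate forecasts into a single timestamp -> value series.

    Raises ValueError if two forecasts cover the same timestamp, because a
    single covariate series can hold only one value per timestamp.
    """
    series = {}
    for start, values in zip(issue_times, forecasts):
        for h, value in enumerate(values):
            ts = start + timedelta(hours=h)
            if ts in series:
                raise ValueError(f"more than one forecast for {ts}")
            series[ts] = value
    return dict(sorted(series.items()))


# Two hypothetical 24-hour natural gas net generation forecasts (MWh), issued 24 hours apart.
issues = [datetime(2022, 1, 1), datetime(2022, 1, 2)]
daily = [[9_000.0] * 24, [9_500.0] * 24]
gas_forecast = build_covariate_series(issues, daily)
print(len(gas_forecast))  # 48 hourly values, no collisions
```

Passing two 96-hour forecasts issued 24 hours apart to the same function raises `ValueError`, which is the problem step 2 runs into.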

Step 2: 96 Hour Forecasts

- For the 96-hour forecasts, we cannot include the net generation forecasts as future covariates. This is because 96-hour forecasts issued every 24 hours overlap, producing up to four duplicate forecasts for each timestamp, so there is no single net generation forecast to use for that timestamp.
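Counting how many forecasts cover each hour makes the overlap explicit. A minimal sketch, assuming forecasts are issued at fixed 24-hour intervals and hours are measured as offsets from the first issue time; `forecasts_per_hour` is a hypothetical helper.

```python
from collections import Counter


def forecasts_per_hour(n_issues, horizon, stride=24):
    """Count how many forecasts cover each hour when a `horizon`-hour
    forecast is issued every `stride` hours (hours are offsets from the first issue)."""
    counts = Counter()
    for issue in range(n_issues):
        start = issue * stride
        for h in range(horizon):
            counts[start + h] += 1
    return counts


week_96 = forecasts_per_hour(n_issues=7, horizon=96)
week_24 = forecasts_per_hour(n_issues=7, horizon=24)
print(max(week_96.values()), max(week_24.values()))  # 4 1
```

In general the maximum overlap is `horizon / stride`, which is 96 / 24 = 4 here and 24 / 24 = 1 for the step 1 forecasts.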

Step 3: 24 + 96 Hour Forecasts

- To create the best set of model predictions, we use the 24-hour forecasts, which include the net generation forecasts as future covariates, to overwrite the first 24 hours of predictions from the 96-hour forecasts.
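The overwrite in step 3 amounts to splicing two aligned forecasts. A minimal sketch, assuming both forecasts start at the same timestamp and are hourly; `composite_forecast` and the values are hypothetical.

```python
SHORT_HORIZON = 24
LONG_HORIZON = 96


def composite_forecast(forecast_96, forecast_24):
    """Overwrite the first 24 hours of a 96-hour forecast with the 24-hour forecast.

    Both forecasts are assumed to start at the same timestamp and be hourly.
    """
    if len(forecast_96) != LONG_HORIZON or len(forecast_24) != SHORT_HORIZON:
        raise ValueError("expected a 96-hour and a 24-hour forecast")
    return list(forecast_24) + list(forecast_96[SHORT_HORIZON:])


# Hypothetical values in lbs/kWh.
long_run = [0.45] * LONG_HORIZON    # 96-hour model, no net generation covariates
short_run = [0.40] * SHORT_HORIZON  # 24-hour model, with net generation covariates
combined = composite_forecast(long_run, short_run)
```

The length check guards against silently misaligned inputs; a production version would also verify that both forecasts share the same start timestamp.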


Evaluation

To examine the performance of the model during training and testing, we used an expanding window cross-validation strategy, retraining every month, to generate carbon intensity forecasts. We use the average mean absolute percentage error (MAPE) across forecasts to measure the accuracy of our model.
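An expanding window keeps the training start fixed and moves the cutoff forward, so each fold trains on everything before the month it evaluates. A minimal sketch of monthly fold boundaries for the 2021 validation year, assuming the training data starts July 1, 2018 as stated in Data Split; the helper names are hypothetical. Within each evaluation month, the model would issue one 96-hour forecast every 24 hours.

```python
from datetime import date


def next_month(d):
    """First day of the month after d."""
    if d.month == 12:
        return date(d.year + 1, 1, 1)
    return date(d.year, d.month + 1, 1)


def expanding_window_folds(train_start, eval_start, eval_end):
    """Monthly folds: each fold trains on all data from train_start up to the
    cutoff (exclusive) and evaluates on the month that follows."""
    folds = []
    cutoff = date(eval_start.year, eval_start.month, 1)
    while cutoff <= eval_end:
        folds.append({
            "train": (train_start, cutoff),            # [start, cutoff)
            "evaluate": (cutoff, next_month(cutoff)),  # [cutoff, next cutoff)
        })
        cutoff = next_month(cutoff)
    return folds


folds = expanding_window_folds(date(2018, 7, 1), date(2021, 1, 1), date(2021, 12, 31))
print(len(folds))  # 12
```

Darts also offers built-in backtesting through its `historical_forecasts` method (with arguments such as `forecast_horizon`, `stride`, and `retrain`); how CleanCAst configured its own backtest is not specified here.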

In more practical terms, MAPE measures the average gap between the dotted line of the forecasted carbon intensity and the solid line of the actual carbon intensity, expressed as a percentage of the actual value. Since our 2022 test set spans 365 days and we issue one 96-hour forecast per day, we have 365 MAPE scores (depicted in the Model Construction image above as MAPE1, MAPE2, MAPE3, ..., MAPEn), which we average to create our performance metric, average MAPE.
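In formula terms, each forecast's MAPE is (100 / n) times the sum of |actual − forecast| / |actual| over its n hours, and average MAPE is the mean of those per-forecast scores. A minimal sketch with hypothetical, shortened forecasts; the function names are assumptions, and the code assumes no actual value is zero.

```python
from statistics import mean


def mape(actual, forecast):
    """Mean absolute percentage error of one forecast, in percent.

    Assumes no actual value is zero.
    """
    if len(actual) != len(forecast):
        raise ValueError("actual and forecast must have the same length")
    return 100 * mean(abs((a - f) / a) for a, f in zip(actual, forecast))


def average_mape(forecasts):
    """Average of per-forecast MAPE scores (MAPE1, MAPE2, ..., MAPEn).

    forecasts: list of (actual, predicted) pairs, one pair per daily forecast.
    """
    return mean(mape(actual, predicted) for actual, predicted in forecasts)


# Two hypothetical forecasts, shortened to a few hours each.
scores = [([0.50, 0.40], [0.45, 0.44]), ([1.00], [1.20])]
print(round(average_mape(scores), 2))  # 15.0
```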

Key Learnings & Impact

Our 96-hour forecasts predicted the carbon intensity of the California electric grid with an average MAPE of 7.97%, an improvement on leading academic studies on this topic. In practice, this means our forecasts were, on average, within about 8% of the actual carbon intensity.

Comparing our forecasts with one another, shorter horizons were more accurate, but accuracy degraded only modestly at longer horizons. The median MAPE was 7.2%, meaning that half of our forecasts had a MAPE of 7.2% or less; a few poor forecasts pull the average MAPE higher. Over the first 24 hours of each forecast, predictions were within about 6% of the actual carbon intensity.
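Both observations above, a mean pulled above the median by a few bad days and accuracy that varies across the horizon, can be computed from the same per-forecast errors. A minimal sketch; the daily MAPE list and the toy forecast are hypothetical values chosen only to show the effect, not CleanCAst results, and `mape_by_block` is an assumed helper name.

```python
from statistics import mean, median


def mape_by_block(actual, forecast, block=24):
    """MAPE (percent) for each consecutive `block`-hour slice of one forecast,
    e.g. hours 1-24, 25-48, ... of a 96-hour forecast."""
    scores = []
    for start in range(0, len(actual), block):
        a = actual[start:start + block]
        f = forecast[start:start + block]
        scores.append(100 * mean(abs((x - y) / x) for x, y in zip(a, f)))
    return scores


# Hypothetical daily MAPE scores (percent): most days are good, two are poor.
daily_mape = [6.1, 6.8, 7.0, 7.2, 7.4, 7.9, 15.0, 18.5]
print(median(daily_mape), mean(daily_mape))  # the mean sits well above the median

# Hypothetical 48-hour forecast whose error grows in the second day.
by_day = mape_by_block([1.0] * 48, [1.05] * 24 + [1.10] * 24)
```

The median is robust to the two outlier days, which is why reporting it alongside the mean helps separate typical performance from occasional failures.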

The model performed well when natural gas, solar, and wind generation were similar from day to day, or rose or fell at a steady rate with regular fluctuations. Dramatic changes, especially increases or decreases in wind or natural gas generation, contributed to some of the worst-performing forecasts.

For our time series enthusiasts: additional error analysis of the residuals, using time series techniques such as the ACF, PACF, and the Ljung-Box test, reveals that the errors are not white noise. However, the predicted values are close enough to the actual values to be practically useful, and the CleanCAst results are competitive with other publicly available carbon intensity forecast models.
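For readers who want to reproduce this kind of check, the Ljung-Box statistic is Q = n(n + 2) multiplied by the sum over lags k = 1..h of r_k² / (n − k), where r_k is the lag-k sample autocorrelation of the residuals; under the white-noise hypothesis, Q is approximately chi-square with h degrees of freedom. A minimal pure-Python sketch on hypothetical residuals; in practice, statsmodels provides `acorr_ljungbox` (in `statsmodels.stats.diagnostic`) along with ACF and PACF plotting helpers.

```python
def acf(x, nlags):
    """Sample autocorrelations r_0..r_nlags (r_0 is always 1)."""
    n = len(x)
    m = sum(x) / n
    denom = sum((v - m) ** 2 for v in x)
    return [
        sum((x[t] - m) * (x[t - k] - m) for t in range(k, n)) / denom
        for k in range(nlags + 1)
    ]


def ljung_box_q(residuals, nlags):
    """Ljung-Box statistic Q = n(n+2) * sum_{k=1..h} r_k^2 / (n-k)."""
    n = len(residuals)
    r = acf(residuals, nlags)
    return n * (n + 2) * sum(r[k] ** 2 / (n - k) for k in range(1, nlags + 1))


# Under the white-noise null, Q is approximately chi-square with nlags degrees
# of freedom; the 95th percentile for 10 degrees of freedom is about 18.31.
CHI2_95_DF10 = 18.307

# Hypothetical residuals with an obvious repeating pattern (not white noise).
pattern = [1.0, 1.0, 1.0, 1.0, -1.0, -1.0, -1.0, -1.0] * 10
q = ljung_box_q(pattern, nlags=10)
print(q > CHI2_95_DF10)  # True: reject the white-noise hypothesis
```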

Acknowledgements

We'd like to thank our instructors, Cornelia Ilin and Alberto Todeschini, for their guidance throughout this project.