Eye Robot

Problem and Motivation

Individuals with visual impairments encounter numerous daily challenges that significantly affect their quality of life. Limited vision reduces awareness of one's surroundings and often forces lifestyle adaptations, such as memorizing paths and the placement of objects at home. The five main challenges faced by visually impaired individuals are mobility, employment, education, accessibility, and autonomy, each discussed below:

- Mobility: The National Institutes of Health reports that approximately 3% of all U.S. adults have a physical disability due to vision impairment, which restricts their ability to perform tasks independently and enjoy a higher quality of life.

- Employment: Only 44% of Americans with visual impairments are employed, leaving the remaining 56% without jobs and the economic opportunity that comes with them.

- Education: According to the Centers for Disease Control and Prevention (CDC), about 6.8% of children under 18 years have a diagnosed eye or vision condition, which can prevent them from receiving the same educational opportunities and experiences as their peers.

- Accessibility: The CDC also notes that individuals with vision loss are more likely to suffer from other health issues such as strokes, falls, cognitive decline, and even premature death.

- Autonomy: The National Center for Health Research states that 1 out of every 4 blind adults in the U.S. faces the challenge of living independently.

To empower individuals with visual impairments, we have developed "Eye Robot," a mobile application designed to facilitate navigation in indoor environments. Eye Robot combines real-time object detection with LiDAR depth sensing to measure the distance to detected objects. What sets the application apart is its use of audio cues, which give users an intuitive, user-friendly way to orient themselves and navigate through indoor settings.

This approach is designed not only to enhance the autonomy of visually impaired individuals but also to fit into their daily routines, enabling them to move more confidently and independently. By applying advanced technology in a practical, accessible way, Eye Robot aims to significantly improve the quality of life of people with visual impairments and give them a new level of freedom in their personal and professional lives.

Model

To optimize object detection in our mobile app "Eye Robot," we integrated YOLOv8, a state-of-the-art convolutional neural network (CNN) object detection model developed by Ultralytics. The pre-trained model recognizes 80 classes of everyday objects (the COCO classes) in both indoor and outdoor settings. To tailor YOLOv8 to our application's indoor use cases, we used transfer learning: we froze a number of the pre-trained layers and trained the rest of the network on a new dataset of hand-picked indoor classes.

We evaluated the model using the mean average precision (mAP) score. For each class, average precision (AP) is the area under the precision-recall curve obtained by ranking detections by confidence and counting a detection as correct when it overlaps a labeled object of that class closely enough, as measured by intersection over union (IoU). mAP is the mean of AP across classes, so it rewards a model that finds all labeled objects while producing few false detections. We trained on an AWS EC2 instance and tuned hyperparameters, including learning rate, optimizer, number of frozen layers, and batch size, on 10% of the dataset.
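To make the mAP metric described above concrete, the following is a minimal sketch of how AP and mAP are commonly computed for object detection. It is written from the general definition, not taken from the Ultralytics code, and it assumes boxes in `(x1, y1, x2, y2)` pixel coordinates, a single IoU threshold of 0.5, greedy matching of each prediction (highest confidence first) to the best still-unmatched ground-truth box, and all-point interpolation of the precision-recall curve. Libraries differ in these details; for example, the mAP50-95 variant averages AP over several IoU thresholds.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, iou_thr=0.5):
    """AP for one class.

    preds: list of (image_id, confidence, box) predictions for this class.
    gts:   dict mapping image_id -> list of ground-truth boxes for this class.
    """
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in gts.items()}

    # Mark each prediction as a true positive (1) or false positive (0),
    # visiting predictions from highest to lowest confidence.
    hits = []
    for img, _conf, box in sorted(preds, key=lambda p: p[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt_box in enumerate(gts.get(img, [])):
            overlap = iou(box, gt_box)
            if not matched[img][j] and overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_thr:
            matched[img][best_j] = True
            hits.append(1)
        else:
            hits.append(0)

    # Precision and recall after each ranked prediction.
    precision, recall, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precision.append(tp / k)
        recall.append(tp / n_gt)

    # All-point interpolation: replace each precision by the maximum
    # precision at any equal or higher recall (a non-increasing envelope).
    for k in range(len(precision) - 2, -1, -1):
        precision[k] = max(precision[k], precision[k + 1])

    # Area under the interpolated curve: sum of recall steps x precision.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(preds_by_class, gts_by_class, iou_thr=0.5):
    """Mean of per-class AP over every class that has ground truth."""
    per_class = {
        cls: average_precision(preds_by_class.get(cls, []), gts, iou_thr)
        for cls, gts in gts_by_class.items()
    }
    return sum(per_class.values()) / len(per_class), per_class
```

For instance, two ground-truth chairs and three chair predictions ranked correct, wrong, correct give AP = 0.5 × 1 + 0.5 × 2/3 ≈ 0.83, while a second class with no correct detections has AP = 0 and pulls the mean down to about 0.42. Because mAP is an unweighted mean over classes, a few poorly detected classes can lower it substantially.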

Through this hyperparameter search, we found that freezing 10 layers, using a batch size of 24, setting a learning rate of 0.01, and using the Adam optimizer yielded the best mAP score. With these hyperparameters, we trained the final model on 100% of the dataset. This development and evaluation process was designed to give "Eye Robot" an efficient and accurate object detection system that improves the experience of visually impaired users in indoor environments.
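The tuning procedure can be sketched as a simple grid search: each configuration is trained on the 10% subset and scored by validation mAP, and the best configuration is kept for the full run. This is an illustrative sketch, not the team's actual script. `train_and_evaluate` is a hypothetical placeholder for a real training run, and the candidate values in `SEARCH_SPACE` are assumptions (they include the winning values reported above). With the Ultralytics package, these settings would map onto the `freeze`, `batch`, `lr0`, and `optimizer` training arguments, with `fraction` selecting the subset of training data.

```python
import itertools
from typing import Callable, Dict, List, Optional, Tuple

# Illustrative (assumed) search space; not the grid actually used.
SEARCH_SPACE: Dict[str, List] = {
    "freeze": [0, 10, 20],
    "batch": [16, 24, 32],
    "lr0": [0.001, 0.01],
    "optimizer": ["SGD", "Adam"],
}


def grid_search(
    train_and_evaluate: Callable[[dict, float], float],
    space: Dict[str, List],
    fraction: float = 0.1,
) -> Tuple[Optional[dict], float]:
    """Return the configuration with the highest validation mAP.

    train_and_evaluate(config, fraction) is a hypothetical callable that
    trains on `fraction` of the training data and returns validation mAP.
    """
    best_cfg, best_map = None, float("-inf")
    keys = list(space)
    # Try every combination of the candidate values.
    for values in itertools.product(*(space[k] for k in keys)):
        cfg = dict(zip(keys, values))
        score = train_and_evaluate(cfg, fraction)
        if score > best_map:
            best_cfg, best_map = cfg, score
    return best_cfg, best_map
```

The returned configuration would then be used for one final training run on the full dataset (`fraction=1.0`). A full grid is the simplest strategy; its cost grows multiplicatively with each added hyperparameter, which is one reason to search on a small subset first.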

Minimum Viable Product (MVP)

Our Minimum Viable Product (MVP) for "Eye Robot" consists of two versions of the mobile app, Eye Robot Lite and Eye Robot Pro, currently deployed on Apple TestFlight and awaiting official release on the Apple App Store. Both versions use our custom YOLOv8 model, fine-tuned from the pre-trained YOLOv8 model on our selected dataset for indoor use cases. The model detects 96 distinct indoor object classes and reports a confidence score for each detection. Both versions run entirely on device, so they collect no data from users.

Eye Robot Lite Version: This version features built-in text-to-speech. The app draws bounding boxes around detected objects, displays their labels and confidence scores, and provides corresponding audio cues to help users identify and locate these objects. This version is available for the Apple iPhone X and all newer devices.

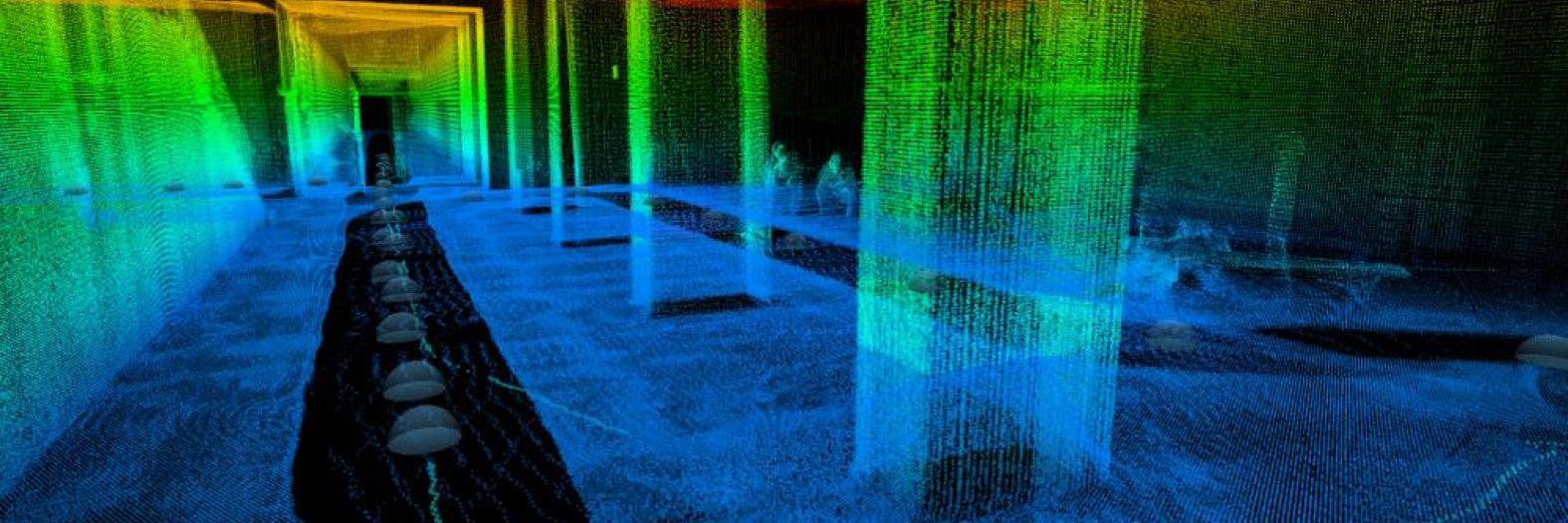
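As an illustration of how a detection might be turned into a spoken cue, the sketch below drops low-confidence detections and describes each remaining object's horizontal position as left, ahead, or right, depending on which third of the frame the box centre falls in. The `Detection` type, the 0.5 confidence threshold, the three-way split, and the phrasing are all hypothetical choices for illustration, not the app's actual logic. It also assumes the phone is held upright and pointed forward, so that left and right in the image match the user's left and right. On iOS, the resulting strings would be handed to a text-to-speech engine such as `AVSpeechSynthesizer`.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float
    box: tuple  # (x1, y1, x2, y2), normalized to [0, 1] by image width/height


def direction(box):
    """Describe where a box sits horizontally: left, middle, or right third."""
    center_x = (box[0] + box[2]) / 2
    if center_x < 1 / 3:
        return "on your left"
    if center_x > 2 / 3:
        return "on your right"
    return "ahead"


def audio_cues(detections, min_confidence=0.5):
    """Phrases to speak, most confident detection first; low-confidence ones are dropped."""
    kept = [d for d in detections if d.confidence >= min_confidence]
    kept.sort(key=lambda d: d.confidence, reverse=True)
    return [f"{d.label} {direction(d.box)}" for d in kept]
```

For example, a chair at confidence 0.9 near the left edge and a door at 0.7 in the middle of the frame would produce `["chair on your left", "door ahead"]`, and a cup at 0.3 would be skipped.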

Eye Robot Pro Version: Building on the Lite version, the Pro version adds LiDAR-based distance measurement, which measures the distance from the phone to the objects within the bounding boxes. In addition to drawing bounding boxes and displaying labels, the Pro version shows the measured distances and provides audio cues for both the identity and the location of each object. This is accomplished through sensor fusion of the iPhone's IMU and LiDAR sensors, together with code optimized to run in real time on the device's CPU, GPU, and Apple Neural Engine (ANE). This version is available for the Apple iPhone 12 Pro/Pro Max and newer Pro models; it is limited to the Pro series because only those models include a LiDAR sensor.
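One common way to assign a single distance to a bounding box is to take the median of the depth values inside it, which is less sensitive than the mean to background pixels near the box edges. The sketch below is an assumption-laden illustration, not the app's sensor-fusion code (it does not use the IMU). It assumes a depth map in metres, represented here as a plain 2D list (on LiDAR-equipped iPhones, ARKit exposes one through `ARFrame.sceneDepth`), that is aligned with the camera image, a box in normalized image coordinates, and invalid readings encoded as 0 or NaN. The function `box_distance` and its `shrink` parameter are hypothetical.

```python
import math
from statistics import median


def box_distance(depth_map, box, shrink=0.5):
    """Median depth (metres) in the central part of a normalized box.

    depth_map: list of rows, each a list of depths in metres (0 or NaN = invalid),
               assumed to be aligned with the camera image.
    box:       (x1, y1, x2, y2) normalized to [0, 1].
    shrink:    fraction of the box width/height kept around its centre, to
               reduce the influence of background pixels near the edges.
    Returns None if the region contains no valid depth values.
    """
    rows, cols = len(depth_map), len(depth_map[0])
    cx, cy = (box[0] + box[2]) / 2, (box[1] + box[3]) / 2
    half_w = (box[2] - box[0]) * shrink / 2
    half_h = (box[3] - box[1]) * shrink / 2
    # Convert the shrunken box to depth-map indices, keeping at least one cell.
    c0 = max(0, int((cx - half_w) * cols))
    c1 = min(cols, max(c0 + 1, math.ceil((cx + half_w) * cols)))
    r0 = max(0, int((cy - half_h) * rows))
    r1 = min(rows, max(r0 + 1, math.ceil((cy + half_h) * rows)))
    values = [
        d
        for row in depth_map[r0:r1]
        for d in row[c0:c1]
        if d > 0 and not math.isnan(d)  # skip missing or invalid readings
    ]
    return median(values) if values else None
```

The returned value could then be rounded and appended to the spoken cue for that object; returning `None` lets the caller fall back to a cue without a distance when the sensor has no valid reading for the box.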

Both apps are designed to improve the real-time, on-device indoor navigation experience for visually impaired users, providing an intuitive and helpful tool for daily use.

Future Work

The current model is moderately effective, and its detection quality varies considerably across the selected classes. The final model achieves an overall mAP of 0.45; per-class AP reaches 0.95 for some classes but falls below 0.1 for others. We attribute this variation mainly to class imbalance in the dataset, compounded by limited resources for further training and optimization of the model.

Our future plans to enhance the model's performance include several key strategies:

- Hyperparameter Tuning: We aim to explore additional hyperparameters and configurations that might yield better performance across all classes.

- Addressing Class Imbalance: To mitigate the issue of class imbalance, we intend to increase the training data for underrepresented classes. This could involve incorporating additional datasets or employing data augmentation techniques to enrich our existing dataset.

- Exploring FastViT: We are also interested in exploring FastViT, a potentially more efficient model architecture. Limited documentation and time prevented us from implementing it in the current version, but it remains a promising avenue for future development.

- Optimizing App Performance: For the Eye Robot Pro version, a significant focus will be optimizing the LiDAR code. Our objective is to use the CPU, GPU, and Apple Neural Engine (ANE) even more efficiently to reduce battery drain, thereby improving the overall user experience.
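One way to act on the class-imbalance item above is to oversample images that contain rare classes. The sketch below uses repeat factor sampling, a technique introduced with the LVIS dataset and swapped in here for illustration; it is not something the project has implemented. A class that appears in a fraction f_c of the training images gets a factor r_c = max(1, sqrt(t / f_c)), and each image is repeated according to the rarest class it contains. It assumes YOLO-format label files (one `class x_center y_center width height` line per object) already loaded into memory; the function names and the threshold t = 0.4 are hypothetical and would need tuning for the 96-class dataset.

```python
import math
from collections import Counter


def classes_in_label_file(text):
    """Set of class ids in one YOLO label file ("class x_c y_c w h" per line)."""
    return {int(line.split()[0]) for line in text.splitlines() if line.strip()}


def repeat_factors(label_files, threshold=0.4):
    """Per-image repeat factors in the style of repeat factor sampling.

    label_files: dict mapping image name -> contents of its YOLO label file.
    threshold:   classes present in fewer than this fraction of images are
                 oversampled (an assumed value; tune it for the dataset).
    """
    classes = {img: classes_in_label_file(txt) for img, txt in label_files.items()}
    n_images = len(classes)
    # Number of images in which each class appears at least once.
    images_with_class = Counter(c for found in classes.values() for c in found)
    # r_c = max(1, sqrt(t / f_c)), where f_c = n / n_images.
    class_factor = {
        c: max(1.0, math.sqrt(threshold * n_images / n))
        for c, n in images_with_class.items()
    }
    # Each image uses the factor of its rarest class; images with no
    # labels (background only) keep a factor of 1.
    return {
        img: max((class_factor[c] for c in found), default=1.0)
        for img, found in classes.items()
    }
```

A training sampler would then include each image about `r` times per epoch (for example, `floor(r)` copies plus one more with probability equal to the fractional part), so rare classes are seen more often without discarding any common-class images. This complements, rather than replaces, collecting more data or augmenting the rare classes.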

By implementing these improvements, we aim to not only enhance the model's accuracy and reliability but also to extend the app's usability and efficiency. These developments will contribute significantly to the app's goal of aiding visually impaired individuals in navigating indoor environments more effectively.

Acknowledgements

Our team is grateful to our Capstone Professors Cornelia Ilin and Zona Kostic. We could not have achieved what we did without their support, knowledge, and encouragement throughout this journey. We are also grateful to Professor Rachel Brown for providing guidance on training our model.

We would also like to thank all of our classmates throughout MIDS and all the resources that helped us make this project viable.