LLM4LLM: Longer-Lasting Memory for LLMs

The Problem

This project addresses long-term memory retention in Large Language Models (LLMs). Because of inherent token limits, LLMs struggle to maintain long-term context: older information is truncated and details are lost. Over long interactions they also suffer from information overload, hallucinate distant events, and take longer to process extended queries. These limitations make LLMs unreliable in applications that depend on consistent long-term interaction, so addressing them is essential both to the user experience and to the range of fields where LLMs can be applied in practice.

Solution

Our solution augments the conversation by storing key memory points in a structured database and retrieving them on demand. A function-calling API connects the LLM to an SQL database, so critical information is retained and stays accessible throughout an extended interaction. This improves the model's recall and accuracy while keeping the interaction seamless for the user. We showcase the approach with a Dungeons & Dragons-style storytelling assistant that manages player inventory and memory recall. The same approach could benefit fields such as personal assistance, healthcare, education, customer support, and gaming.

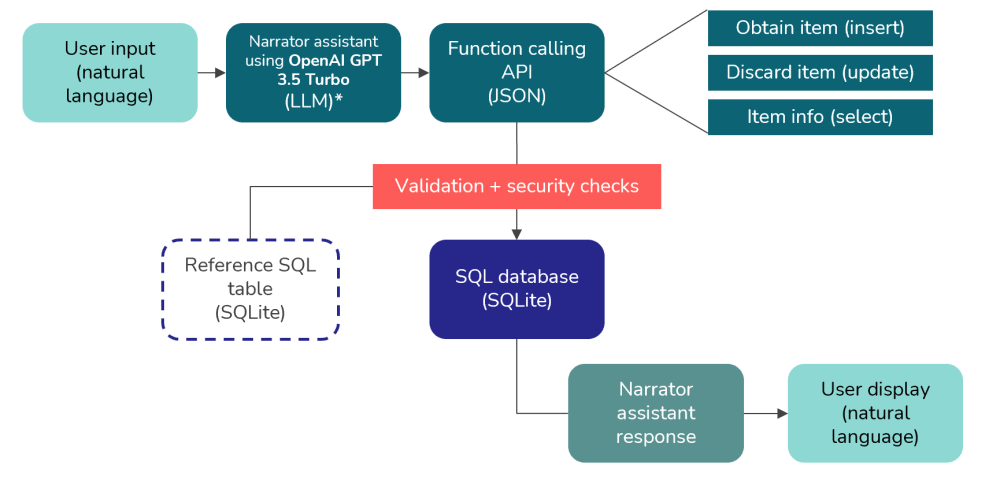

Model Architecture

The architecture integrates a Large Language Model (LLM) with a structured SQL database to improve long-term memory retention and retrieval. User input arrives in natural language and is processed by a narrator assistant built on OpenAI's GPT-3.5 Turbo. The assistant interacts with a function-calling API that communicates with the SQL database, where key memory points are stored. The database supports essential operations such as inserting new items, updating discarded items, and selecting item information. Because memory points are tracked in the database and can be looked up at any time, the narrative stays continuous and consistent. Responses from the narrator assistant are validated for accuracy and security before they are returned to the user. The Dungeons & Dragons storytelling assistant demonstrates the architecture by managing the player's inventory and interactions.
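The three database operations described above can be sketched as follows. This is a minimal illustration, not the project's actual code: it assumes SQLite as the SQL database, and the table layout, the function names (`insert_item`, `discard_item`, `select_item`), and the `dispatch` helper are all hypothetical. The call to the LLM itself is omitted; `dispatch` only shows how a tool name and a JSON argument string, as a function-calling API typically returns them, could be routed to the database.

```python
import json
import sqlite3


def create_store() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical inventory table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE inventory ("
        " name TEXT PRIMARY KEY,"
        " description TEXT NOT NULL,"
        " discarded INTEGER NOT NULL DEFAULT 0)"
    )
    return conn


def insert_item(conn: sqlite3.Connection, name: str, description: str = "") -> dict:
    """Insert a newly picked-up item (or re-add a previously discarded one)."""
    conn.execute(
        "INSERT OR REPLACE INTO inventory (name, description, discarded)"
        " VALUES (?, ?, 0)",
        (name, description),
    )
    return {"ok": True}


def discard_item(conn: sqlite3.Connection, name: str) -> dict:
    """Mark a held item as discarded; the row is kept so it can still be recalled."""
    cur = conn.execute(
        "UPDATE inventory SET discarded = 1 WHERE name = ? AND discarded = 0",
        (name,),
    )
    return {"ok": cur.rowcount == 1}


def select_item(conn: sqlite3.Connection, name: str) -> dict:
    """Look up what is known about an item."""
    row = conn.execute(
        "SELECT name, description, discarded FROM inventory WHERE name = ?",
        (name,),
    ).fetchone()
    if row is None:
        return {"found": False}
    return {
        "found": True,
        "name": row[0],
        "description": row[1],
        "discarded": bool(row[2]),
    }


# Hypothetical registry mapping tool names to the functions above.
TOOLS = {
    "insert_item": insert_item,
    "discard_item": discard_item,
    "select_item": select_item,
}


def dispatch(conn: sqlite3.Connection, tool_name: str, arguments_json: str) -> str:
    """Route one function call requested by the model and return a JSON result."""
    if tool_name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {tool_name}"})
    args = json.loads(arguments_json)
    return json.dumps(TOOLS[tool_name](conn, **args))
```

Discarded items are flagged rather than deleted in this sketch so that a later question such as "did the player ever carry a rope?" can still be answered from the database.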

Evaluation

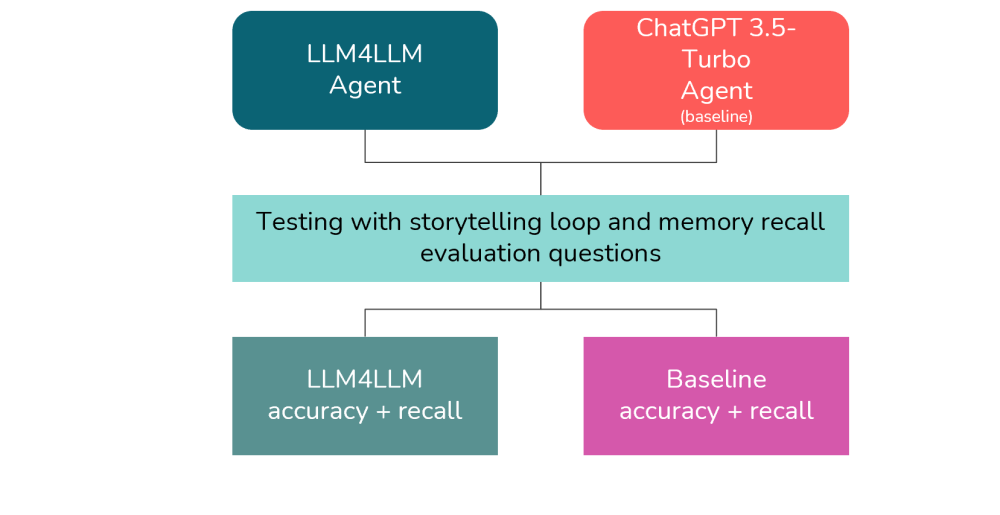

To evaluate our solution, we compared our proposed LLM augmented with an SQL database (LLM4LLM) against a baseline LLM. Both models ran a storytelling loop inspired by Dungeons & Dragons and were tested on their ability to handle long-term memory tasks. The evaluation metrics were runtime per round, accuracy in tracking the number of items in the player's inventory, and accuracy in identifying whether specific items had been picked up or discarded.
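As a rough illustration of how the three metrics above could be computed from per-round records, consider the sketch below. The `RoundResult` fields and the `summarize` function are hypothetical names chosen for this example; the project's actual evaluation harness is not described here, and the runtime unit is whatever the caller records.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RoundResult:
    """One storytelling round, with ground truth and the model's answers (hypothetical layout)."""
    true_count: int        # actual number of items in the inventory
    predicted_count: int   # number of items the model reported
    true_held: bool        # whether the probed item is actually held
    predicted_held: bool   # whether the model said the probed item is held
    runtime: float         # time taken for this round


def summarize(rounds: List[RoundResult]) -> Dict[str, float]:
    """Aggregate count accuracy, picked/discarded accuracy, and mean runtime per round."""
    if not rounds:
        raise ValueError("at least one round is required")
    n = len(rounds)
    return {
        "count_accuracy": sum(r.predicted_count == r.true_count for r in rounds) / n,
        "status_accuracy": sum(r.predicted_held == r.true_held for r in rounds) / n,
        "mean_runtime": sum(r.runtime for r in rounds) / n,
    }
```

Running `summarize` once on the baseline's rounds and once on the LLM4LLM rounds would give the two sets of figures to compare.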

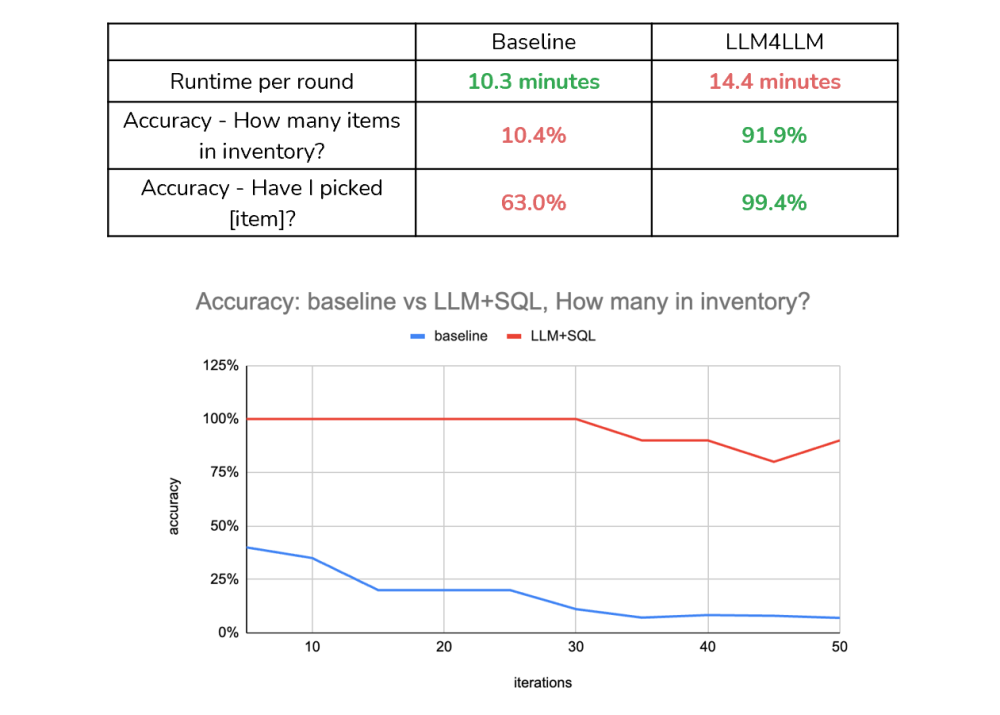

LLM4LLM was substantially more accurate than the baseline. It correctly identified the number of items in the inventory 91.9% of the time, compared to 10.4% for the baseline. When recalling whether an item had been picked up or discarded, LLM4LLM achieved 99.4% accuracy, while the baseline reached only 63%. LLM4LLM's performance also remained stable throughout extended interactions: it still retrieved information accurately from long-term memory after multiple iterations.

LLM4LLM had a higher runtime per round (14.4 minutes versus 10.3 minutes for the baseline, roughly 40% more), but we consider the trade-off justified by the gains in memory accuracy and consistency. The baseline's performance declined rapidly as the storyline progressed, and it failed to retain both short-term and long-term information accurately. In contrast, LLM4LLM's structured data storage kept memory retention reliable, making it a robust option for applications that require long-term interaction and memory recall.

Key Learnings and Future Directions

The main lesson from this project is that integrating structured data storage with Large Language Models (LLMs) markedly improves accuracy and memory retention. Storing key memory points in a database for retrieval maintains long-term context more effectively and reduces both hallucinations and the loss of important details over extended interactions. The project also revealed several challenges: the model remains prone to hallucination, the APIs were unstable, and long-term data management carries high resource costs.

Looking ahead, we plan to develop a robust, full-fledged API to improve stability and expand functionality, which is crucial for real-world applications. We also plan to conduct more comprehensive academic research into the mechanisms of long-term memory in LLMs in order to refine our approach. Finally, we aim to apply the method in more complex and varied scenarios beyond our current application, such as personal assistance, healthcare, and education. By addressing these challenges, we hope to improve the reliability, efficiency, and scope of LLMs and contribute to more dependable long-term conversational agents.