MedBot

The Problem…

Medical residents are overwhelmed by high patient loads, extensive documentation, and the rapid growth of medical literature, which diminishes patient care quality and resident well-being. Our team is introducing Medbot, a state-of-the-art medical tool to tackle this problem.

Solution

Medbot is a tool that streamlines physician workflow and improves patient care. Its mission is to empower medical residents and physicians with tools that reduce their workload and keep them current with the latest medical evidence, ultimately improving both resident well-being and patient outcomes.

Data Pipelines

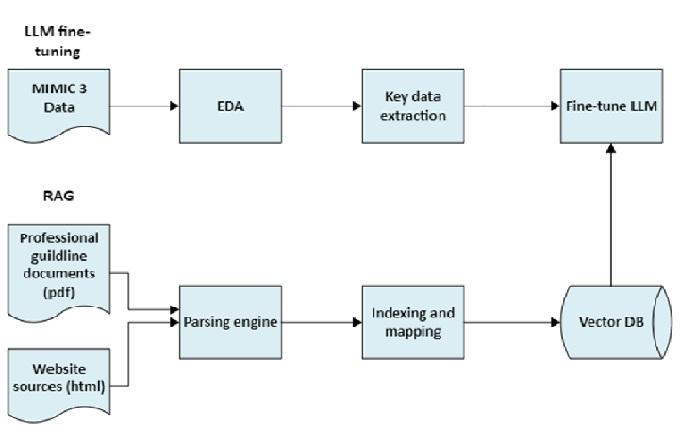

Medbot utilizes two specialized data pipelines:

- Diagnostic Data Pipeline: This pipeline is dedicated to diagnosis. It begins with Exploratory Data Analysis (EDA) on raw data, followed by extracting key values to fine-tune our Large Language Model.

- Treatment Recommendation Pipeline: This pipeline focuses on treatment recommendations. It leverages professional guideline documents and credible website information, performing parsing, indexing, and mapping to create a vector database. This database is then utilized in Retrieval-Augmented Generation.
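The indexing and retrieval steps of the treatment pipeline can be sketched roughly as follows. This is a minimal illustration, not Medbot's implementation: the bag-of-words `embed` function is a toy stand-in for a real embedding model, the in-memory dictionary stands in for a vector database, and the names `build_index`, `retrieve`, and the `GUIDELINES` snippets are hypothetical placeholders invented for the example.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase bag-of-words counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(documents):
    """Index step: map each chunk id to its (vector, original text) pair."""
    return {doc_id: (embed(text), text) for doc_id, text in documents.items()}


def retrieve(index, query, k=2):
    """Return the k chunks most similar to the query, best match first."""
    q = embed(query)
    ranked = sorted(index.items(), key=lambda item: cosine(q, item[1][0]), reverse=True)
    return [(doc_id, text) for doc_id, (_, text) in ranked[:k]]


# Placeholder snippets, invented for illustration only (not clinical guidance).
GUIDELINES = {
    "htn-01": "Hypertension management: lifestyle changes and blood pressure monitoring.",
    "dm-01": "Type 2 diabetes management: monitor glucose and review diet.",
    "asthma-01": "Asthma management: inhaler technique and trigger avoidance.",
}

index = build_index(GUIDELINES)
top = retrieve(index, "patient with high blood pressure", k=1)
print(top[0][0])
```

In a real pipeline the retrieved passages would be placed in the prompt as context for the LLM; here the point is only the parse, index, and look-up shape.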

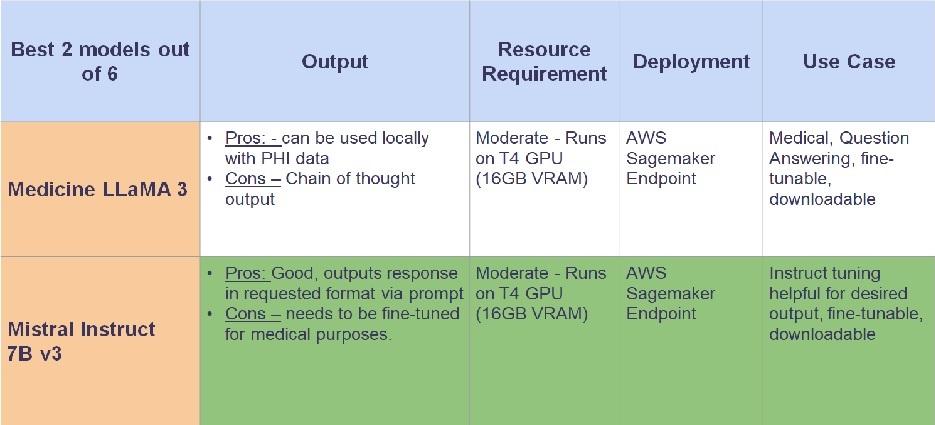

Our Model Selection

After a thorough evaluation of six models (BioGPT, FalconSaaI, BioMistral, Asclepius, Medical LLaMA 3, and Mistral Instruct 7B v3), we chose Mistral Instruct 7B v3 as our preferred model. The table below highlights the top two models from our evaluation.

Evaluation Process

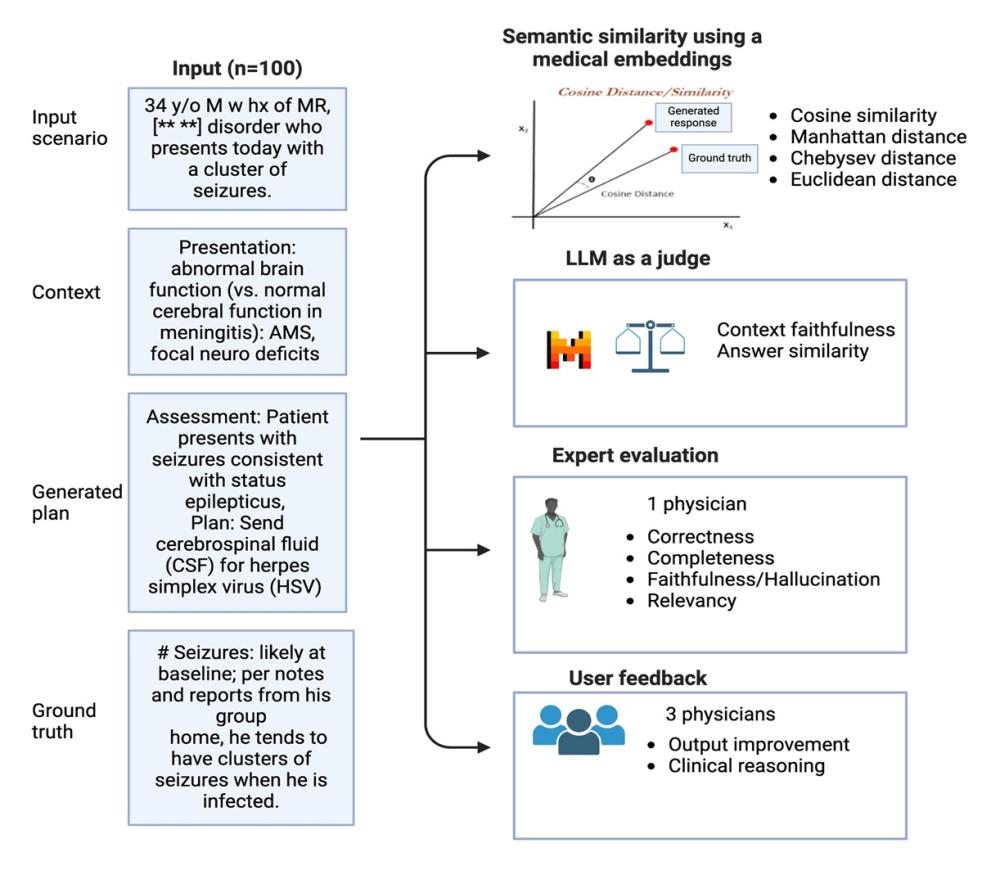

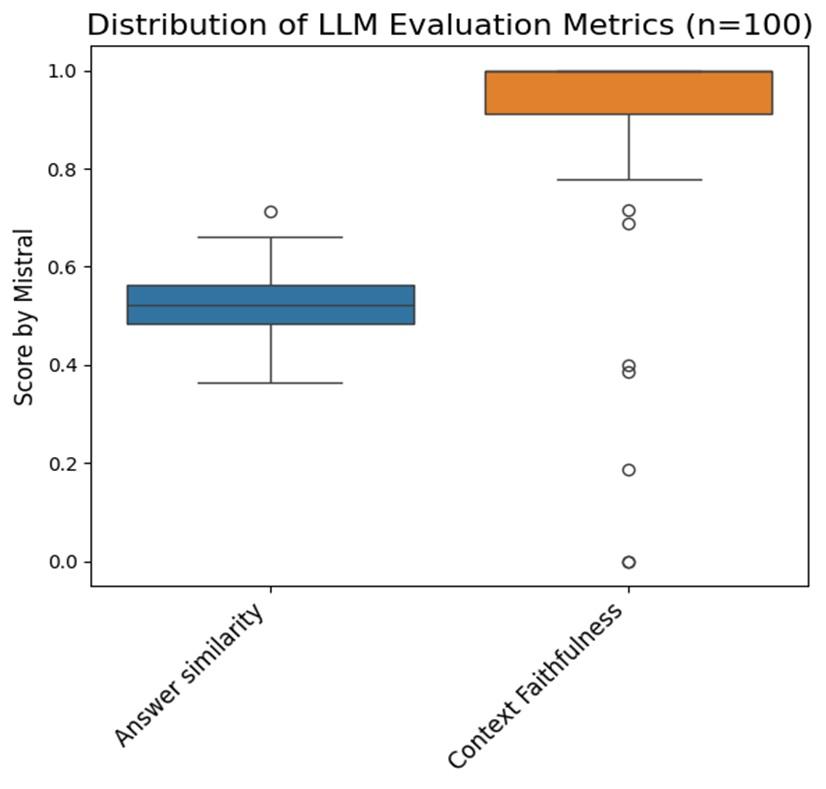

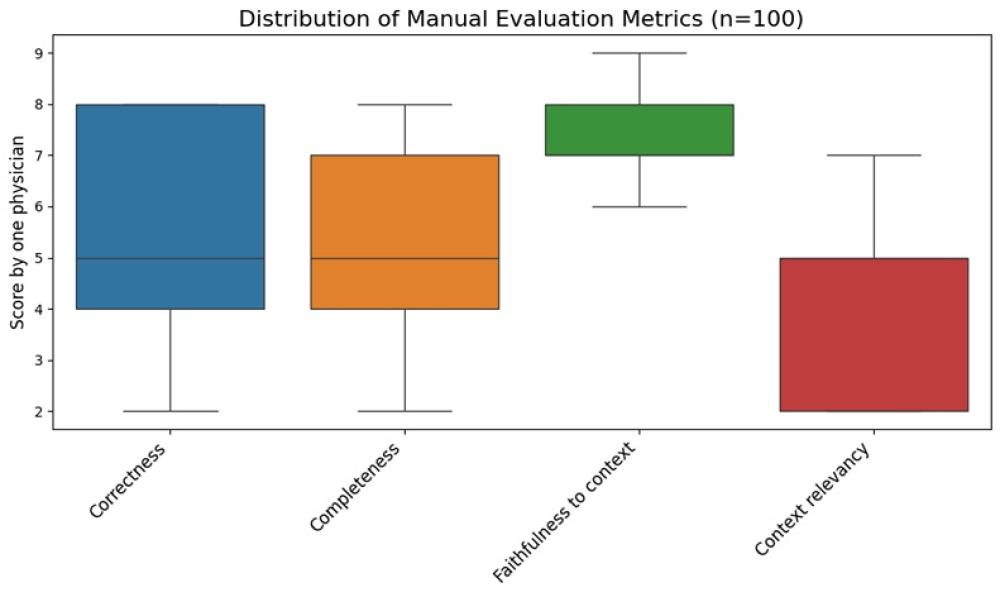

To ensure the accuracy and reliability of our model, we conducted an extensive evaluation using 100 input scenarios with context extracted via RAG (Retrieval-Augmented Generation), as illustrated below. Our evaluation focused on four key metrics:

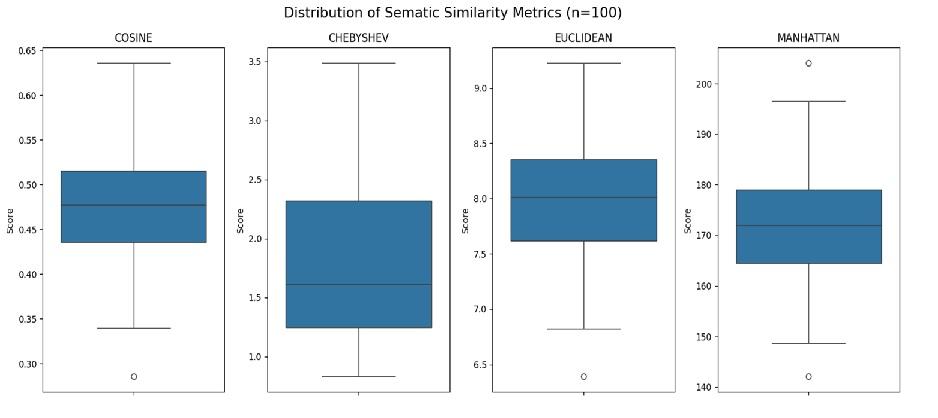

- Semantic Similarity: We measured the similarity between the model's responses and the ground truth from the MIMIC-III dataset using metrics such as cosine, Manhattan, Chebyshev, and Euclidean distances. This helped us assess how closely the model’s answers aligned with expected outcomes.

- Language Model as a Judge: We employed the language model itself to evaluate context faithfulness and answer similarity, adding an automated layer of assessment to enhance the evaluation process.

- Expert Review: Brodie, an expert in the field, meticulously reviewed the responses for correctness, completeness, faithfulness, and relevance to ensure they met medical standards.

- Physician Feedback: We also engaged three physicians to provide insights on how to improve the model’s output and enhance its clinical reasoning capabilities.

This comprehensive evaluation approach allows us to thoroughly assess the performance and reliability of our model and product.
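For the semantic similarity metric, the four measures named above can be computed on a pair of embedding vectors as in the sketch below. It assumes the model response and the ground-truth text have already been converted to equal-length numeric vectors by some embedding model (which one is not specified here); the function names and the example vectors are illustrative only.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between u and v (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def manhattan(u, v):
    """Sum of absolute coordinate differences (L1 distance)."""
    return sum(abs(a - b) for a, b in zip(u, v))


def chebyshev(u, v):
    """Largest absolute coordinate difference (L-infinity distance)."""
    return max(abs(a - b) for a, b in zip(u, v))


def euclidean(u, v):
    """Straight-line (L2) distance."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def compare(u, v):
    """Collect all four measures for one response/ground-truth pair."""
    return {
        "cosine_similarity": cosine_similarity(u, v),
        "manhattan": manhattan(u, v),
        "chebyshev": chebyshev(u, v),
        "euclidean": euclidean(u, v),
    }


# Made-up vectors standing in for two embeddings.
response_vec = [1.0, 2.0, 3.0]
truth_vec = [2.0, 4.0, 6.0]
scores = compare(response_vec, truth_vec)
print(scores)
```

Note that cosine is a similarity (higher is closer) while the other three are distances (lower is closer), so the two vectors above count as identical in direction under cosine yet are clearly separated under the distance measures.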

| Semantic Similarity Result | LLM Evaluation Result - Using RAGAS | Expert Evaluation Result |

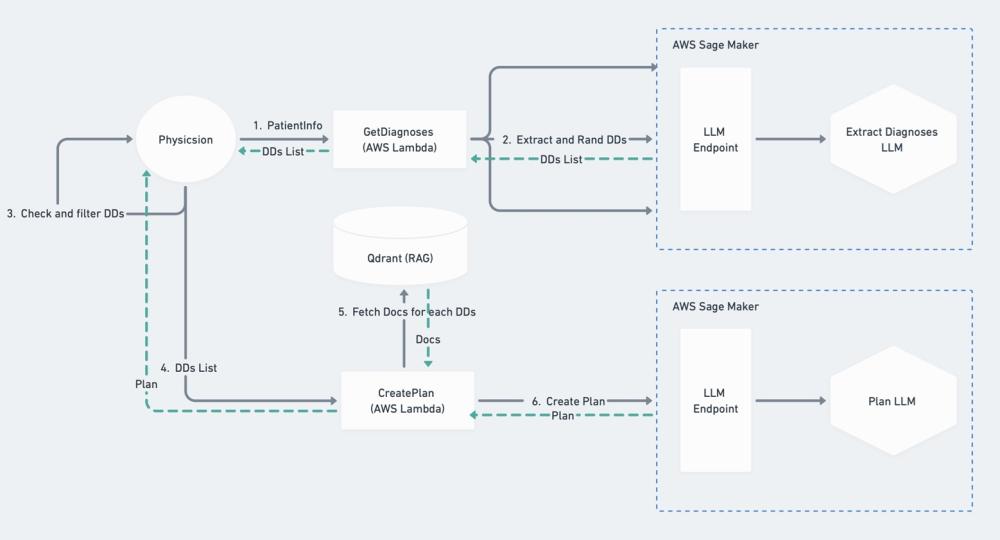

System Architecture Overview

This diagram provides a quick overview of our system's full architecture, highlighting the main components and how they interact. Let's walk through a typical request flow, moving from left to right:

- Physician Input: The process begins with the physician entering information into the user interface (built with Streamlit) and clicking "Submit." This action triggers an internal API call to an AWS Lambda function, "GetDiagnoses," which prepares the request for our LLM (Mistral 7B) and extracts potential diagnoses.

- Diagnoses Return: The extracted diagnoses are then returned to the physician, who can use the interface to filter or edit the list of diagnoses.

- Plan Generation: When the physician clicks "Generate Plan," another request is sent to a different AWS Lambda function. This function calls the RAG (Retrieval-Augmented Generation) system to build the context and then calls the LLM to generate a treatment plan.

In summary:

- Step 1: Physician -> GetDiagnoses -> Mistral 7B, which returns a list of diagnoses.

- Step 2: Physician refines the diagnoses list using the UI.

- Step 3: Physician clicks "Generate Plan," triggering GeneratePlan -> RAG for context collection -> LLM for plan generation.
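The three steps can be sketched as two Lambda-style handlers. This is a hedged outline under several assumptions, not the deployed code: the event is assumed to carry a JSON string in `body`, the handler names, prompt wording, and response fields are hypothetical, and the LLM and retriever are passed in as plain callables so that stubs (`fake_llm`, `fake_retriever`) can stand in for Mistral 7B and the RAG system.

```python
import json


def get_diagnoses_handler(event, context=None, llm=None):
    """Step 1: turn the physician's note into a list of candidate diagnoses."""
    note = json.loads(event["body"])["note"]
    raw = llm(f"List the likely diagnoses, one per line, for this note:\n{note}")
    diagnoses = [line.strip("- ") for line in raw.splitlines() if line.strip()]
    return {"statusCode": 200, "body": json.dumps({"diagnoses": diagnoses})}


def generate_plan_handler(event, context=None, llm=None, retriever=None):
    """Step 3: collect context for each diagnosis, then ask the LLM for a plan."""
    diagnoses = json.loads(event["body"])["diagnoses"]
    passages = [p for d in diagnoses for p in retriever(d)]
    prompt = (
        "Context:\n" + "\n".join(passages)
        + "\n\nWrite a treatment plan for: " + ", ".join(diagnoses)
    )
    return {"statusCode": 200, "body": json.dumps({"plan": llm(prompt)})}


# --- Stubs standing in for the real LLM and RAG system (illustration only) ---
def fake_llm(prompt):
    if prompt.startswith("List the likely diagnoses"):
        return "- diagnosis A\n- diagnosis B\n"
    return "DRAFT: " + prompt.splitlines()[-1]


def fake_retriever(diagnosis):
    return [f"guideline passage about {diagnosis}"]


# Step 1: the UI submits the note.
step1 = get_diagnoses_handler({"body": json.dumps({"note": "example note"})}, llm=fake_llm)
diagnoses = json.loads(step1["body"])["diagnoses"]

# Step 2: the physician edits the list in the UI (here: keep only the first).
kept = diagnoses[:1]

# Step 3: the UI requests a plan for the refined list.
step3 = generate_plan_handler(
    {"body": json.dumps({"diagnoses": kept})}, llm=fake_llm, retriever=fake_retriever
)
plan = json.loads(step3["body"])["plan"]
print(plan)
```

Keeping the physician's edit between the two calls means the plan is generated only for diagnoses the physician has confirmed.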

Trade-offs and Challenges

Handling sensitive patient information requires the utmost care. At Medbot, user privacy is our top priority, and we consider it at every step of development. For instance, this focus on privacy influenced our model selection: we opted for a downloadable model over a hosted one to better protect user data. We also ensure that users can opt out of having their data used for training purposes.

Cost Considerations

Fine-tuning and hosting LLMs come with significant costs, so we strive to balance performance with affordability, ensuring that our solution remains both effective and cost-efficient.

Personalization vs. Privacy

We recognize that our current plans are somewhat generic, and we’re committed to finding innovative ways to make them more personalized. However, this must be done while safeguarding user privacy. One approach we’re exploring is the use of advanced prompting techniques to tailor recommendations without compromising data security.

Our goal is to deliver a more personalized experience for patients while keeping their privacy intact.

Key Takeaways

We began with a simple yet significant challenge that physicians face: spending less time with patients due to the overwhelming amount of documentation, notes, and guidelines they must manage.

Our mission was clear: to create a tool that saves physicians time so they can dedicate more of it to their patients.

The result is Medbot! As demonstrated, Medbot effectively addresses many of the challenges that physicians encounter.