The Moderation Machine

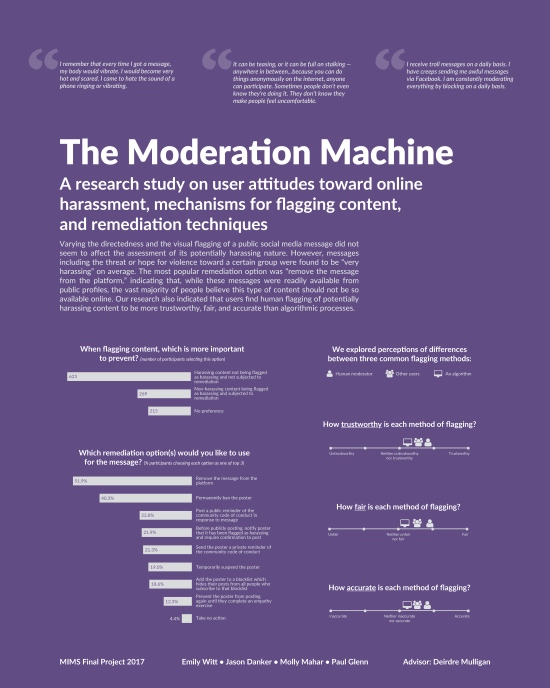

The “Moderation Machine” is a research study into user attitudes towards online harassment and mediation, as well as a tool that enables users of social media platforms to reflect on their own and others’ values with regard to online harassment. It is designed to inform public discourse as well as the development and evaluation of content policies and of technical solutions for preventing or remediating harassment.

The tool will promote reflection by asking users to make decisions and then showing them how those decisions compare with those of others who have used the tool, as well as with the content policies of various platforms. This comparison will help them better understand the extent to which their values are shared or unique. Users will be asked to rate 10-20 pieces of possibly harassing content on a 1-5 scale from “Not Harassment” to “Definitely Harassment.” They will also be asked to explore a wider range of decision options than simply taking down or leaving up a comment, such as a mechanism that informs the person who posted a comment that it could be considered harassing. Users will be able to consider how those decisions reflect values that are, could be, or should be explicitly encoded into the design of tools that allow users to communicate publicly with one another and that allow platforms to moderate user-generated content.
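As a rough illustration of the comparison step described above, the following Python sketch contrasts one user's 1-5 ratings with the ratings given by other users. It is only a sketch under stated assumptions: the description does not say how the comparison is computed, so the use of a simple mean, the function name `compare_ratings`, and the data layout (dictionaries keyed by a content ID) are all hypothetical.

```python
from statistics import mean

# Scale endpoints taken from the description:
# 1 = "Not Harassment", 5 = "Definitely Harassment".
SCALE_MIN, SCALE_MAX = 1, 5


def compare_ratings(user_ratings, others_ratings):
    """Compare one user's ratings with those of other users.

    user_ratings:   dict mapping content ID -> the user's rating (1-5).
    others_ratings: dict mapping content ID -> list of other users' ratings.

    Returns a dict mapping content ID -> the user's rating, the mean of the
    other ratings (None if nobody else rated the item), and the difference.
    Using the mean is an assumption made for this sketch.
    """
    report = {}
    for item_id, rating in user_ratings.items():
        if not SCALE_MIN <= rating <= SCALE_MAX:
            raise ValueError(f"rating for {item_id!r} is outside the 1-5 scale")
        peers = others_ratings.get(item_id, [])
        peer_mean = mean(peers) if peers else None
        report[item_id] = {
            "user": rating,
            "others_mean": peer_mean,
            "difference": None if peer_mean is None else rating - peer_mean,
        }
    return report


# Hypothetical example data: two comments rated by the user and by others.
example = compare_ratings(
    {"comment-1": 5, "comment-2": 2},
    {"comment-1": [3, 4, 5], "comment-2": [2, 2]},
)
```

A positive `difference` means the user judged the item as more clearly harassing than other users did on average; a value near zero suggests the judgment is widely shared.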

Amongst users of social media platforms, we will promote reflection on gray areas that have room for interpretation and on the non-binary moderation decisions that are deliberated within even those platforms that have clear policies, as well as on how these gray areas are or may be handled by automated decision-making algorithms. The project concept and initial design were inspired by The New York Times’ comment moderation test and MIT’s Moral Machine.