What are Predictors of Medication Change and Hospital Readmission in Diabetic Patients?

Abstract

Using a US hospital encounter dataset of over 70,000 unique patients, we attempted to determine the predictors of medication change and of readmission within 30 days in diabetic patients admitted to a hospital. We employed extensive preprocessing, including data cleaning, standardization, log transformations, data balancing and feature engineering. Modeling was done using logistic regression, decision trees and random forests. Abnormal HbA1c was found to be the strongest predictor (log-odds coefficient 0.62) of medication change, while number of medications in use was most important for tree-based classification. Discharge to another unit in the same hospital was the strongest predictor (log-odds coefficient 2.37) of 30-day readmission, while time spent in hospital and age of patient were most important in tree classification. For predicting medication change, logistic regression performed similarly to decision tree and random forest (64% accuracy, 44% recall), whereas for predicting readmission, 94% accuracy and 90% recall were achieved with a random forest using the Gini criterion.

Background

American hospitals spent over $41 billion in 2011 on diabetic patients who were readmitted within 30 days of discharge [1]. Researchers have attempted to find predictors of readmission rate [2] and, among other factors, medication change upon admission has been shown to be associated with lower readmission rates [3]. Medication change here refers to a change in glucose-lowering therapy (typically intensification of insulin therapy) upon admission, which is now included in the American Diabetes Association’s (ADA) guidelines [4]. Official recommendations from the ADA suggest that intravenous insulin therapy should be instituted in many patients upon admission, not just to control hyperglycemia but also to reduce the morbidity and mortality it can cause in other chronic conditions [4]. The predictors of medication change could offer insights into which patient characteristics and admission conditions influence whether physicians change a patient's medication. According to the Hospital Readmissions Reduction Program, which aims to improve quality of care for patients and lower healthcare spending, “A patient’s visit to a hospital may be constituted as a readmission if that patient is admitted to a hospital within 30 days after being discharged from an earlier hospital stay.” [5] Using a medical claims dataset, we planned to answer two questions: what are the predictors of medication change in diabetics who are admitted to a hospital, and which drugs and other predictors during the hospital stay are associated with lower readmission rates?

Dataset

We used the de-identified diabetes patient encounter data for 130 US hospitals (1999-2008) [6], containing 101,766 observations that represent 10 years of inpatient encounters with over 50 features, including patient characteristics, conditions, tests and 23 medications. Only diabetic encounters are included (i.e. at least one of the three primary diagnoses was diabetes). Some key features are given in the table below.

Methods

We performed extensive pre-processing and some feature engineering steps to refine the feature set for modeling. Key steps are outlined below.

- New Feature Creation: We created three new features from existing data, including a composite service utilization metric of the patient combining past year's emergency, inpatient and outpatient visits, number of medication changes during encounter, and number of medications used during the encounter. Further, we recoded over 900 primary diagnoses into disease categories based on body systems.

- Feature Encoding: All categorical variables were encoded as dummies for further processing. Age, which was originally present as categorical, was converted into a continuous variable by taking mid-point of the categories.

- Collapsing of Multiple Encounters: We tried multiple techniques to consolidate multiple encounters for the same patient, e.g. treating more than 2 readmissions across multiple encounters as a readmission for the collapsed record, or taking the percentage of medication changes across multiple encounters. However, for features such as “diagnosis”, we found no useful way to combine values across encounters. We then considered the first encounter and the last encounter separately as possible representations of multiple encounters. However, last encounters gave extremely imbalanced data for readmissions (96/4 Readmissions vs No Readmissions) and thus we decided to use first encounters.

- Log Transformation: Several numerical features, such as number_emergency, service utilization, number_inpatient and number_outpatient, had high skew and kurtosis. A feature was log transformed when its skew or kurtosis fell outside the range of -2 to 2.

- Interaction Terms: We identified possible candidates for interactions using a correlation analysis. Where one feature was duplicating the information contained in another, or was a derivative of another, we either dropped that feature or decided to test them independently. For the remaining situations (actual co-variance) we created interaction terms for modeling.

- Data Balancing: Data was highly imbalanced with respect to readmissions (only 10% of records were 30-day readmissions), leading to high null accuracy. We therefore used the synthetic minority over-sampling technique (SMOTE) to oversample our underrepresented class of readmissions.

- Outlier Removal: Since our variables were nearly normal after log transformation, we assumed that the range within 3 SDs on either side of the mean would include about 99.7% of the observations, and we restricted our data to within 3 SDs for each numeric column.

- Standardization: All numerical data were standardized by subtracting the mean from each value and dividing by the standard deviation.

- Creating feature sets for models: We created a complex feature set and a simpler one by replacing the detailed features with some composite features. For the first question (medication change as outcome), the detailed feature set had only 7 more variables, and hence we decided to use only the detailed set for this question.
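The transformation steps in the list above can be sketched in a few lines. This is a minimal standard-library illustration, not the pipeline used in the study: the function names, the `"[70-80)"` bracket format for age, the use of `log1p` (so that zero counts stay defined), the population standard deviation, and the toy `visits` column are all assumptions made for the example.

```python
import math
import statistics


def age_midpoint(bracket):
    """Convert an age bracket such as "[70-80)" (assumed format) to its midpoint."""
    low, high = bracket.strip("[)").split("-")
    return (int(low) + int(high)) / 2


def _standardized_moment(xs, power):
    mean = statistics.fmean(xs)
    sd = statistics.pstdev(xs)
    if sd == 0:
        return 0.0
    return sum(((x - mean) / sd) ** power for x in xs) / len(xs)


def skewness(xs):
    return _standardized_moment(xs, 3)


def excess_kurtosis(xs):
    return _standardized_moment(xs, 4) - 3


def log_if_skewed(xs, limit=2.0):
    """Log-transform a column only when skew or kurtosis leaves [-limit, limit]."""
    if abs(skewness(xs)) > limit or abs(excess_kurtosis(xs)) > limit:
        return [math.log1p(x) for x in xs]  # log1p is an assumption; counts include 0
    return list(xs)


def within_k_sd(xs, k=3.0):
    """Boolean mask: True where a value lies within k SDs of the column mean."""
    mean = statistics.fmean(xs)
    sd = statistics.pstdev(xs)
    return [abs(x - mean) <= k * sd for x in xs]


def standardize(xs):
    """Z-score: subtract the mean, divide by the standard deviation."""
    mean = statistics.fmean(xs)
    sd = statistics.pstdev(xs)
    return [(x - mean) / sd for x in xs]


# Hypothetical, heavily right-skewed count column (e.g. prior emergency visits).
visits = [0] * 40 + [1] * 5 + [2] * 3 + [15, 30]

transformed = log_if_skewed(visits)
mask = within_k_sd(transformed)
kept = [x for x, keep in zip(transformed, mask) if keep]
z_scores = standardize(kept)

print(age_midpoint("[70-80)"), len(visits), len(kept))
```

In a tabular pipeline the mask would be applied row-wise across all numeric columns, so that a row is dropped when any of its values falls outside the 3 SD range.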

Modeling

Our choice of models is governed primarily by our aim to understand the most important factors, along with their relative effects on medication change and readmission. Therefore, we did not implement models that have little or no interpretability (neural networks, support vector machines, nearest neighbors etc). The models that we implemented include the following.

- Logistic Regression: With the starting assumption that the impact of factors and their interactions can be modeled as the log odds of the outcome, logistic regression helped us understand the relative impact and statistical significance of each factor on the probability of medication change and readmission. Missing values were either removed (when too many) or encoded as a missing category. We tried both L1 and L2 regularization to penalize complex models and found little improvement (since we had made features normal or Gaussian-like after log transformation). We also tried Randomized Logistic Regression to select the most important features for our logistic regression model, but it gave poorer performance metrics than the feature sets considered above; hence, we decided to keep those feature sets to maintain consistency.

- Decision Trees: A decision tree iteratively and hierarchically splits the data to increase the certainty of predicting whether someone would need a medication change (or would be readmitted). Using both Gini Index and Entropy as attribute selection criteria, we determined the relative importance of different factors with a more human-like decision-making strategy.

- Random Forests: By building more than one decision tree and then taking a majority vote, random forests give more robust predictions than the single trees of the previous case. For both Decision Trees and Random Forests, we removed the interaction terms from the feature set, since tree-based models can capture interactions on their own.
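To make the two attribute selection criteria and the voting step concrete, the sketch below computes Gini impurity, entropy, the impurity reduction from a candidate split, and a majority vote across trees. The function names and the toy labels are illustrative assumptions and are not taken from the study's code.

```python
import math
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def entropy(labels):
    """Shannon entropy in bits: -sum p * log2(p) over observed classes."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def split_gain(parent, left, right, impurity):
    """Impurity reduction obtained by splitting `parent` into `left` and `right`."""
    n = len(parent)
    weighted = (len(left) / n) * impurity(left) + (len(right) / n) * impurity(right)
    return impurity(parent) - weighted


def majority_vote(predictions):
    """Random-forest style aggregation: the most common class among the trees."""
    return Counter(predictions).most_common(1)[0][0]


# Toy node with two classes (1 = medication changed, 0 = not changed).
node = [0, 0, 1, 1]
print(gini(node), entropy(node))                   # maximally mixed node
print(split_gain(node, [0, 0], [1, 1], gini))      # a perfect split
print(majority_vote([1, 0, 1]))                    # three hypothetical trees
```

A tree grows by choosing, at each node, the split with the largest gain under the chosen criterion; feature importance is then the total gain attributed to each feature, normalized across features.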

Results

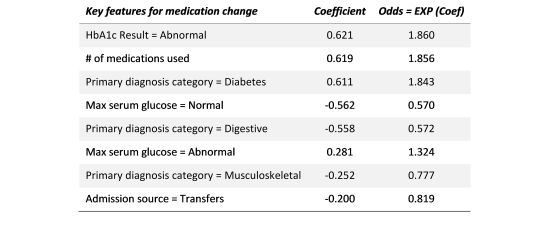

Question 1 - Logistic Regression

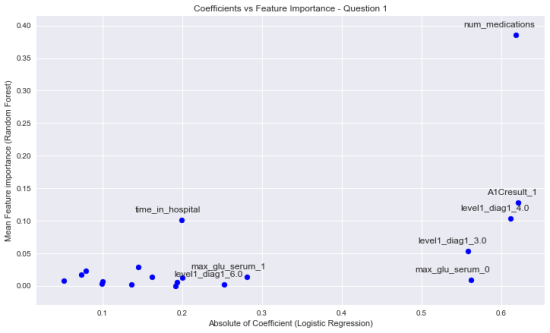

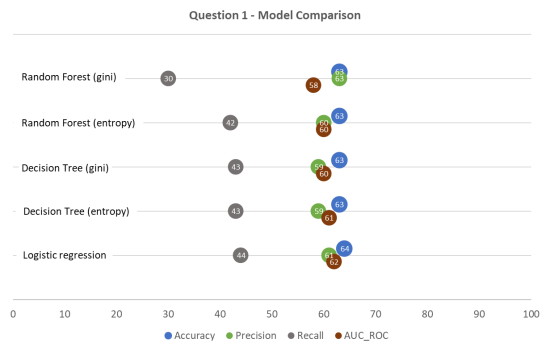

For medication change (Question 1), a Logistic Regression analysis was first conducted to predict medication change in admitted patients. A test of the full model against a constant-only model (Log-Likelihood Ratio) was statistically significant (p<0.001), indicating that the features as a set can distinguish between medication being changed or not. Coefficients with sizes larger than 0.2 and p<0.01 are shown in Figure 1. For model performance, we tested on a 20% test sample and found Accuracy to be 64% (i.e. Classification error of 36%) with a weak Recall of 45%.
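The likelihood ratio test mentioned above compares the log-likelihood of the fitted model with that of a constant-only model. The sketch below works through it on hypothetical counts for a model with a single binary predictor, where the maximum-likelihood fitted probabilities are simply the within-group proportions. The counts, the variable names, and the choice of McFadden's definition of pseudo R-squared are assumptions for illustration; the one-degree-of-freedom p-value formula applies only to this single-predictor toy case.

```python
import math


def bernoulli_log_likelihood(n_pos, n_neg, p):
    """Log-likelihood of n_pos successes and n_neg failures at probability p."""
    return n_pos * math.log(p) + n_neg * math.log(1 - p)


# Hypothetical counts: (outcome = 1, outcome = 0) for each level of a binary predictor.
groups = {"x=0": (30, 70), "x=1": (60, 40)}

total_pos = sum(pos for pos, _ in groups.values())
total_neg = sum(neg for _, neg in groups.values())

# Constant-only model: one shared probability for everyone.
p_null = total_pos / (total_pos + total_neg)
ll_null = bernoulli_log_likelihood(total_pos, total_neg, p_null)

# Full model: one fitted probability per predictor level.
ll_full = sum(
    bernoulli_log_likelihood(pos, neg, pos / (pos + neg))
    for pos, neg in groups.values()
)

# Likelihood ratio statistic, chi-square distributed with 1 df in this toy case.
lr_stat = 2 * (ll_full - ll_null)
p_value = math.erfc(math.sqrt(lr_stat / 2))  # chi-square survival function, 1 df

# McFadden's pseudo R-squared (assumed definition).
pseudo_r2 = 1 - ll_full / ll_null

print(f"LR = {lr_stat:.2f}, p = {p_value:.2g}, pseudo R2 = {pseudo_r2:.3f}")
```

In this toy case the likelihood ratio test is clearly significant even though the pseudo R-squared is small, which illustrates how the two quantities can diverge.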

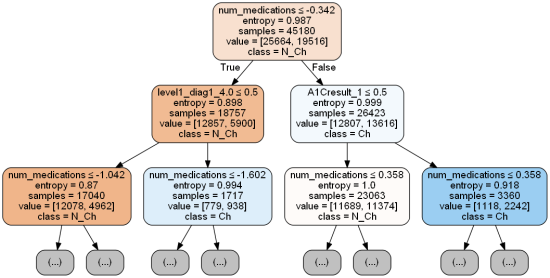

Question 1 - Decision Tree

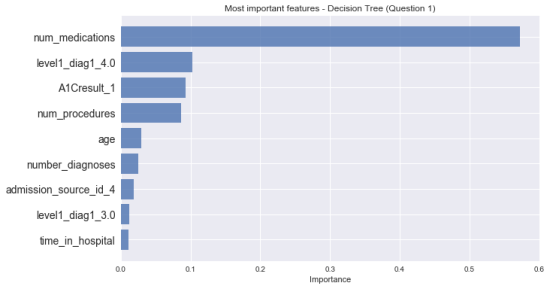

We found the decision tree classifier to perform best at a depth of 7, while the minimum sample size for leaves had little effect (kept at 10). For the sake of simplicity, only the first two levels of this decision tree are shown in Figure 2. Feature importances higher than 0.01 are plotted in Figure 3, with Number of medications used having the highest value of 0.57. This model gave a similar accuracy of 63%. Changing the information gain function to “Gini” yields almost the same results.

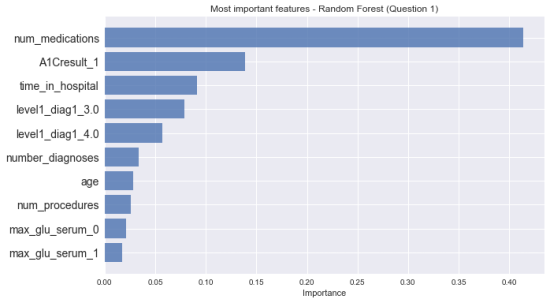

Question 1 - Random Forest

With Random Forest model, we achieved similar results with an accuracy of 63% regardless of using Gini or entropy function. Importantly, the top most important feature was still number of medications used, but after that the list of most important features differed (Figure 4).

Question 2 - Logistic Regression

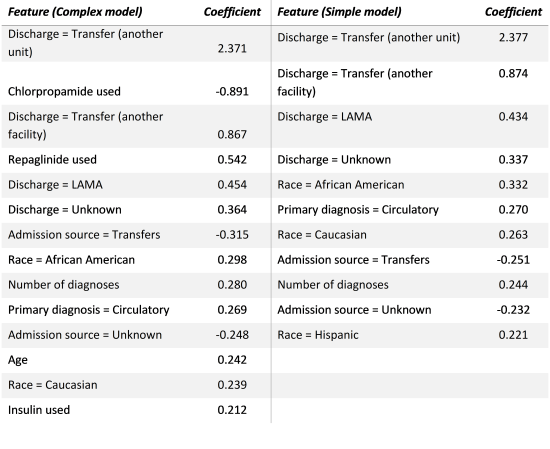

For readmission in 30 days (Question 2), a Logistic Regression analysis was conducted using the complex feature set to predict readmission. A test of the full model against a constant-only model (Log-Likelihood Ratio) was statistically significant (p<0.001). Coefficients with sizes larger than 0.1 and p<0.01 are listed in Figure 6. For model performance, we tested on a 20% test sample and found Accuracy to be 61% (i.e. Classification error of 39%) with a Recall of 55%. A similar analysis using the reduced feature set gave a test accuracy of 61%. Importance coefficients for the simple model are also given in Figure 6.

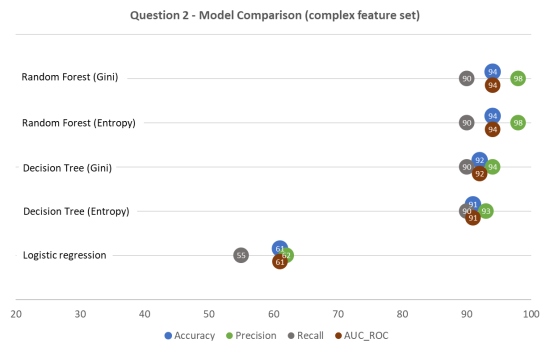

Question 2 - Decision Tree

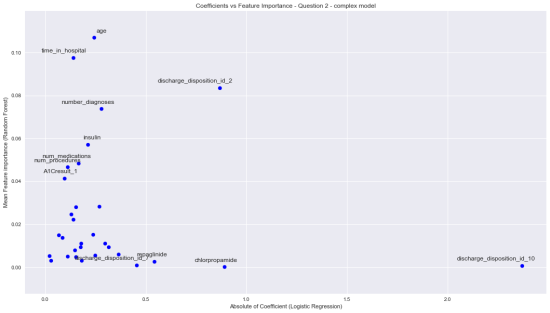

We found the decision tree classifier to perform best at a depth of 28, while the minimum sample size for leaves had little effect (kept at 10). Among feature importances higher than 0.01, time spent in hospital had the highest value of 0.37. This model gave a much better accuracy of 92%. Changing the information gain function to “Gini” yields almost the same accuracy of 92%.

Question 2 - Random Forest

With Random Forest model, we achieved an accuracy of 94% regardless of using Gini or Entropy function. However, the sequence of top most important features varied with these functions. Similar results were obtained for the simpler model as well.

Discussion

Regression coefficients and interpretation

As a general note for logistic regression coefficients reported, the interpretation is to be done while considering both the nature of logistic prediction (in terms of odds) and the transformations we have applied to the data before modeling. For example, in interpreting the effect of age on readmissions (coefficient = 0.19), we may follow these steps:

- EXP (Coefficient of age) = EXP (Log of unit odds change) = EXP (0.19) = 1.21
- Age was standardized and 1 SD of Age = 15 years
- Interpretation: For every 15 years increase in age, there is 21% increase in Odds of being readmitted versus not being readmitted
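The same arithmetic can be checked in code. The sketch below converts the coefficients quoted in this report into odds ratios and percentage changes in odds; the helper name `percent_change_in_odds` is an assumption, and the per-year figure relies on the stated standardization of age (1 SD = 15 years).

```python
import math


def percent_change_in_odds(coefficient):
    """Percentage change in odds for a one-unit increase in the predictor."""
    return (math.exp(coefficient) - 1) * 100


# Coefficients quoted in the text.
age_coef = 0.19              # per 1 SD of age (readmission model)
age_sd_years = 15
hba1c_abnormal_coef = 0.62   # abnormal HbA1c vs not tested (medication change model)
discharge_unit_coef = 2.37   # discharge to another unit (readmission model)

print(f"Age, per {age_sd_years} years: odds ratio {math.exp(age_coef):.2f}, "
      f"{percent_change_in_odds(age_coef):.0f}% higher odds")

# Because age was standardized, the per-year odds ratio divides the coefficient by the SD.
per_year_odds_ratio = math.exp(age_coef / age_sd_years)
print(f"Age, per 1 year: odds ratio {per_year_odds_ratio:.4f}")

print(f"Abnormal HbA1c: {percent_change_in_odds(hba1c_abnormal_coef):.0f}% higher odds")
print(f"Discharge to another unit: odds ratio {math.exp(discharge_unit_coef):.1f}")
```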

Question 1 – Medication Change as Outcome

When considering Medication Change as outcome, our logistic model indicates several predictors that are significantly associated with the outcome. The direction of all coefficients larger than 0.2 seems to make logical sense. For example, when the patient's HbA1c is abnormal, the odds of their medications being changed are 86% higher (reference class = not tested for HbA1c). When the Glucose Serum test is normal, the odds of medication change are 43% lower (reference = not tested for serum glucose). The original study [2] did not consider medication change as an outcome and hence comparisons cannot be drawn. However, the study did report higher chances of medication change when HbA1c testing was done compared to when it was not done at all. For the decision tree model, interpretation is based on feature importance, which is a measure of overall information gain for a given feature. In our model, we found Number of medications, Primary diagnosis diabetes, and HbA1c result abnormal to be the most important features in classifying medication change. Interestingly, these three features also have the highest coefficients in the logistic model (albeit in a different order). Figure 5 shows good agreement between several coefficient sizes and importances from these two models at higher values.

With random forest ensemble, we looked at mean feature importances, and found that number of medications and abnormal A1c remain most important but time spent in hospital emerged as a third most important feature. It is important to note that feature importances are only relative to all other features in the model, and cannot be generalized to outside world. We also looked at overall model performance metrics such as accuracy, precision, recall and area under ROC curve, as shown in Figure 8. Accuracies for all models hover around 58%-64%, and all of them tend to produce a lot of false negatives (hence low recall).
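As a reminder of how these metrics relate to each other, the sketch below computes accuracy, precision, recall and a rank-based area under the ROC curve on a small hypothetical set of labels and scores. The data, the 0.5 threshold and the function names are assumptions chosen for illustration only.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision and recall for binary labels (1 = positive class)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,  # false negatives lower recall
    }


def roc_auc(y_true, scores):
    """Probability that a random positive is scored above a random negative."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


# Hypothetical true labels and predicted probabilities for ten encounters.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.6, 0.4, 0.3, 0.7, 0.45, 0.35, 0.2, 0.2, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed 0.5 decision threshold

metrics = classification_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(metrics, auc)
```

Here two of the four positives fall below the threshold, so recall is lower than accuracy, the same pattern of false negatives described above.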

Question 2 – Readmission as Outcome

For Readmission as outcome, logistic regression produced similar coefficients for both feature sets, except that the complex model also contained several drugs as predictors. The strongest predictors of readmission within 30 days appear to be four types of discharge conditions in both versions. Intuitively, these make sense: transfer to another unit in a hospital or to another hospital indicates severity of disease and likely readmission. Interestingly, patients who Left Against Medical Advice (LAMA) are also likely to be readmitted, perhaps because their condition was not fit for discharge in the first place. Unfortunately, the original study grouped discharge types into only two categories, so comparison is not possible. However, the effects of race are indirectly in agreement (directionally at least). The reference study also did not include individual drugs in its analysis, and our analysis suggests that use of Repaglinide and Insulin is associated with higher odds of readmission while Chlorpropamide usage is associated with lower odds. The usage of these drugs can be very situation specific, and our analysis did not reveal any generic trends among families of drugs.

Our decision tree model applied to this question indicated the highest importance for time spent in hospital, age and discharge to another hospital, for both the simple and complex versions. As shown in Figure 7, these importances do not correlate well with coefficient sizes from the logit model. Since we used cross-validation and obtained similar train and test accuracy, overfitting is an unlikely explanation; the discrepancy may instead suggest a different correlation structure of the variables in the model or lower explained variance in the logistic model. For the random forest ensemble, the same features had high importance, although the distribution was more even compared to the decision tree. This is likely due to stabilization of importances across many trees.

The overall model performance metrics for different models applied to Question 2 are depicted in Figure 9. The difference between performance of logistic versus tree based models is remarkable. Besides accuracy, recall is important here since hospitals get penalized and incur additional costs both for the patient and the insurance agencies if a patient expected not to be readmitted shows up in 30 days.
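A short sketch shows why accuracy alone is not enough on this outcome. It assumes the roughly 10% readmission rate reported in the Methods section and a hypothetical baseline that never predicts readmission; the variable names are illustrative.

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred):
    positives = sum(y_true)
    hits = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    return hits / positives if positives else 0.0


# 100 hypothetical encounters with a 10% readmission rate (1 = readmitted).
y_true = [1] * 10 + [0] * 90

# Null model: always predict the majority class ("not readmitted").
y_null = [0] * len(y_true)

null_accuracy = accuracy(y_true, y_null)
null_recall = recall(y_true, y_null)
print(null_accuracy, null_recall)
```

The null model looks accurate while missing every readmission, which is why recall is reported alongside accuracy for this question.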

Limitations

Besides other factors mentioned above, the dataset does not include many important factors such as access to care, which has been shown in one study to account for 58% of variation in readmission rates [11]. There may be many other factors, depending on the situation, that affect readmissions. While the high performance is partially attributable to the synthetic data created using SMOTE, we expect performance on real-life data to be close to it, within conservative error estimates. Undersampling would be a preferred method if a large enough dataset were available. Both logistic models showed a poor Pseudo R-Squared value, which may point towards a lot of noise in the data, although Pseudo R-Squared is not a good measure of fit for logistic models. However, the effects are still statistically significant based on the Likelihood Ratio tests for both.
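To show what "synthetic data" means here, the sketch below implements the core idea of SMOTE: each new minority sample is placed at a random point on the line segment between an existing minority sample and one of its nearest minority neighbours. It is a simplified illustration under assumed names and toy data, not the implementation used in the study.

```python
import math
import random


def smote_sketch(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points by interpolating between minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority-class neighbours of the chosen base point.
        neighbours = sorted(
            (point for point in minority if point is not base),
            key=lambda point: math.dist(base, point),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(
            tuple(b + gap * (n - b) for b, n in zip(base, neighbour))
        )
    return synthetic


# Hypothetical standardized minority-class samples with two features.
minority = [(1.0, 1.0), (1.5, 1.2), (2.0, 0.8), (1.2, 1.9)]
new_points = smote_sketch(minority, n_new=6)
print(new_points)
```

Because every synthetic point lies between real minority samples, the oversampled data are denser than, but not independent of, the original records. Whether oversampling is applied before or after the train/test split affects how closely test scores reflect real-life data; the text above does not state which order was used.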

Conclusion

Our study has three broad conclusions. First, appropriate pre-processing methods can significantly affect the outputs of the models and provide better insights into predictors of medication change and readmission, especially when handling imbalanced data. Second, in comparison with the previous study using the same dataset, we determined a much more nuanced set of predictors and their effects in an interpretable manner, for example the independent effect of abnormal HbA1c on medication change and the effects of various patient discharge conditions on readmission odds. Third, concerning outcome prediction, tree-based models can outperform logistic regression when decision boundaries are non-linear.

References

1. Hines AL, Barrett ML, Jiang HJ, Steiner CA. Conditions with the largest number of adult hospital readmissions by payer, 2011: Statistical Brief #172.

2. Strack B, DeShazo JP, Gennings C, Olmo JL, Ventura S, Cios KJ, Clore JN. Impact of HbA1c measurement on hospital readmission rates: analysis of 70,000 clinical database patient records. BioMed research international. 2014 Apr 3;2014.

3. Wei NJ, Wexler DJ, Nathan DM, Grant RW. Intensification of diabetes medication and risk for 30‐day readmission. Diabetic Medicine. 2013 Feb 1;30(2).

4. Moghissi ES, Korytkowski MT, DiNardo M, Einhorn D, Hellman R, Hirsch IB, Inzucchi SE, Ismail-Beigi F, Kirkman MS, Umpierrez GE. American Association of Clinical Endocrinologists and American Diabetes Association consensus statement on inpatient glycemic control. Diabetes care. 2009 Jun 1;32(6):1119-31.

5. The Hospital Readmissions Reduction (HRR) Program, Center for Medicare and Medicaid Services. Website: https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Ins…

6. Diabetes 130-US hospitals for years 1999-2008 Data Set provided by Center for Clinical and Translational Research, Virginia Commonwealth University. Available at: https://archive.ics.uci.edu/ml/datasets/diabetes+130-us+hospitals+for+y…

7. Lipska KJ, Ross JS, Wang Y, Inzucchi SE, Minges K, Karter AJ, Huang ES, Desai MM, Gill TM, Krumholz HM. National trends in US hospital admissions for hyperglycemia and hypoglycemia among Medicare beneficiaries, 1999 to 2011. JAMA internal medicine. 2014 Jul 1;174(7):1116-24.

8. Futoma J, Morris J, Lucas J. A comparison of models for predicting early hospital readmissions. Journal of biomedical informatics. 2015 Aug 31;56:229-38.

9. Shameer K, Johnson KW, Yahi A, Miotto R, Li L, Ricks D, Jebakaran J, Kovatch P, Sengupta PP, Gelijns A, Moskovitz A. Predictive modeling of hospital readmission rates using electronic medical record-wide machine learning: a case-study using Mount Sinai Heart Failure Cohort. In Pacific Symposium on Biocomputing. Pacific Symposium on Biocomputing 2016 (Vol. 22, p. 276). NIH Public Access.

10. Moghissi ES, Korytkowski MT, DiNardo M, et al. American Association of Clinical Endocrinologists and American Diabetes Association consensus statement on inpatient glycemic control. Diabetes care. 2009;32(6):1119-31.

11. Herrin J, St Andre J, Kenward K, Joshi MS, Audet AM, Hines SC. Community factors and hospital readmission rates. Health Services Research. 2015 Feb 1;50(1):20-39.