Improving Context Based Thesaurus

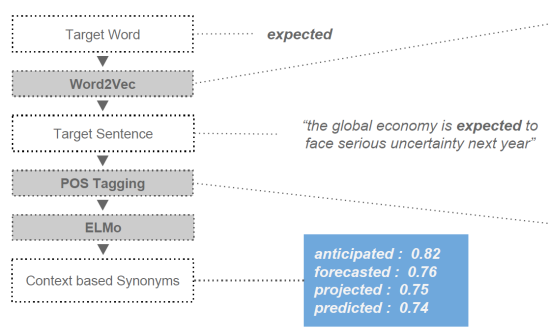

One way to capture semantic similarity between words is to use word embeddings. These are general-purpose vector representations of a word, but they do not capture the local context in which the word appears. We build on the word embedding model by using a context-aware bi-directional LSTM with adaptive word embeddings, and compare the results against more traditional language models.
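To make the limitation of static embeddings concrete, here is a minimal sketch of an embedding-based thesaurus lookup. It is not the project's model: the vectors are hand-made toy values, and the names `EMBEDDINGS`, `cosine`, `synonyms`, and `synonyms_in_sentence` are hypothetical helpers introduced only for illustration. The point it shows is that a plain embedding lookup returns the same neighbours for a word no matter which sentence it appears in.

```python
import math

# Toy 3-d vectors, hand-made for illustration only (not trained embeddings).
EMBEDDINGS = {
    "bank":  [0.9, 0.6, 0.1],
    "river": [0.1, 0.9, 0.2],
    "money": [0.9, 0.1, 0.3],
    "shore": [0.2, 0.8, 0.1],
    "loan":  [0.8, 0.2, 0.2],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def synonyms(word, k=2):
    """Return the k words whose vectors are closest to `word` by cosine similarity."""
    target = EMBEDDINGS[word]
    scored = [
        (other, cosine(target, vec))
        for other, vec in EMBEDDINGS.items()
        if other != word
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [other for other, _ in scored[:k]]


def synonyms_in_sentence(sentence, word, k=2):
    """Static lookup: the sentence is accepted but deliberately ignored,
    because a fixed embedding has one vector per word regardless of context."""
    return synonyms(word, k)


if __name__ == "__main__":
    print(synonyms_in_sentence("we sat on the river bank", "bank"))
    print(synonyms_in_sentence("she deposited money at the bank", "bank"))
```

Both calls print the same list, even though "bank" means something different in each sentence. A context-aware model would instead compute the representation of "bank" from the surrounding words before ranking candidates.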