Emotion Detection at the Edge

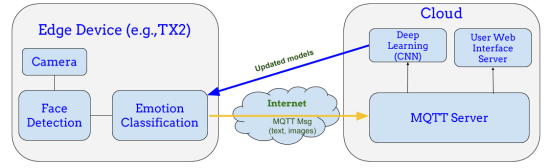

People with Autism Spectrum Disorder (ASD) often have difficulty with social communication and interaction. In particular, they may struggle to detect and classify the emotions of the person they are interacting with and to react appropriately. Receiving information about others' emotions can give them the tools to respond more effectively. In this project, we built on existing open-source facial emotion classification work and brought it to the edge. In our architecture, inference runs at the edge on images captured by a camera. Those images are then sent to the cloud, where they are used to continue refining the model and to power a user-facing web interface.