Resilient Iris Recognition

Problem Statement

Artificial intelligence and machine learning are becoming more prevalent in consumer technologies and throughout our global networks. Although very useful, this powerful prediction capability also raises a host of privacy and security concerns. For example, adversarial machine learning models are being used to perturb input data so that a target model misclassifies it. These misclassifications can lead to incorrect authorization decisions. The attacks come in several forms, described below.

General Misclassification vs Source/Target Misclassification

General Misclassification means pushing the targeted model to misclassify the data, regardless of what the original label was or what the resulting label is. Source/Target Misclassification means directing the targeted machine learning model to assign a particular, attacker-chosen label to a data point.
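
The two success criteria can be written as simple predicates. This is a minimal sketch for illustration only; the function names and the example labels (`alice`, `bob`, `carol`) are hypothetical and do not come from the project.

```python
def general_misclassification_succeeded(original_label, adversarial_prediction):
    """General case: any label other than the original counts as success."""
    return adversarial_prediction != original_label


def source_target_misclassification_succeeded(target_label, adversarial_prediction):
    """Source/target case: only the attacker-chosen label counts as success."""
    return adversarial_prediction == target_label


# Hypothetical scenario: an iris belonging to "alice" is perturbed and the
# model now predicts "carol"; the attacker wanted it to predict "bob".
original, target, predicted = "alice", "bob", "carol"

general_ok = general_misclassification_succeeded(original, predicted)
targeted_ok = source_target_misclassification_succeeded(target, predicted)
```

In this scenario the general attack succeeds (the label changed) while the source/target attack does not (the label is not the one the attacker chose), which shows that the second criterion is strictly harder to satisfy.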

White-Box vs Black-Box

In a White-Box model, the attacker has access to the model and to its gradient (the derivative of the model's loss with respect to its input or parameters). In a Black-Box model, the attacker has no access to the internals of the target model and can, at most, observe its outputs. We will address a white-box approach first and explore the possibility of a black-box approach contingent upon the identified challenges.
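
To make the role of the gradient concrete, the sketch below applies the fast gradient sign method (FGSM), one well-known white-box technique, to a toy logistic-regression classifier. The source does not state which attack or model the project uses, so FGSM, the weights `w`, the bias `b`, the input `x`, and the step size `eps` are all assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def input_gradient(w, b, x, y):
    """Gradient of the cross-entropy loss with respect to the input x.

    For logistic regression this has the closed form (p - y) * w.
    A white-box attacker can compute it because w and b are known.
    """
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Move each input feature by eps in the direction that increases the loss."""
    grad = input_gradient(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical toy model and input (not taken from the project).
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.3, 0.1, 0.2], 1

x_adv = fgsm(w, b, x, y, eps=0.3)
label_before = int(predict_proba(w, b, x) > 0.5)
label_after = int(predict_proba(w, b, x_adv) > 0.5)
```

With these toy values the predicted label changes from 1 to 0 even though no feature moves by more than `eps`. A black-box attacker could not call `input_gradient` directly and would have to estimate the same direction from the model's outputs alone.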

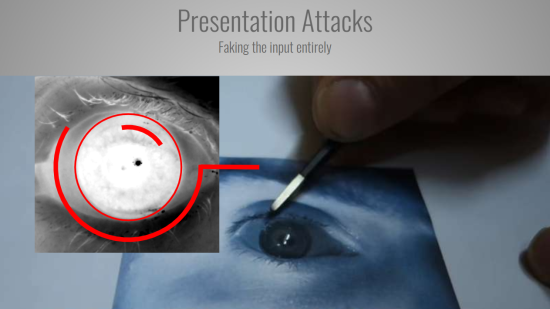

Presentation Attacks

Visual classification systems based on machine learning are especially prone to granting unauthorized access when they recognize learned patterns in artificial input data (e.g., a printout of an iris from a high-resolution color printer). Like perturbations of the input data in adversarial machine learning, mimicry of valid input data poses a high risk of subversion. Fake input sources must therefore be considered in the pursuit of a robust visual authentication system.

Replay Attacks

In a replay attack, the attacker intercepts the data sent from the sensor to the recognition system and replays it at a later time. This attack also threatens other authentication systems and can be addressed in the protocol that connects the sensor to the recognition system.
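
One common way to handle replay at the protocol level is a challenge-response exchange with single-use nonces and a message authentication code. The sketch below illustrates that idea with Python's standard `hmac` and `secrets` modules; it is not the project's protocol. The `Verifier` class, the `sensor_sign` function, and the pre-shared key are assumptions made for this example.

```python
import hashlib
import hmac
import secrets


def sensor_sign(key, nonce, payload):
    """Sensor side: bind the captured data to the verifier's fresh nonce."""
    return hmac.new(key, nonce + payload, hashlib.sha256).digest()


class Verifier:
    """Recognition-system side: accepts each issued nonce at most once."""

    def __init__(self, key):
        self._key = key
        self._pending = set()

    def new_challenge(self):
        nonce = secrets.token_bytes(16)
        self._pending.add(nonce)
        return nonce

    def verify(self, nonce, payload, tag):
        if nonce not in self._pending:
            return False  # unknown or already-used nonce: treat as a replay
        self._pending.discard(nonce)  # a nonce is valid for one attempt only
        expected = hmac.new(self._key, nonce + payload, hashlib.sha256).digest()
        return hmac.compare_digest(expected, tag)


# Hypothetical exchange with a key shared between sensor and verifier.
key = secrets.token_bytes(32)
verifier = Verifier(key)

nonce = verifier.new_challenge()
payload = b"iris-capture-bytes"  # placeholder for real sensor data
tag = sensor_sign(key, nonce, payload)

first_attempt = verifier.verify(nonce, payload, tag)   # genuine message
replayed_attempt = verifier.verify(nonce, payload, tag)  # same bytes, sent again
```

The genuine message is accepted and the identical replayed message is rejected, because the nonce it was bound to has already been consumed. A real deployment would also need nonce expiry and secure key provisioning, which this sketch leaves out.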

Project Objective

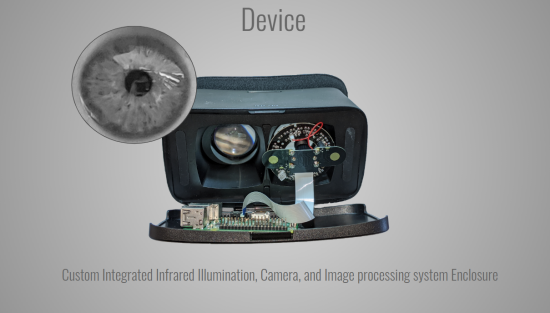

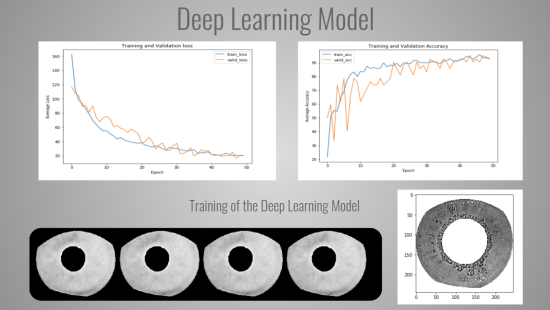

We will demonstrate how easy it is to attack popular machine learning models used for identity and access authorization. We will also present our research, design, and proposed solution to such attacks, and demonstrate the hardware and software components of our risk mitigation framework.