BIASCheck

Problem & Motivation

BIASCheck is an end-to-end bias mitigation service focused on minimizing subjective bias in written language. BIASCheck defines subjectivity as "the quality of being based on or influenced by personal feelings, tastes, or opinions." Subconscious subjective biases are often embedded in everyday communication, even when a writer aims to be neutral. As society becomes increasingly diverse and intersectional, the way we communicate has to adapt to prevent bias, marginalization, and microaggressions in language. Yet even though many people agree that bias should be minimized, there are currently no tools that let writers automatically check their own writing for bias. Within NLP, there are no strong pre-existing tools for measuring this kind of bias: existing solutions focus on toxic language or hate speech, or on measuring bias in the models themselves. Subjective biases are more nuanced and harder to detect, but there is an opportunity to address them efficiently by fine-tuning pre-existing language models. BIASCheck aims to bridge the gap between current solutions and these nuanced subjective biases, helping writers communicate more objectively. Its primary target users are HR business partners (HRBPs) and communication specialists seeking a bias mitigation tool. The market opportunity is vast, because existing solutions focus primarily on less nuanced forms of bias and there is a lack of user-facing products.

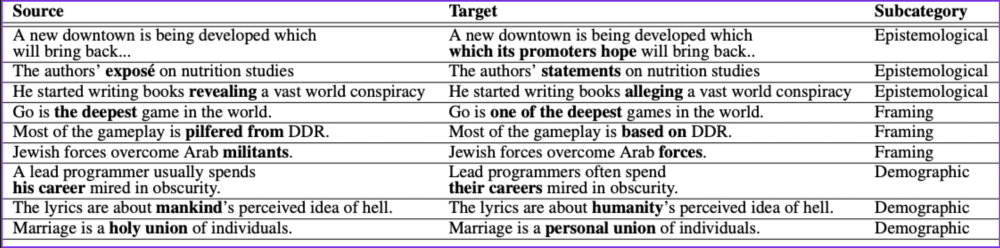

Data Source: Wiki Neutrality Corpus

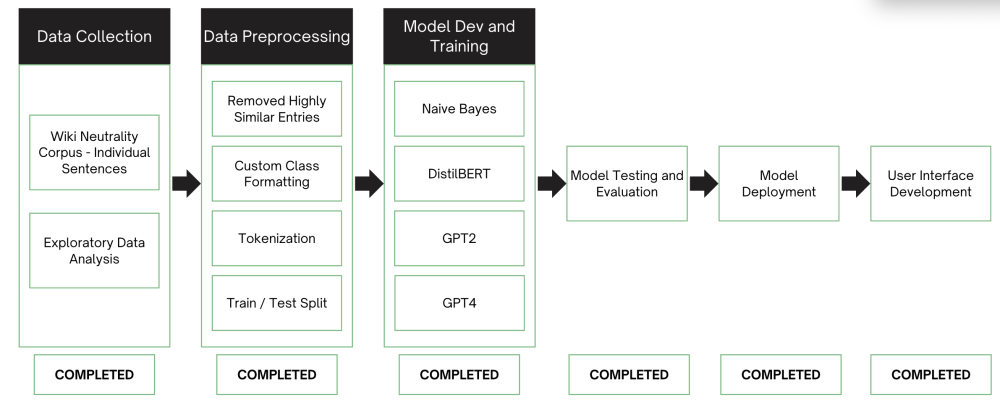

The primary dataset is the Wiki Neutrality Corpus (WNC), a collection of more than 180,000 sentence pairs drawn from edits made under Wikipedia's neutral point of view policy. The dataset is available on Kaggle and was introduced in an accompanying research paper. Although the data was relatively clean, exploratory data analysis revealed numerous duplicates, which were removed. Cosine similarity was also used to identify and exclude near-duplicate records.
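The exact deduplication procedure is not specified, so the following is only a minimal sketch under stated assumptions: sentences are represented as lowercased bag-of-words counts, records are compared on their source sentence (a source and its own target are near-identical by construction, so comparing them would remove almost everything), and the 0.95 cutoff is a hypothetical value. The helper names `bag_of_words`, `cosine`, and `deduplicate_pairs` are illustrative.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercased word counts: a simple stand-in for whatever vectorizer the project used."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def deduplicate_pairs(pairs, threshold=0.95):
    """Drop exact duplicate (source, target) records, then drop any record whose
    source sentence has cosine similarity >= threshold with an already-kept one.
    The 0.95 cutoff is hypothetical, not the project's setting."""
    kept, kept_vectors, seen = [], [], set()
    for source, target in pairs:
        if (source, target) in seen:
            continue  # exact duplicate record
        seen.add((source, target))
        vector = bag_of_words(source)
        if any(cosine(vector, other) >= threshold for other in kept_vectors):
            continue  # near-duplicate of a record already kept
        kept.append((source, target))
        kept_vectors.append(vector)
    return kept


pairs = [
    ("The film is a masterpiece.", "The film is well regarded."),
    ("The film is a masterpiece.", "The film is well regarded."),  # exact duplicate
    ("The film is a masterpiece!", "The film is well regarded."),  # same words, different punctuation
    ("He bravely led the army.", "He led the army."),
]
print(deduplicate_pairs(pairs))
```

The pairwise loop is quadratic, which is fine for illustration; at the scale of the WNC, a vectorized or blocked similarity search would be needed.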

The figure above shows a set of examples from the WNC. The dataset includes examples of framing bias as well as demographic bias, such as gendered and religious language. For the classification task, source and target sentences were labeled biased and neutral, respectively. For the neutralization task, each source/target pair was kept together.
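The two ways of using the same pairs can be sketched as follows. This is an illustrative assumption of the data layout, not the project's code: the integer label encoding (1 for biased, 0 for neutral) and the `input`/`output` field names are hypothetical.

```python
BIASED, NEUTRAL = 1, 0  # hypothetical label encoding


def to_classification_examples(pairs):
    """Flatten (source, target) pairs into labeled sentences:
    the pre-edit source is 'biased', the post-edit target is 'neutral'."""
    examples = []
    for source, target in pairs:
        examples.append((source, BIASED))
        examples.append((target, NEUTRAL))
    return examples


def to_neutralization_examples(pairs):
    """Keep each pair together as an input/output example for rewriting."""
    return [{"input": source, "output": target} for source, target in pairs]


pairs = [("He bravely led the army.", "He led the army.")]
print(to_classification_examples(pairs))
print(to_neutralization_examples(pairs))
```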

Data Science Approach & Evaluation

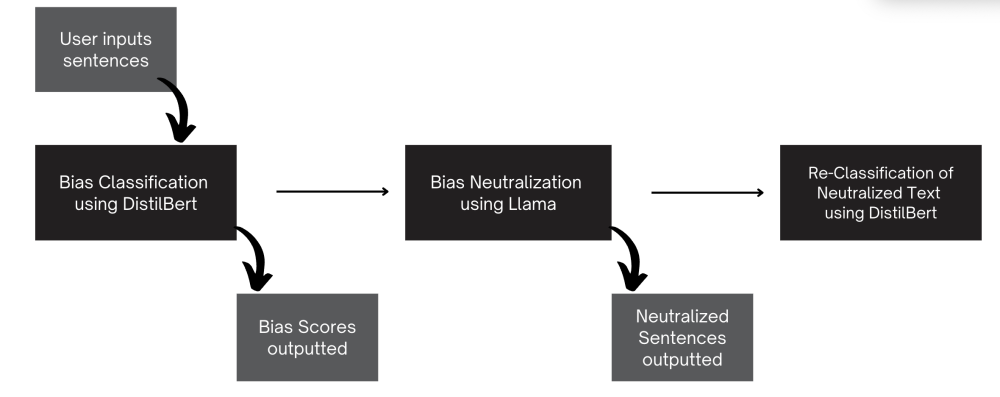

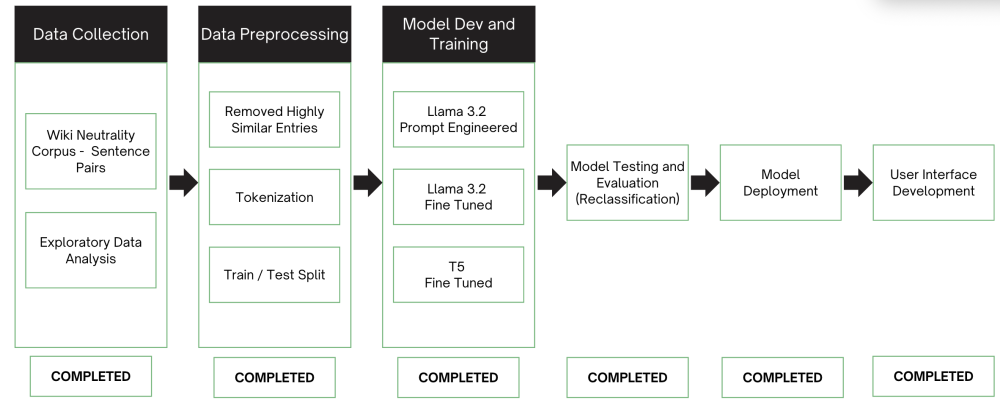

As an overview, BIASCheck consists of two models: a bias detection model and a bias neutralization model. The detection model is a classifier that labels input text as "biased" or "neutral"; text is labeled biased if its bias score is greater than or equal to 50%. Biased text is then rewritten by an LLM-based neutralization model. To check whether the LLM did an adequate job, its output is passed back through the classifier and tested for bias again. The overview figures in this section summarize the evaluation process flow.
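The detect, neutralize, and re-check loop can be sketched as a small orchestration function. This is a minimal sketch assuming the two models are exposed as plain callables; `score_bias`, `neutralize`, `toy_score`, and `toy_neutralize` are hypothetical stand-ins (the real components are the DistilBERT classifier and the LLM), and only the 0.5 threshold comes from the text.

```python
from dataclasses import dataclass
from typing import Callable, Optional

THRESHOLD = 0.5  # from the text: text is "biased" if its score is >= 50%


@dataclass
class CheckResult:
    original: str
    original_score: float
    rewritten: Optional[str] = None
    rewritten_score: Optional[float] = None

    @property
    def neutral_after_rewrite(self) -> Optional[bool]:
        """None if no rewrite was needed; otherwise whether the rewrite passes the classifier."""
        if self.rewritten_score is None:
            return None
        return self.rewritten_score < THRESHOLD


def bias_check(text: str,
               score_bias: Callable[[str], float],
               neutralize: Callable[[str], str]) -> CheckResult:
    score = score_bias(text)
    if score < THRESHOLD:
        return CheckResult(text, score)  # already neutral: no rewrite
    rewritten = neutralize(text)
    # Re-score the LLM output with the same classifier to verify the rewrite.
    return CheckResult(text, score, rewritten, score_bias(rewritten))


# Hypothetical toy stand-ins for the classifier and the LLM.
def toy_score(text: str) -> float:
    return 0.9 if "amazing" in text.lower() else 0.1


def toy_neutralize(text: str) -> str:
    return text.replace("amazing ", "")


print(bias_check("The amazing speech moved the crowd.", toy_score, toy_neutralize))
```

Reusing the detector as the judge keeps the loop simple, but it also means the rewrite is only as well validated as the classifier itself.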

Model 1: Bias Detection (Classification Task)

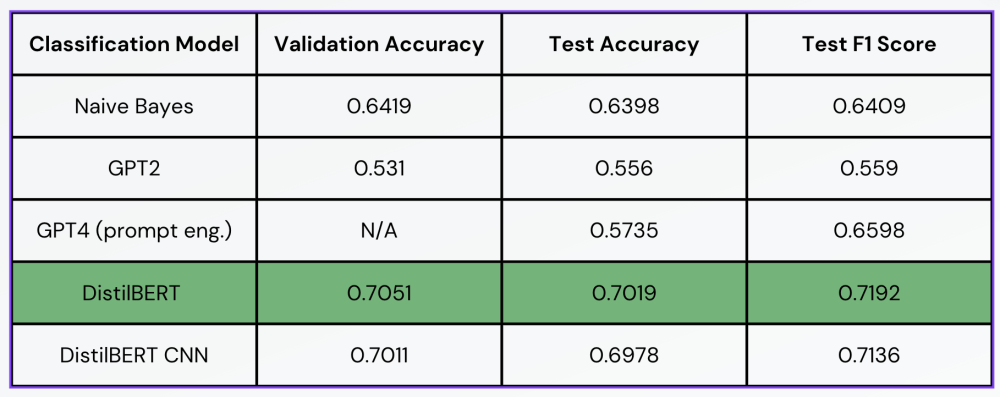

Exploratory data analysis revealed roughly 10,000 duplicate or highly similar entries, which were identified and removed using cosine similarity. During preprocessing, the class labels were reformatted for modeling, and low-quality text was removed. Data engineering work included evaluating several tokenizers, such as spaCy, to ensure appropriate tokenization. For model development, Naive Bayes and GPT-2 (used as a classifier rather than as a text generator) were implemented as binary classification baselines for bias detection, and were then improved upon with Transformer-based models.
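The Naive Bayes baseline's configuration is not described, so the following is a from-scratch multinomial Naive Bayes with add-one (Laplace) smoothing over simple word tokens, offered as an illustrative sketch rather than the project's implementation. The tokenizer regex, the `MultinomialNaiveBayes` class, and the toy training sentences are all assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercased word tokens (a hypothetical stand-in for spaCy or another tokenizer)."""
    return re.findall(r"[a-z0-9']+", text.lower())


class MultinomialNaiveBayes:
    def __init__(self, alpha=1.0):
        self.alpha = alpha  # additive (Laplace) smoothing

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        class_totals = Counter(labels)
        self.log_prior = {c: math.log(class_totals[c] / len(labels)) for c in self.classes}
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def _log_likelihood(self, word, c):
        count = self.word_counts[c][word]
        return math.log((count + self.alpha) / (self.total_words[c] + self.alpha * len(self.vocab)))

    def predict_proba(self, text):
        """Posterior P(class | text), ignoring words never seen in training."""
        words = [w for w in tokenize(text) if w in self.vocab]
        log_scores = {c: self.log_prior[c] + sum(self._log_likelihood(w, c) for w in words)
                      for c in self.classes}
        top = max(log_scores.values())  # subtract max for numerical stability
        exp_scores = {c: math.exp(s - top) for c, s in log_scores.items()}
        total = sum(exp_scores.values())
        return {c: v / total for c, v in exp_scores.items()}

    def predict(self, text):
        proba = self.predict_proba(text)
        return max(proba, key=proba.get)


texts = ["He bravely led the army.", "He led the army.",
         "The brilliant author wrote a novel.", "The author wrote a novel."]
labels = [1, 0, 1, 0]  # 1 = biased (source), 0 = neutral (target)
model = MultinomialNaiveBayes().fit(texts, labels)
print(model.predict("A brilliant leader."), model.predict_proba("He led the army."))
```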

In addition to Naive Bayes and GPT-2, GPT-4 (via prompt engineering), DistilBERT, and DistilBERT CNN were evaluated for the detection task. Based on validation and test performance, DistilBERT was selected as the best detection model, with an F1 score of around 71% on both the validation and test sets. This model classifies from the CLS token representation, includes one hidden layer, uses a maximum sequence length of 512 tokens to handle very long sentences, and was fine-tuned for three epochs. Recall and F1 score were prioritized to ensure the model reliably identifies biased text. Adjusting the classification threshold to optimize F1 was also attempted, but it reduced recall, so this approach was not adopted.
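To make the recall/F1 trade-off concrete, the sketch below computes precision, recall, and F1 with "biased" as the positive class and sweeps the decision threshold around the 0.5 rule described earlier. The scores and labels are made-up toy values for illustration only, not project results, and the function names are hypothetical.

```python
def precision_recall_f1(scores, labels, threshold=0.5):
    """Binary metrics with 'biased' (label 1) as the positive class;
    a text is predicted biased when its score >= threshold."""
    tp = fp = fn = 0
    for score, label in zip(scores, labels):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1 and label == 0:
            fp += 1
        elif predicted == 0 and label == 1:
            fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def threshold_sweep(scores, labels, thresholds):
    """Metrics at each candidate threshold, to see how recall trades against precision."""
    return {t: precision_recall_f1(scores, labels, t) for t in thresholds}


# Toy scores and labels for illustration only (not project results).
scores = [0.9, 0.7, 0.55, 0.4, 0.3, 0.6]
labels = [1, 1, 0, 1, 0, 0]
for t, (p, r, f) in threshold_sweep(scores, labels, [0.3, 0.5, 0.65, 0.8]).items():
    print(f"threshold={t:.2f} precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```

Raising the threshold tends to trade recall for precision, which is why a threshold chosen purely to maximize F1 can conflict with a recall-first priority.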

Beyond testing on the dataset, novel inputs were fed into the model to evaluate its behavior. Examples from the CrowS-Pairs dataset, which contains stereotyped sentences, received high bias scores. Subjective movie reviews were also tested and likewise received biased scores.

Model 2: Bias Neutralization

The second component of BIASCheck is bias neutralization. Preprocessing for this task followed the same process as for bias detection. We developed three models: a prompt-engineered LLaMA model, a fine-tuned LLaMA model, and a fine-tuned T5 model.
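The prompts and training formats are not given in the text, so the following is only a hedged sketch of how WNC pairs could be turned into inputs for the three model variants. The instruction wording, the `prompt`/`completion` and `input_text`/`target_text` field names, and the `neutralize:` T5 task prefix are all hypothetical choices, not the project's actual templates.

```python
# Hypothetical instruction wording; not the project's actual prompt.
INSTRUCTION = (
    "Rewrite the following sentence so that it is neutral and objective. "
    "Keep the factual content and change as few words as possible."
)


def build_prompt(sentence: str) -> str:
    """Prompt for the prompt-engineered model, also reused as the fine-tuning input."""
    return f"{INSTRUCTION}\n\nSentence: {sentence}\nNeutral rewrite:"


def to_llm_training_record(source: str, target: str) -> dict:
    """Prompt/completion record for supervised fine-tuning of a causal LLM."""
    return {"prompt": build_prompt(source), "completion": " " + target}


def to_t5_example(source: str, target: str) -> dict:
    """Text-to-text example for a seq2seq model, using a hypothetical task prefix."""
    return {"input_text": "neutralize: " + source, "target_text": target}


record = to_llm_training_record("He bravely led the army.", "He led the army.")
print(record["prompt"])
print(to_t5_example("He bravely led the army.", "He led the army."))
```

Sharing one prompt between the prompt-engineered and fine-tuned variants keeps their comparison closer to like-for-like.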

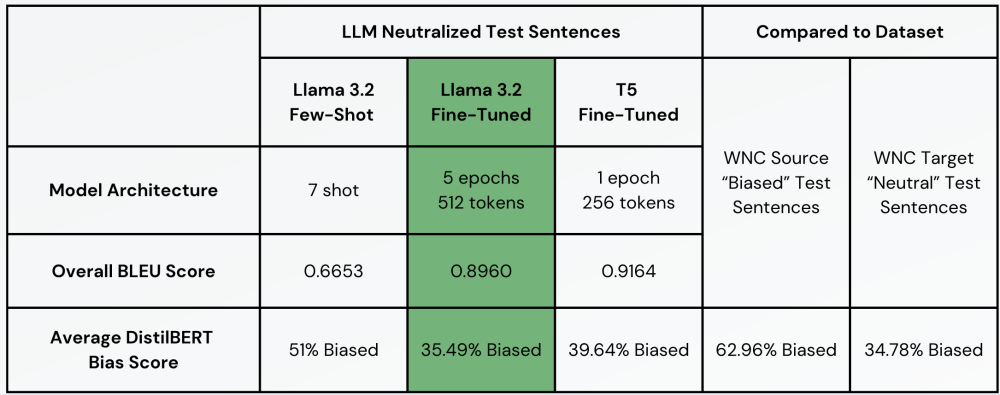

To evaluate the neutralization models, the test dataset was processed through each model, and the outputs were compared to the target neutral sentences in the Wiki Neutrality dataset. Evaluation metrics included overall BLEU scores and average DistilBERT bias scores, calculated by passing the neutralized sentences through the DistilBERT classifier. Additionally, the average DistilBERT scores of the dataset's source and target sentences were measured as a baseline. The fine-tuned LLaMA model achieved the best performance, with an overall BLEU score of 0.896 and an average bias score of 35.49%, closely aligning with the target baseline of 34.78%.
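Both metrics can be sketched in a few lines. The BLEU below is a plain corpus-level BLEU (clipped n-gram precisions up to 4-grams, geometric mean, brevity penalty, single reference, whitespace tokens, no smoothing) on a 0 to 1 scale; library implementations such as sacreBLEU tokenize differently, so numbers will not match exactly. `average_bias_score` assumes the classifier is available as a callable; the function names and toy scorer are hypothetical.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses, references, max_n=4):
    """Corpus BLEU with one reference per hypothesis and whitespace tokenization."""
    clipped = [0] * max_n  # matched n-grams, clipped by reference counts
    totals = [0] * max_n   # n-grams in the hypotheses
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            clipped[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += sum(h_counts.values())
    if min(clipped) == 0:
        return 0.0  # unsmoothed BLEU is zero if any n-gram order has no matches
    log_precision = sum(math.log(c / t) for c, t in zip(clipped, totals)) / max_n
    brevity = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return brevity * math.exp(log_precision)


def average_bias_score(sentences, score_bias):
    """Mean classifier score over a set of (e.g. neutralized) sentences."""
    scores = [score_bias(s) for s in sentences]
    return sum(scores) / len(scores)


print(corpus_bleu(["he led the army"], ["he led the army ."]))
```

Because neutralizing edits usually change only a few words, outputs overlap heavily with their references, which is consistent with BLEU scores near the top of the scale.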

Additional testing was conducted with our user group, who provided feedback on both the model outputs and the application's interface.

Key Learnings & Impact

The model excels at detecting and neutralizing subjectivity, particularly when it is expressed through a personal voice, superlatives, or qualifiers. However, sentences that score just above the bias threshold are handled inconsistently: some are neutralized while others are left unchanged, especially when the bias stems from specific vocabulary rather than superlatives or qualifiers. This inconsistency highlights an area for further refinement. The tool is also sensitive to punctuation: sentences ending with an exclamation point are significantly more likely to be classified as biased.

Building the deployment system with AWS, Streamlit, and Hugging Face provided significant hands-on data engineering experience, despite limited prior expertise in this area. While there are still areas for improvement, the foundation has been established for future refinement.

Several optimization strategies were explored, including lightweight distilled transformer models, Unsloth (an open-source tool for efficient LLM fine-tuning), parallelization, and quantization. These techniques improved training efficiency; however, constraints on time, budget, and GPU availability remained a challenge.
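The punctuation sensitivity and the near-threshold inconsistency can be probed with a simple perturbation test: score the same sentence with different terminal punctuation and check whether the biased/neutral label flips. This is a hedged sketch; `punctuation_probe` is a hypothetical helper and `toy_score` is a made-up stand-in for the DistilBERT classifier, not its actual behavior.

```python
from typing import Callable, Dict, Tuple


def punctuation_probe(sentence: str,
                      score_bias: Callable[[str], float],
                      threshold: float = 0.5) -> Tuple[Dict[str, float], Dict[str, bool], bool]:
    """Score punctuation variants of one sentence and report whether the label flips."""
    base = sentence.rstrip().rstrip(".!?")
    variants = {"none": base, "period": base + ".", "exclamation": base + "!"}
    scores = {name: score_bias(text) for name, text in variants.items()}
    labels = {name: score >= threshold for name, score in scores.items()}
    flips = len(set(labels.values())) > 1  # True if punctuation alone changes the label
    return scores, labels, flips


# Hypothetical stand-in scorer: a near-threshold sentence pushed over by "!".
def toy_score(text: str) -> float:
    return 0.6 if text.endswith("!") else 0.45


print(punctuation_probe("The team won the match.", toy_score))
```

Running a probe like this over many sentences near the threshold would show how often surface features, rather than word choice, decide the label.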

Final Goals & Wrap-Up

Efforts will focus on refining the models and enhancing the efficiency of the deployment system. Comprehensive and rigorous testing will be conducted, including additional edge case evaluations and more formal qualitative user testing sessions. The tool will be expanded to serve broader user segments, including industries such as advertising, journalism, and politics. The ultimate goal is to adapt the tool to meet the needs of anyone seeking to minimize bias in their written text.

Acknowledgements

BIASCheck would like to thank the domain experts (HR professionals) who interviewed with us and tested our product.

BIASCheck would also like to thank our professors, Korin Reid and Puya Vahabi, for their help and guidance.