CuneiTranslate: Unlocking Ancient Mesopotamian Knowledge

Project Overview

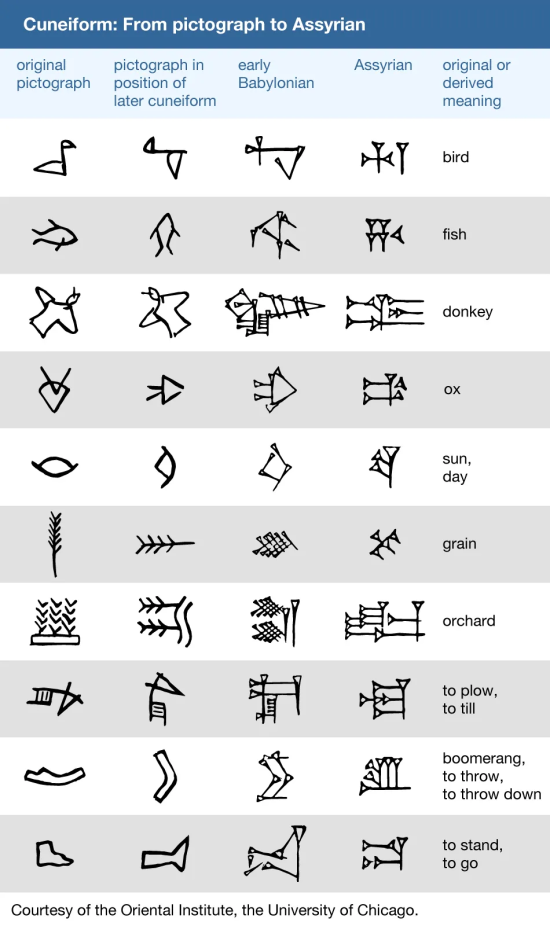

The Cuneiform Translation Project aims to address the significant gap in translating cuneiform tablets from ancient Mesopotamian civilizations. With over half a million untranslated tablets in museums worldwide, we’re working to unlock vital information about the development of early Western civilizations.

By leveraging neural machine translation technology and collaborating with domain experts, we hope to overcome the current bottleneck in Middle Eastern studies and make these important artifacts accessible to the broader academic community.

Problem & Motivation

The project aims to address the significant gap in translating cuneiform tablets from ancient Mesopotamian civilizations. Despite the historical significance of these tablets, which contain vital information about the early development of Western civilizations, there are too few scholars proficient in ancient Mesopotamian languages and cuneiform script to undertake the translations at scale.

The successful development of a reliable, AI-driven cuneiform translation model would allow archaeologists, historians, and researchers to translate texts at scale, dramatically accelerating scholarly research. This would increase accessibility to ancient artifacts, not only for academic use but also for the general public through museum exhibits and online resources.

Technical Approach

Data Sources

Our project drew on two data sources: ORACC and the FactGrid Wikibase. ORACC is the largest open-source repository of expert academic translations for cuneiform texts, providing line-by-line translations between transliterated cuneiform texts (a phonetic, Latin-alphabet representation of the tablet) and English. The FactGrid Wikibase has the largest database of Sumerian and Akkadian lexemes mapped to all their English senses (meanings).

Why combine these two sources? Because cuneiform translation is an extremely low-resource task, it is important to find high-quality sources to augment the training data. By "exploding" each lexeme into one entry per English sense, the tokenizers and models can be trained on a much larger vocabulary, which is intended to improve translation performance.
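The lexeme "explosion" described above can be sketched as follows. This is a minimal illustration, not the project's actual pipeline: the function name `explode_lexemes`, the dictionary layout, and the two toy entries are assumptions made for the example and are not taken from FactGrid.

```python
from typing import Dict, List, Tuple


def explode_lexemes(lexicon: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Turn each lexeme with several senses into one (source, target) pair per sense."""
    pairs: List[Tuple[str, str]] = []
    for lemma, senses in lexicon.items():
        for sense in senses:
            pairs.append((lemma, sense))
    return pairs


# Toy lexicon (illustrative only; a real one would be exported from the Wikibase).
toy_lexicon = {
    "lugal": ["king", "master"],
    "e2": ["house", "temple"],
}

augmented_pairs = explode_lexemes(toy_lexicon)
for source, target in augmented_pairs:
    print(f"{source}\t{target}")
```

Each resulting pair can then be appended to the line-by-line translation data as an extra, very short training example.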

Modeling/Evaluation

We implemented several models and tokenizers for this project. The main tokenizer used was a PyTorch BPE (byte-pair encoding) tokenizer, a subword tokenizer shown to be effective in low-resource language settings. This tokenizer was then paired with several models: T5, mT5 (multilingual T5), Helsinki NLP’s opus-mt-ar-en (an Arabic-to-English model), and Meta’s NLLB (No Language Left Behind) model. Two custom models were also implemented in TensorFlow: an encoder-decoder RNN with attention and a transformer. No model achieved a BLEU score higher than 8%, which points to fundamental limits on the translation quality achievable with this dataset.
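To make the byte-pair encoding idea concrete, here is a small pure-Python sketch of how BPE learns its merge rules. It is not the tokenizer used in the project; the function names and the toy word list are assumptions for illustration, and a production tokenizer would add byte-level handling, special tokens, and a much larger corpus.

```python
from collections import Counter
from typing import Dict, List, Tuple

Word = Tuple[str, ...]


def count_pairs(vocab: Dict[Word, int]) -> Counter:
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs: Counter = Counter()
    for symbols, freq in vocab.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return pairs


def merge_pair(pair: Tuple[str, str], vocab: Dict[Word, int]) -> Dict[Word, int]:
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged: Dict[Word, int] = {}
    for symbols, freq in vocab.items():
        out: List[str] = []
        i = 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(words: List[str], num_merges: int) -> List[Tuple[str, str]]:
    """Learn up to `num_merges` merge rules, most frequent pair first."""
    # Start from single characters plus an end-of-word marker.
    vocab: Dict[Word, int] = Counter(tuple(w) + ("</w>",) for w in words)
    merges: List[Tuple[str, str]] = []
    for _ in range(num_merges):
        pairs = count_pairs(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the first pair seen
        merges.append(best)
        vocab = merge_pair(best, vocab)
    return merges


# Toy transliterated words (illustrative only).
merges = learn_bpe(["lugal", "lugal", "gal"], num_merges=3)
print(merges)  # [('g', 'a'), ('ga', 'l'), ('gal', '</w>')]
```

Because frequent character sequences are merged first, recurring stems end up as single subword units while rare words remain decomposable into smaller pieces, which is why the approach suits small corpora.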

Infrastructure

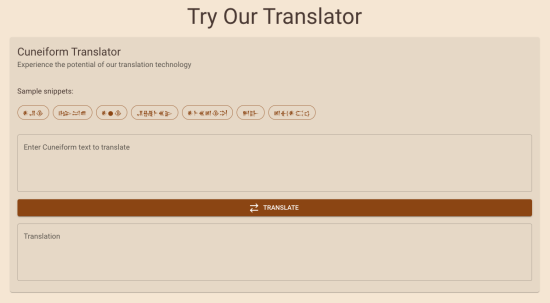

The models were trained on an AWS virtual machine and then published to Hugging Face. We implemented a FastAPI application whose endpoints load the models from Hugging Face and deliver their translations to our front-end UI, which was built with React.

Key Learnings & Impact

Through our efforts to utilize open-source models, we ran into significant challenges with tokenization and training on cuneiform data. In addition to a limited training corpus size, cuneiform scripts and characters are highly specific. Open-source models, tokenizers, and pre-processing tools lack the flexibility required for working with such a specialized script, which is a valuable insight for future work on this problem. Additionally, in such a low-resource setting, maximizing dataset size required combining cuneiform texts from different languages and periods. While larger datasets typically yield performance gains, in this case mixing languages and time periods introduced inconsistencies, and predictions did not improve. This suggests that further attempts to build translation models should consider experimenting with corpora segmented by language and time period. Finally, the project contributes to this research area by setting performance baselines for translation models with transformer and self-attention architectures.
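The suggestion to segment corpora by language and time period could be set up along these lines. This is only a sketch under assumed metadata: the field names `language` and `period`, the `segment_corpus` helper, and the toy records are hypothetical and do not reflect the actual ORACC export format.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def segment_corpus(records: List[dict]) -> Dict[Tuple[str, str], List[dict]]:
    """Group translation pairs by (language, period) so each group can be trained separately."""
    segments: Dict[Tuple[str, str], List[dict]] = defaultdict(list)
    for record in records:
        key = (record["language"], record["period"])
        segments[key].append(record)
    return dict(segments)


# Toy records (illustrative only).
toy_records = [
    {"source": "lugal", "target": "king", "language": "Sumerian", "period": "Ur III"},
    {"source": "e2", "target": "house", "language": "Sumerian", "period": "Ur III"},
    {"source": "šarrum", "target": "king", "language": "Akkadian", "period": "Old Babylonian"},
]

segments = segment_corpus(toy_records)
for (language, period), rows in segments.items():
    print(f"{language} / {period}: {len(rows)} pairs")
```

Training and scoring one model per segment, alongside the mixed-corpus baseline, would show whether the inconsistencies described above are what holds the scores down.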