EmergEye: AI-Based Car Accident Detection and Notification System

Problem & Motivation

EmergEye addresses a critical problem in emergency response: delays in detecting and responding to traffic accidents. This issue is especially prevalent in rural areas or less-monitored regions where accidents may go unnoticed for extended periods. Delays in emergency response can lead to increased fatalities and more severe injuries. EmergEye aims to bridge this gap by using real-time video analytics to detect accidents through CCTV cameras and provide timely notifications to emergency responders, thus reducing response times and potentially saving lives.

Overview: Data Source & Data Science Approach

The project utilizes real-time video feeds from traffic cameras and custom datasets generated for accident detection and severity classification. The approach incorporates:

- Deep Learning Models: ResNet50 feature extraction with an MLP classifier for accident detection, trained on curated datasets; YOLO11 for severity classification.

- Computer Vision: OpenCV for real-time video processing.

- Multimodal Machine Learning: Integration with Qwen2-VL-7B-Instruct for generating detailed accident reports.

- Cloud Infrastructure: AWS/GCP for scalable video analysis and automated notifications.

- Innovative Pipeline: The end-to-end pipeline covers preprocessing (video stream handling), accident detection, severity classification, and notifications for severe accidents.

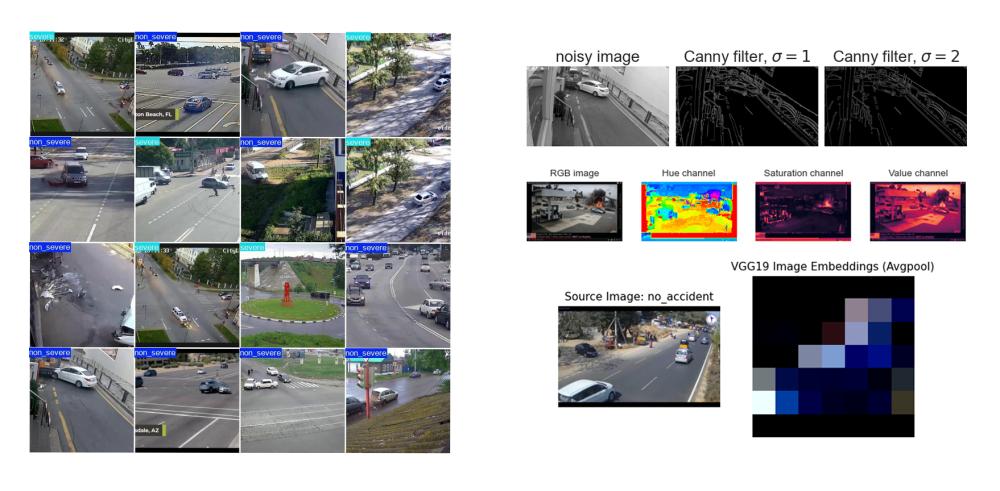

Training & Results: Enhanced Visual Processing

Our image processing pipeline is designed for accurate and efficient feature extraction, a critical step in identifying severe accidents. Deep learning models such as ResNet and VGG analyze complex visual patterns to detect key indicators of an accident. Edge detection is also used to capture structural changes and sharp contrasts, helping to distinguish potential accident scenes from routine traffic. Together, these methods let the system interpret CCTV feeds in real time and detect accidents rapidly and reliably, even in challenging visual conditions.
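The writeup does not say which edge detector EmergEye uses. Purely as an illustration of what "capturing sharp contrasts" means, the sketch below computes a Sobel gradient magnitude on a tiny grayscale frame in plain Python: large values mark abrupt intensity changes such as object boundaries. The function name `sobel_magnitude` and the list-of-rows frame format are hypothetical; a real pipeline would more likely call an optimized library routine such as OpenCV's `cv2.Sobel` or `cv2.Canny`.

```python
import math

# Standard 3x3 Sobel kernels for horizontal (x) and vertical (y) gradients.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [ 0,  0,  0],
           [ 1,  2,  1]]

def sobel_magnitude(frame):
    """Return the gradient magnitude for every interior pixel of a grayscale frame.

    `frame` is a list of rows of intensity values (hypothetical input format).
    Border pixels are skipped, so the output is (h - 2) x (w - 2).
    """
    h, w = len(frame), len(frame[0])
    out = []
    for r in range(1, h - 1):
        row = []
        for c in range(1, w - 1):
            gx = gy = 0
            # Correlate the 3x3 neighbourhood with both kernels.
            for i in range(3):
                for j in range(3):
                    pixel = frame[r + i - 1][c + j - 1]
                    gx += SOBEL_X[i][j] * pixel
                    gy += SOBEL_Y[i][j] * pixel
            row.append(math.hypot(gx, gy))
        out.append(row)
    return out

# Toy 4x4 frame: dark left half, bright right half (a vertical edge).
frame = [[0, 0, 255, 255]] * 4
for row in sobel_magnitude(frame):
    print([round(v) for v in row])  # prints [1020, 1020] on each of two lines
```

A uniform frame yields zero everywhere, so only regions with sharp contrast stand out.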

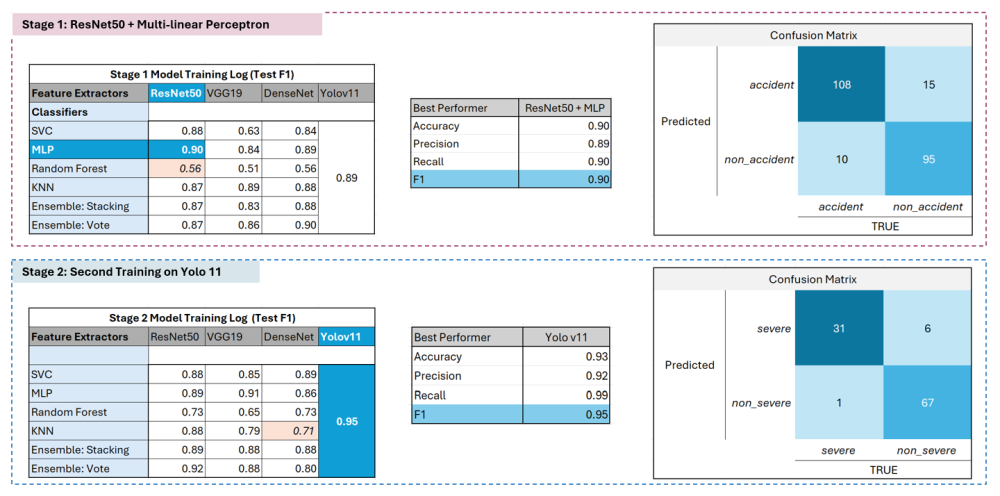

In Stage 1, we used ResNet50 as our feature extractor and a multi-layer perceptron (MLP) classifier to distinguish accidents from non-accidents. The model achieved a high F1 score, as reflected in the confusion matrix. Stage 2 focused on assessing accident severity: using YOLO11 and ensemble learning techniques, the model accurately classified incidents as severe or non-severe. These results highlight the model’s strong accuracy, precision, and recall, demonstrating its ability to detect accidents with few false positives.
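Because Stage 1 is summarized by an F1 score and a confusion matrix, it may help to recall how the two relate. The sketch below derives accuracy, precision, recall, and F1 from the four confusion-matrix counts. The counts in the example are made up for illustration and are not EmergEye's reported results.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts.

    tp: accidents correctly flagged, fp: non-accidents flagged as accidents,
    fn: accidents missed, tn: non-accidents correctly ignored.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall,
    # equivalently 2*tp / (2*tp + fp + fn).
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical counts, for illustration only.
print(binary_metrics(tp=45, fp=5, fn=15, tn=35))
```

Few false positives raise precision, and few missed accidents raise recall. F1 is high only when both are high.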

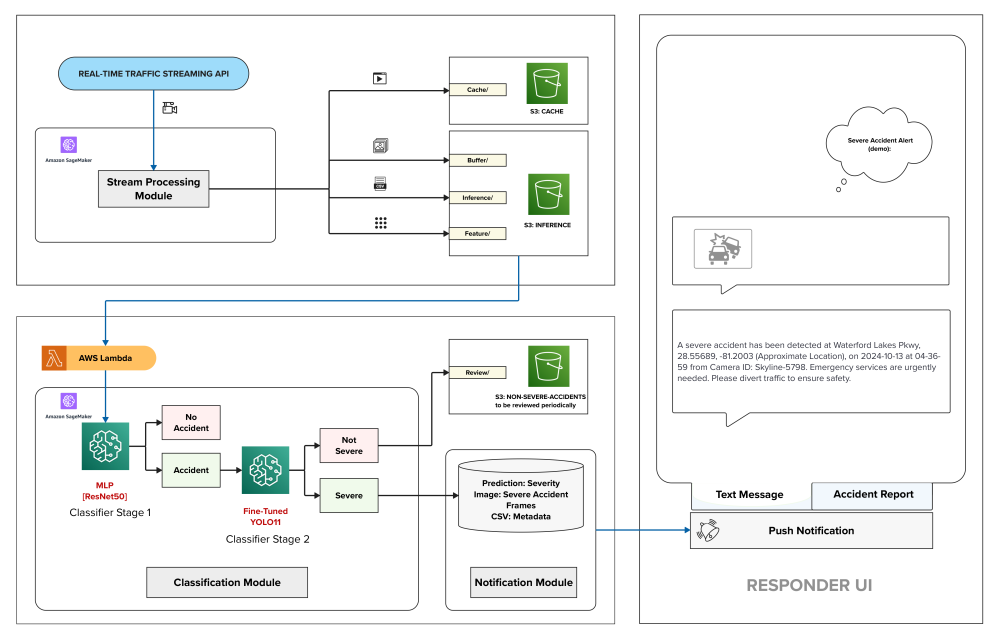

EmergEye: End-to-end Pipeline

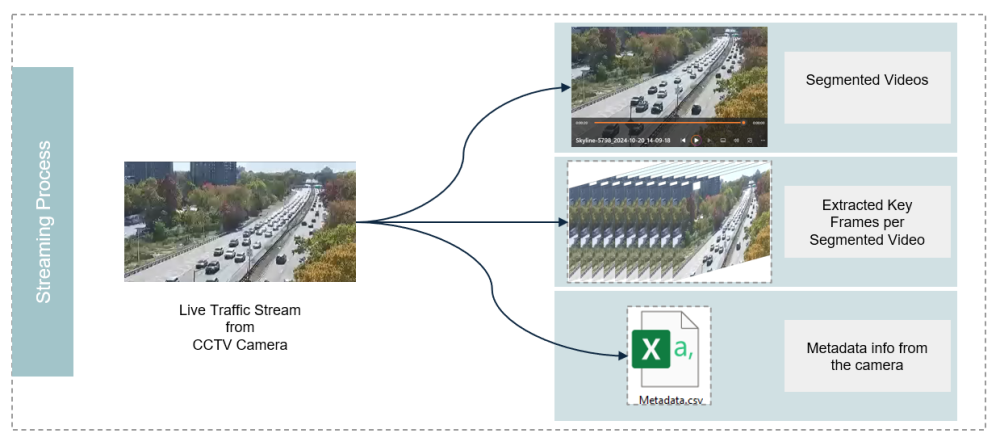

Our data pipeline processes live traffic video streams from CCTV cameras. It segments the footage and extracts key frames for analysis. Each video segment is paired with metadata from the camera, which helps us contextualize the data and optimize detection. This structured data pipeline ensures that we can process, analyze, and respond to incidents quickly, enabling real-time notifications.
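As a rough illustration of the segmentation and frame-selection step, the sketch below computes which frame indices to keep when a 20-second clip is sampled at 4 frames per second (the figures given under Key Features). It then writes one metadata row per clip as CSV. The source frame rate, the metadata field names (`clip_id`, `camera_id`, `clip_start`, and so on), and the helper names are assumptions for illustration. Actual video decoding, done with OpenCV in the project, is not shown.

```python
import csv
import io

CLIP_SECONDS = 20   # segment length from the writeup
SAMPLE_FPS = 4      # sampling rate from the writeup -> 80 frames per clip

def sample_frame_indices(source_fps, clip_seconds=CLIP_SECONDS,
                         sample_fps=SAMPLE_FPS):
    """Return evenly spaced frame indices to keep from one clip.

    Assumes a constant source frame rate (hypothetical; real CCTV streams
    may drop frames). If source_fps < sample_fps, some indices repeat.
    """
    total_frames = int(round(source_fps * clip_seconds))
    n_samples = clip_seconds * sample_fps
    step = total_frames / n_samples
    return [int(k * step) for k in range(n_samples)]

def write_metadata(rows, fieldnames):
    """Serialize per-clip metadata to CSV text (field names are hypothetical)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()

indices = sample_frame_indices(source_fps=30)
print(len(indices), indices[:5])  # prints: 80 [0, 7, 15, 22, 30]

# Hypothetical metadata for one clip.
meta = [{"clip_id": "clip_0001", "camera_id": "cam_042",
         "clip_start": "2024-01-01T12:00:00", "source_fps": 30,
         "n_frames": len(indices)}]
print(write_metadata(meta, list(meta[0].keys())))
```

Computing indices from the clip length and frame rate keeps the batch size fixed at 80 frames regardless of each camera's native frame rate.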

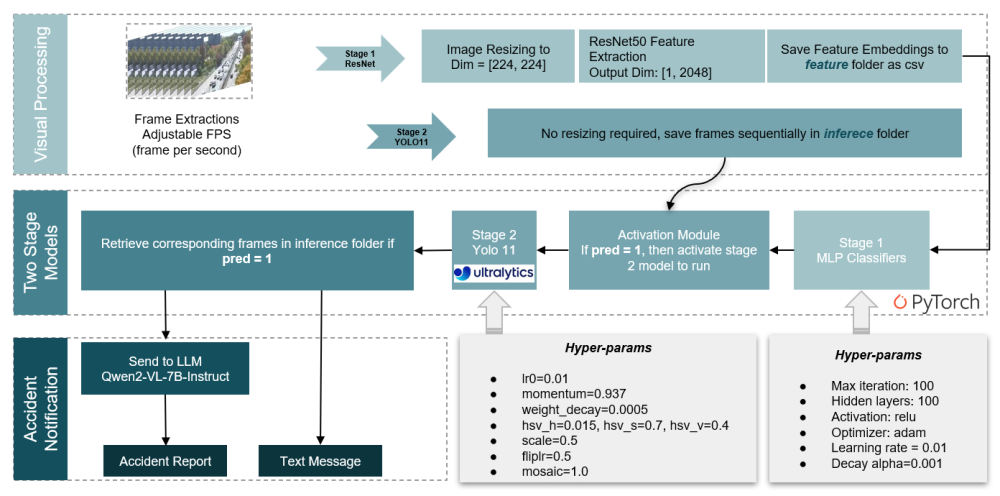

The Detection and Notification Pipeline is the backbone of EmergEye’s system. First, we preprocess the extracted frames, resizing and normalizing them for efficient analysis. Our two-stage model setup then identifies potential accidents using ResNet50 features and an MLP classifier. If an accident is detected, the pipeline activates YOLO11 to classify its severity. Finally, if a severe accident is confirmed, the system triggers automated notifications: it generates an accident report with Qwen2-VL-7B-Instruct and sends a text alert to responders. This streamlined pipeline ensures a rapid response when seconds matter most.
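The control flow of the two-stage design can be sketched as follows. Stage 1 scores a clip from its frame embeddings. Stage 2 runs only on clips flagged as accidents, and a notification fires only for severe ones. Everything here is hypothetical scaffolding. The stage functions are stand-ins passed in as plain callables, not the real ResNet50/MLP, YOLO11, or Qwen2-VL-7B-Instruct models. Mean pooling of per-frame embeddings is an assumed way of forming the clip-level input, and the 0.5 threshold is illustrative.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence

@dataclass
class ClipResult:
    clip_id: str
    accident_prob: float
    severity: Optional[str] = None   # set only if Stage 2 runs
    notified: bool = False

def mean_pool(frame_embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Average per-frame embeddings into one clip-level vector (assumed aggregation)."""
    n = len(frame_embeddings)
    return [sum(col) / n for col in zip(*frame_embeddings)]

def process_clip(clip_id: str,
                 frame_embeddings: Sequence[Sequence[float]],
                 stage1: Callable[[List[float]], float],
                 stage2: Callable[[str], str],
                 notify: Callable[[ClipResult], None],
                 threshold: float = 0.5) -> ClipResult:
    """Run the hypothetical two-stage gate on one clip."""
    prob = stage1(mean_pool(frame_embeddings))
    result = ClipResult(clip_id, prob)
    if prob < threshold:
        return result                     # Stage 1 says no accident: stop early
    result.severity = stage2(clip_id)     # Stage 2 runs only for detected accidents
    if result.severity == "severe":
        notify(result)                    # report + alert only for severe cases
        result.notified = True
    return result

# Toy stand-ins so the sketch runs end to end.
alerts = []
res = process_clip(
    "clip_0001",
    frame_embeddings=[[0.2, 0.8], [0.4, 0.6]],
    stage1=lambda v: v[1],                # pretend probability = 2nd feature
    stage2=lambda cid: "severe",
    notify=alerts.append,
)
print(res, len(alerts))
```

Gating the heavier Stage 2 model and the LLM report behind Stage 1 means most routine clips exit after one cheap classifier call.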

Key Features

- Capturing the Action: Segmenting the live stream into 20-second clips for continuous monitoring at the default frame rate.

- Focused Frame Extraction: Sampling key frames at 4 frames per second, which yields 80 frames per 20-second clip for analysis.

- Data-Driven Insights: Storing essential metadata in a structured CSV format for easy access and analysis.

- Advanced Feature Embedding: Utilizing ResNet50 to transform the 80 frames of each clip into feature embeddings, enabling precise detection and analysis.

- Stage 1 - Initial Classification: Leveraging a Multi-Layer Perceptron (MLP) model powered by ResNet50 features to separate accident clips from non-accident clips.

- Stage 2 - Severity Analysis: For detected accidents, a fine-tuned YOLO11 model performs a second-level classification into severe and non-severe.

- Automatic Notifications: Severe accident detections trigger instant alerts in the Responder Interface.

- Real-Time, Multi-Channel Alerts: Flexible notification options, including push notifications and SMS alerts (future capability upon company registration), to ensure responders receive critical information through multiple channels.

- Detailed Accident Reports: Accident reports generated with the Qwen2-VL-7B-Instruct multimodal LLM, delivering comprehensive insights to responders.

- Actionable Information Display: Visual and textual alerts deliver precise accident location, timestamp, and severity level, empowering responders with clear, actionable information for rapid, informed decision-making at the scene.
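The alert contents listed above (location, timestamp, severity level, and the generated report) map naturally onto a small structured message. The sketch below builds one as JSON. The field names, the coordinate format, and the `build_alert` helper are hypothetical. The actual Responder Interface and the push/SMS integrations are not shown.

```python
import json
from datetime import datetime, timezone

def build_alert(camera_id, latitude, longitude, detected_at, severity, report):
    """Assemble a responder alert as a JSON string (hypothetical schema)."""
    if severity not in ("severe", "non-severe"):
        raise ValueError(f"unexpected severity label: {severity!r}")
    payload = {
        "camera_id": camera_id,
        "location": {"lat": latitude, "lon": longitude},
        # ISO 8601 timestamp in UTC so all recipients read the same time.
        "timestamp": detected_at.astimezone(timezone.utc).isoformat(),
        "severity": severity,
        "report": report,   # e.g. text produced by the multimodal LLM
    }
    return json.dumps(payload)

alert = build_alert(
    camera_id="cam_042",
    latitude=42.6526, longitude=-73.7562,   # made-up coordinates
    detected_at=datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc),
    severity="severe",
    report="Two-vehicle collision in the right lane; one vehicle overturned.",
)
print(alert)
```

A single machine-readable payload like this could feed each delivery channel, with each channel formatting the same fields for its own display.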

Performance

Our model is designed to operate with low latency, ensuring rapid detection and response even in high-demand environments. Measured from live-stream segmentation, detecting a severe car accident in a 20-second segmented clip takes about 22 seconds, and generating the LLM accident report takes an additional 20 seconds; these times depend on the available computing resources and can be reduced by scaling them up. This allows dispatchers to be alerted without unnecessary delay. The model's accuracy is bolstered by high-quality labeling practices, which enable it to distinguish accident scenarios effectively and consistently, minimizing false positives. This focus on low latency and precise labeling ensures that our technology performs reliably under real-world conditions, making quick, accurate assessments when every second counts.

Key Learnings & Impact

EmergEye highlighted the potential of AI in addressing real-world challenges. Key learnings include:

- Technical Feasibility: Real-time video processing requires optimized deep learning models and infrastructure to maintain speed and accuracy.

- Broader Applicability: The same model architecture could be adapted to monitor pet behavior or child/elderly safety, suggesting flexibility for diverse use cases.

- Critical System Design: A user-friendly interface is essential for real-world adoption by emergency responders.

The impact of EmergEye lies in its ability to enhance public safety, particularly in underserved regions, by significantly reducing the time between accident occurrence and emergency response.

Acknowledgements

Special thanks to the New York State Department of Transportation for granting us access to their CCTV cameras—because EmergEye wouldn’t see much without them! And a big shoutout to a kind lady, who prefers to remain anonymous, for lending her voice to the video—truly the sound of EmergEye! And heartfelt thanks to Korin Reid and Ramesh Sarukkai for their invaluable suggestions and support in shaping our vision.