QuillQuery: Revolutionizing Email Search for Smarter Summaries

Problem & Motivation

The average business user managed around 126 emails daily in 2019, a number that has surged with the rise of remote work. Today, professionals spend nearly 28% of their workday managing email, typically adopting one of two strategies: clearing out their inbox or leaving everything in it. This creates a strong need for more productive email management. Despite the critical role email plays in professional communication, platforms like Gmail and Outlook still rely heavily on rule-based and keyword search, which makes finding relevant information tedious and inefficient.

In a typical business setting, emails flow internally and externally, across numerous threads and from multiple contacts, making it easy for important information to get lost. Our target users need fast and reliable access to critical information in their inboxes to make timely business decisions. QuillQuery aims to bridge this gap, providing a smarter, more efficient way to manage and retrieve essential email content.

Our Mission

We're reimagining email search, giving users the power of the pen in how they sift through their digital correspondence. By harnessing cutting-edge artificial intelligence approaches, we let users pick their favorite language wizard to conjure up lightning-fast results across all their inboxes. From Gmail gardens to Outlook oases, we empower users to craft queries that unlock the secrets of their inbox with consistent ease and elegance.

How to use

To use QuillQuery, users complete four key steps. The basics of each step are listed below, and more detailed instructions are available on the QuillQuery website. The QuillQuery tool is currently accessible via a web browser, but future rollouts will offer a plug-in or browser extension, similar to Rakuten's, so users can query their emails from any email application.

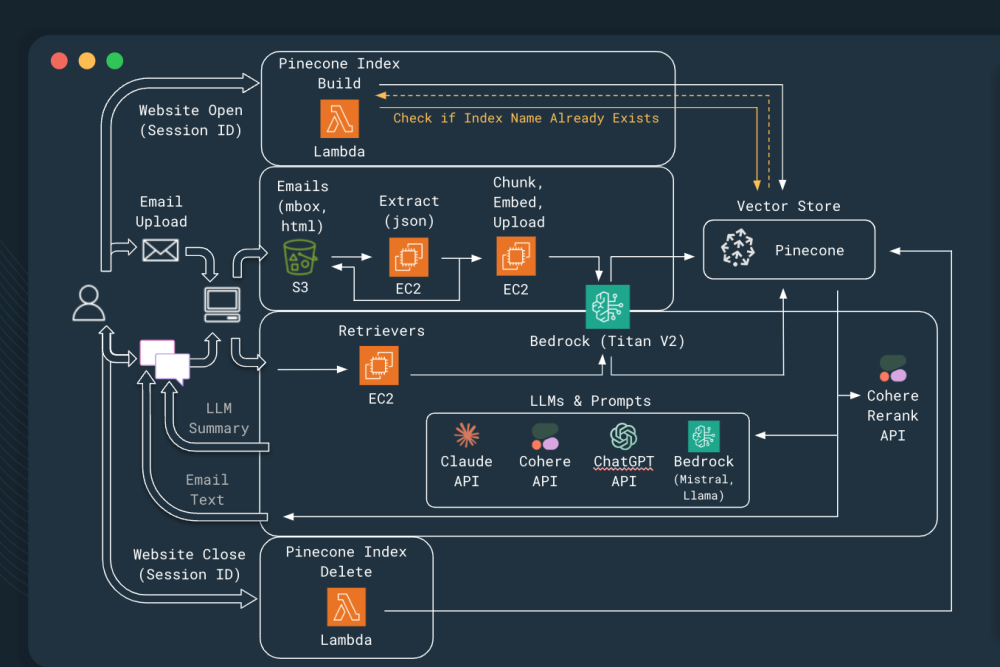

- Login - This first step creates private, user-specific infrastructure components, such as a vector store unique to the user's username.

- Loading Emails - This step creates additional private, user-specific infrastructure components and loads data into them (i.e., it cleans, chunks, and embeds the email data into the user's vector store). Currently, users can upload exported Gmail data; future rollouts will offer API integration with various email applications.

- Querying - This step lets users enter a conversational question into the query box, select a model based on their performance needs or preferences, and get a response. The response includes a summarized answer along with a list of sources and links to the relevant emails. Future rollouts will enhance the sources list with a summary of each relevant email.

- Logout - This step deletes all user-specific infrastructure to protect user privacy. Future rollouts will offer a paid option that lets users keep their data for quicker setup and use.
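The Loading Emails step starts from an exported Gmail archive. As a minimal sketch, assuming the export is a standard mbox file (the format Gmail's Takeout export produces), Python's standard-library `mailbox` module can pull out the headers and plain-text body that later cleaning and chunking steps need. The function names `load_mbox` and `extract_text` and the record fields are our own illustration, not QuillQuery's actual code.

```python
import mailbox
from email.message import Message


def extract_text(msg: Message) -> str:
    """Concatenate the text/plain parts of a message, skipping attachments."""
    parts = []
    # walk() yields the message itself first, then any nested sub-parts
    for part in msg.walk():
        if part.get_content_type() != "text/plain":
            continue
        if part.get_content_disposition() == "attachment":
            continue
        payload = part.get_payload(decode=True)  # bytes, transfer encoding undone
        if not payload:
            continue
        charset = part.get_content_charset() or "utf-8"
        try:
            parts.append(payload.decode(charset, errors="replace"))
        except LookupError:  # unknown charset name in the header
            parts.append(payload.decode("utf-8", errors="replace"))
    return "\n".join(parts).strip()


def load_mbox(path: str) -> list[dict]:
    """Read an mbox file into simple dicts of header fields plus plain-text body."""
    box = mailbox.mbox(path, create=False)
    try:
        records = []
        for msg in box:
            records.append({
                "subject": str(msg.get("Subject", "")),
                "from": str(msg.get("From", "")),
                "to": str(msg.get("To", "")),
                "cc": str(msg.get("Cc", "")),
                "date": str(msg.get("Date", "")),
                "body": extract_text(msg),
            })
        return records
    finally:
        box.close()
```

HTML-only messages come back with an empty body in this sketch, and RFC 2047 encoded subjects are left encoded; a fuller cleaner would convert `text/html` parts and decode headers (for example with `email.header.decode_header`).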

Architecture

Data Sources & Data Science Approach

QuillQuery uses a traditional Retrieval-Augmented Generation (RAG) pipeline to enable seamless, flexible search with the user's preferred Large Language Model (LLM) across email platforms like Gmail and Outlook.

We kicked off our exploration of this tool with user interviews to ensure that our features met user needs and expectations. Across 35 interviews, we validated our assumption about general email use: most users have different email applications for work and personal use. The interviews also highlighted users' growing familiarity and comfort with AI-based tools, but also their continued preference for the results that current search tools return. From these interviews, we developed a list of key features and outputs that formed the foundation of our further data exploration and tool development.

We selected the parameters for our RAG pipeline using the Enron dataset, a rich collection of over 500,000 real-world emails from 150 Enron employees, sent in and around 2001-2002. We used this dataset to improve the model's ability to generate accurate, contextually relevant results across diverse email scenarios. Given the dataset's age, its emails contain only text, unlike today's emails, which often include images, charts, and other rich content. As such, this MVP focuses on search over text-based emails, with future releases adding support for these richer email bodies.

Using the Enron dataset, we explored common text-based emails, analyzing the distribution of lengths (in words) of both email bodies and subjects. We also examined how many addresses typically appear in the To, From, and CC fields. This analysis gave us a starting point for key RAG parameters such as chunk size. It also showed that the subject line needed to be incorporated into the embedded text to give shorter email bodies the context they lack.
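A minimal sketch of this kind of length analysis, assuming emails are already parsed into body and subject strings: count whitespace-separated words per text and summarize the distribution. Reading a starting chunk size off an upper percentile such as `p90` is our illustrative assumption, not necessarily how the team chose it.

```python
import math
import statistics


def word_count_summary(texts: list[str]) -> dict:
    """Summarize the distribution of word counts across a list of texts."""
    counts = sorted(len(t.split()) for t in texts)
    if not counts:
        raise ValueError("need at least one text")

    def percentile(p: int) -> int:
        # Nearest-rank percentile: smallest value with at least p% of data at or below it
        rank = max(1, math.ceil(p * len(counts) / 100))
        return counts[rank - 1]

    return {
        "n": len(counts),
        "median": statistics.median(counts),
        "p90": percentile(90),
        "max": counts[-1],
    }


bodies = [
    "Please review the Q1 budget.",
    "Lunch?",
    "Attached are the revised gas contracts for Monday.",
]
print(word_count_summary(bodies))  # {'n': 3, 'median': 5, 'p90': 8, 'max': 8}
```

Running the same function separately over subjects and bodies makes the contrast visible: subjects are short, so prefixing them to body chunks adds context without consuming much of the chunk budget.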

Before embedding, we applied character-based chunking, splitting emails by paragraphs, line breaks, or words to retain context. This chunking is part of a standard processing phase applied to both our tuning dataset (Enron) and our final dataset: Gmail emails exported as mbox files.
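Splitting on paragraphs first, then line breaks, then words is the recursive character-splitting idea popularized by LangChain's `RecursiveCharacterTextSplitter`. The dependency-free sketch below illustrates the idea; the function name `split_text` and the 500-character default are assumptions for illustration, and it omits the chunk overlap that library splitters usually add.

```python
def split_text(text: str, chunk_size: int = 500,
               separators: tuple[str, ...] = ("\n\n", "\n", " ")) -> list[str]:
    """Split text into chunks of at most chunk_size characters, preferring
    paragraph breaks, then line breaks, then spaces between words."""
    text = text.strip()
    if len(text) <= chunk_size:
        return [text] if text else []
    for level, sep in enumerate(separators):
        if sep not in text:
            continue  # try the next, finer separator
        chunks, current = [], ""
        for piece in text.split(sep):
            candidate = piece if not current else current + sep + piece
            if len(candidate) <= chunk_size:
                current = candidate  # keep growing the current chunk
                continue
            if current:
                chunks.append(current)
            if len(piece) > chunk_size:
                # This piece alone is too long: split it with the finer separators
                chunks.extend(split_text(piece, chunk_size, separators[level + 1:]))
                current = ""
            else:
                current = piece
        if current:
            chunks.append(current)
        return [c.strip() for c in chunks if c.strip()]
    # No separator applies: fall back to a hard cut every chunk_size characters
    return [text[start:start + chunk_size] for start in range(0, len(text), chunk_size)]
```

For example, `split_text("Para one is here.\n\nPara two is a bit longer here.", 20)` keeps the first paragraph whole and splits only the second, longer paragraph on spaces.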

Following a traditional RAG pipeline, we chunked each email body and merged the subject line with the body text to improve summaries and the model's accuracy in retrieving relevant content and generating relevant responses. These consolidated chunks, along with their metadata, were then embedded and stored in a user-unique vector store.
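To make the subject-merging step concrete, here is a minimal sketch that prefixes each body chunk with its email's subject, keeps header fields as metadata, and stores the result under the user's username. The `Subject: ...` prefix format is our assumption, the `embed` callable stands in for whatever embedding model is used, and the in-memory `VECTOR_STORES` dict is a hypothetical placeholder for a real per-user vector store.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class ChunkRecord:
    text: str            # subject + chunk body: the string that gets embedded
    metadata: dict       # header fields kept for filtering and source links
    vector: list[float]  # embedding of `text`


def build_records(email: dict, chunks: Sequence[str],
                  embed: Callable[[str], list[float]]) -> list[ChunkRecord]:
    meta = {key: email.get(key, "") for key in ("subject", "from", "to", "cc", "date")}
    records = []
    for index, chunk in enumerate(chunks):
        # Prefixing the subject gives short bodies ("Sounds good, see you then") context
        text = f"Subject: {meta['subject']}\n\n{chunk}"
        records.append(ChunkRecord(text, {**meta, "chunk_index": index}, embed(text)))
    return records


# Hypothetical per-user store: one list of records per username
VECTOR_STORES: dict[str, list[ChunkRecord]] = {}


def add_to_user_store(username: str, records: list[ChunkRecord]) -> None:
    VECTOR_STORES.setdefault(username, []).extend(records)


def drop_user_store(username: str) -> None:
    # Mirrors the logout step: delete everything stored for this user
    VECTOR_STORES.pop(username, None)
```

Keeping the metadata next to each vector, rather than only inside the embedded text, is what later allows source links and filtering by sender or date.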

Model Evaluation

We assessed our model by evaluating multiple pipeline configurations, totaling 192 runs. Each pipeline combined a standard embedding layer, a cosine similarity retriever, a shared prompt, and cross-encoder reranking. Generated responses were compared to gold-standard answers for 30 user-specific questions, tested on emails from one selected Enron employee. After narrowing the field to the four best-performing parameter combinations, we ran additional evaluations using questions and emails from three other Enron employees to finalize the selection. The final configuration was then tested on 30 questions for another Enron employee, with each of the five LLM options available to the user.
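The retrieve-then-rerank stage can be sketched in two steps. First, rank all chunk vectors by cosine similarity to the query vector and keep the `top_k`. Second, rescore only those candidates with a model that reads the query and chunk together, which is what a cross-encoder does. Here `score_pair` is a hypothetical stand-in for such a model; in the sentence-transformers library, for instance, a `CrossEncoder` model's `predict` method scores a list of (query, passage) pairs. The `top_k`/`top_n` defaults are illustrative.

```python
import math
from typing import Callable, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_and_rerank(
    query: str,
    query_vec: Sequence[float],
    docs: Sequence[str],
    doc_vecs: Sequence[Sequence[float]],
    score_pair: Callable[[str, str], float],
    top_k: int = 10,
    top_n: int = 3,
) -> list[str]:
    # Stage 1: cheap cosine ranking over every stored chunk vector
    candidates = sorted(
        range(len(docs)),
        key=lambda i: cosine(query_vec, doc_vecs[i]),
        reverse=True,
    )[:top_k]
    # Stage 2: rescore each (query, chunk) pair jointly (cross-encoder stand-in)
    reranked = sorted(candidates, key=lambda i: score_pair(query, docs[i]), reverse=True)
    return [docs[i] for i in reranked[:top_n]]
```

The split is about cost: cosine similarity over precomputed vectors is cheap enough to run against every chunk, while a cross-encoder must process each (query, chunk) pair at query time, so it is applied only to the short candidate list.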

To measure performance in both the evaluation and test phases, we combined state-of-the-art automated metrics with human review. The automated score was a weighted average of BLEU (5%), RAGAS faithfulness (15%), RAGAS answer relevancy (40%), and RAGAS answer correctness (40%). However, we found that some RAGAS scores did not reflect whether an answer was actually faithful, relevant, and correct. We therefore supplemented the automated metrics with human review of generated answers to ensure quality and accuracy in the final tuning of our model.
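The weighted average above reduces to a dot product of weights and per-metric scores. A minimal sketch, assuming each metric has already been computed on a 0-1 scale; the dictionary keys are our own labels, and the worked example uses made-up scores purely to show the arithmetic.

```python
import math

# Weights from the evaluation described above; they sum to 1.0
WEIGHTS = {
    "bleu": 0.05,
    "faithfulness": 0.15,
    "answer_relevancy": 0.40,
    "answer_correctness": 0.40,
}
assert math.isclose(sum(WEIGHTS.values()), 1.0)


def weighted_score(scores: dict[str, float]) -> float:
    """Combine per-metric scores (each assumed to be on a 0-1 scale) into one number."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise KeyError(f"missing metric scores: {sorted(missing)}")
    return sum(weight * scores[name] for name, weight in WEIGHTS.items())


# Worked example: 0.05*0.2 + 0.15*0.9 + 0.40*0.8 + 0.40*0.7 = 0.01 + 0.135 + 0.32 + 0.28
example = {"bleu": 0.2, "faithfulness": 0.9, "answer_relevancy": 0.8, "answer_correctness": 0.7}
print(round(weighted_score(example), 3))  # 0.745
```

Because relevancy and correctness carry 80% of the weight together, a fluent but wrong answer is penalized far more than one with low n-gram overlap, which fits a summarization task where many correct phrasings exist.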

Key Learnings & Impact

This project demonstrated that tuning parameters such as top_k, context length, and sensitivity is crucial for optimizing response accuracy and relevance. Metadata-based querying, such as filtering by sender or date, enhanced the model's performance on complex queries, while combining automated metrics with human review ensured responses were both accurate and user-friendly.

The pipeline's flexibility also makes it adaptable to various platforms, with potential for future integration into web and mobile email applications. User-focused features like attachment search and sensitivity settings boosted usability, paving the way for broader adoption. Overall, this work can greatly improve productivity for users managing large volumes of information and advances NLP research in retrieval and summarization for context-rich queries.

Future Work

Given additional time and resources, these are the areas of development and testing we would like to invest in after this capstone project concludes.

- Towards Seamless Integration: Our MVP is currently available as a web-based application. To start the enhanced email query experience, users log in and grant email access, with options to load emails via “Drag & Drop” or “Log Into an Account.” Looking ahead, we aim to provide an integrated in-app experience through a plug-in compatible with any web-based or smartphone email app.

- Email Quantity Sensitivity Adjustment: Introduce a “sensitivity” setting that lets users increase the top_k parameter to retrieve more emails and add more context as needed. Higher sensitivity levels will provide deeper retrieval and more context for the queries that need it.

- Enhanced Retriever Performance with Metadata: Improve query handling by enabling the retriever to incorporate metadata fields (e.g., sender, date, subject) for more targeted results. This will allow users to run specific queries like, “Summarize the emails Jeff sent me last Monday on Project Lemonade,” improving the accuracy and relevance of responses.
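The two retrieval enhancements above can be sketched together: apply optional metadata filters (sender, date range, subject keyword) first, then keep the `top_k` most similar chunks, where `top_k` comes from the user's sensitivity level. This sketch assumes similarity scores were computed upstream and that constraints such as "Jeff" or "last Monday" have already been extracted from the query (for example, by the LLM). The preset values and names like `SENSITIVITY_TO_TOP_K` are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical sensitivity presets: higher levels retrieve more chunks
SENSITIVITY_TO_TOP_K = {"low": 3, "medium": 6, "high": 12}


@dataclass
class Chunk:
    text: str
    sender: str
    sent: date
    subject: str
    score: float  # similarity to the query, computed upstream


def retrieve(chunks: list[Chunk], sensitivity: str = "medium",
             sender: Optional[str] = None, start: Optional[date] = None,
             end: Optional[date] = None,
             subject_contains: Optional[str] = None) -> list[Chunk]:
    """Apply optional metadata filters, then return the top_k highest-scoring
    chunks, where top_k is set by the sensitivity level."""
    def keep(c: Chunk) -> bool:
        if sender and sender.lower() not in c.sender.lower():
            return False
        if start and c.sent < start:
            return False
        if end and c.sent > end:
            return False
        if subject_contains and subject_contains.lower() not in c.subject.lower():
            return False
        return True

    top_k = SENSITIVITY_TO_TOP_K[sensitivity]
    candidates = [c for c in chunks if keep(c)]
    return sorted(candidates, key=lambda c: c.score, reverse=True)[:top_k]
```

Filtering before ranking means a highly similar email from the wrong sender or week can never crowd out the ones the user actually asked about, which is the failure mode pure similarity search has on queries like the Project Lemonade example.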

Acknowledgements

- Ahmeda Cheick, for his expert privacy impact assessment

- Michael Ryan (MichaelR207), for the gold-standard questions and answers for the Enron dataset on Hugging Face

- Friends, family, and colleagues who participated in user interviews and as beta testers

- Various authors of public articles that supported our development, including Shrinivasan Sankur, Joshua Kirby, Lore Van Oudenhove, and Eivind Kjosbakken