Ribbit: A web app for automated frog species identification and classification

Our mission is to bridge the biodiversity data gap by harnessing machine learning for real-time identification of frog and toad calls.

Problem and Motivation

Conserving ecosystems is key to curbing climate change and maintaining ecosystem services, which are closely linked to over 50% of the world’s GDP. However, selecting which areas to conserve is challenging given the existing biodiversity data gap: available observations are biased towards the Global North. Increasing the amount of biodiversity data from the Global South is critical for the conservation of endangered species, which are found at high density in biodiversity hotspots there. Machine learning (ML) offers significant potential for automatic in situ biodiversity monitoring, particularly through audio-based identification methods such as passive acoustic monitoring (Global Partnership on Artificial Intelligence, 2022; Tuia et al., 2022; Nieto-Mora et al., 2023).

Our Solution

Amphibians are ideal for acoustic identification due to their diverse vocalizations, and they are crucial ecosystem indicators (Estes-Zumpf et al., 2022), with over 40% of species at risk of extinction. Ribbit leverages few-shot transfer learning for frog call identification and classification, giving nature enthusiasts the ability to easily identify frogs in the wild. Users who opt in may also upload high-quality data to the Global Biodiversity Information Facility (GBIF) to address data gaps, especially in the Global South. To reach more users in the Global South, our app is available in four languages: English, Spanish, Portuguese and Arabic.

Data Sources and Approach

We gathered frog and toad calls from three data sources: iNat sounds, Anuraset and Anfibios del Ecuador. We chose these three datasets to cover a wide range of calls in terms of recording equipment and geographic location. The iNat sounds calls are usually recorded by citizen scientists on their mobile phones all over the world, but tend to be biased towards the Global North. The Anuraset calls were recorded by scientists in the Brazilian Amazon on specialized equipment, using passive acoustic monitoring. Recordings in both of these sets therefore often contained many frogs calling at once, while the Anfibios del Ecuador dataset was recorded by scientists using unidirectional microphones, so most of its recordings tended to have fewer calling species. Sadly, we ended up discarding the Anfibios del Ecuador dataset, as there were too few calls per species to train our model.

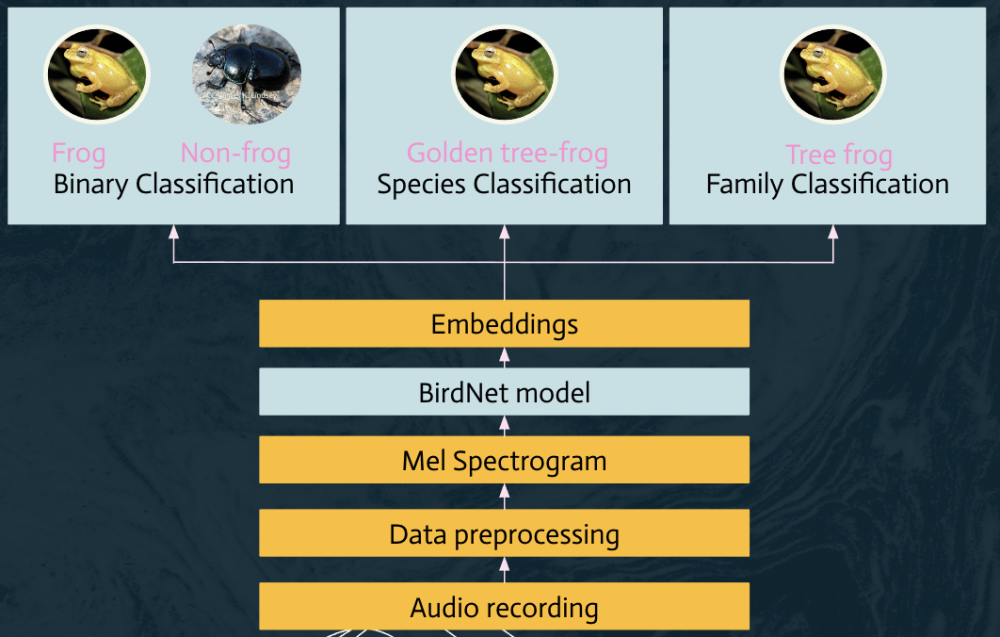

How bird calls allowed us to classify frog species

We leveraged the findings of Ghani et al. (2023), who found that global birdsong embeddings extracted using the BirdNet model, a CNN developed by the Cornell Lab of Ornithology and based on the ResNet-18 architecture, allowed for few-shot transfer learning by simply adding a linear probe on top of the extracted embeddings. CNNs are widely used in species classification, as they can pick up on patterns in a call, such as chirps or even dialects. We therefore converted our frog calls into images, or spectrograms. Spectrograms are visual representations of audio that hold both temporal and spectral information; the most widely used spectrogram in species classification is the mel spectrogram, which "is commonly used in speech processing and machine learning tasks. It is similar to a spectrogram in that it shows the frequency content of an audio signal over time, but on a different frequency axis." (Source: Huggingface) The difference is that the frequency axis follows the mel scale, which is modeled on human pitch perception and gives more resolution to lower frequencies than to higher ones. Restricting that axis to the range relevant to the calls (in our case, frogs) reduces much of the noise.

Before converting our data into spectrograms, however, we needed to clean it. This meant clipping the audio to the input expected by BirdNet (3-second clips sampled at 48 kHz), converting the audio to .wav, removing background noise to extract the signal, and normalizing the audio. Once the data was clean, we converted each clip to a mel spectrogram, reduced the spectrogram to a single channel, and converted the pixel information into an array. We passed that array through the BirdNet model and extracted the embeddings, which were then ready for training different models.
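The cleaning steps above can be sketched roughly as follows. This is a minimal illustration using only NumPy, not the pipeline we actually ran: the function names (`split_into_clips`, `peak_normalize`, `hz_to_mel`) are hypothetical, zero-padding the last clip and peak normalization are assumptions, and noise removal and the spectrogram itself are left out. The `hz_to_mel` helper uses the common HTK-style formula to show how a mel axis warps frequency.

```python
import numpy as np

SAMPLE_RATE = 48_000  # Hz, the sample rate named in the text
CLIP_SECONDS = 3      # seconds, the clip length named in the text


def split_into_clips(audio, sample_rate=SAMPLE_RATE, clip_seconds=CLIP_SECONDS):
    """Split a mono waveform into fixed-length clips, zero-padding the last one."""
    clip_len = sample_rate * clip_seconds
    n_clips = max(1, int(np.ceil(len(audio) / clip_len)))
    padded = np.zeros(n_clips * clip_len, dtype=np.float32)
    padded[: len(audio)] = audio
    return padded.reshape(n_clips, clip_len)


def peak_normalize(clip, eps=1e-9):
    """Scale a clip so its largest absolute sample is 1 (silent clips are returned unchanged)."""
    peak = np.max(np.abs(clip))
    return clip / peak if peak > eps else clip


def hz_to_mel(freq_hz):
    """Convert frequency in Hz to mels using the HTK-style formula."""
    return 2595.0 * np.log10(1.0 + np.asarray(freq_hz, dtype=float) / 700.0)


# Example: 7 seconds of a 1 kHz tone becomes three 3-second clips.
t = np.arange(7 * SAMPLE_RATE) / SAMPLE_RATE
tone = 0.5 * np.sin(2 * np.pi * 1000 * t)
clips = np.stack([peak_normalize(c) for c in split_into_clips(tone)])
print(clips.shape)  # (3, 144000)
```

In practice a library such as librosa would typically handle resampling and the mel spectrogram; the sketch only makes the fixed input shape and the normalization step concrete.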

Data augmentation

Our final goal was to identify the frogs' species. However, when we performed exploratory data analysis (EDA), we noticed that co-occurring species had highly correlated calls, which would make species classification difficult. Subject matter experts agreed that adding a binary layer (frog or non-frog) before the species classification layer tends to improve species classification models. We therefore generated a non-frog dataset, again using iNat sounds, from species whose call frequencies differed from the peak frequencies we had found in our frog dataset. That included several species of insects, reptiles and mammals.

Since many of our frog species had very few calls, we also performed data augmentation to double the number of calls for our low-sample species. We did this by duplicating each frog call and adding background noise from another species to the copy.
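A minimal sketch of this kind of augmentation is shown below. It is an illustration under assumptions rather than our exact procedure: the function name `mix_at_snr` and the idea of controlling the mix with a signal-to-noise ratio (`snr_db`) are hypothetical choices, and the background is simply tiled or trimmed to match the call length.

```python
import numpy as np


def mix_at_snr(call, background, snr_db):
    """Overlay `background` on `call` so the call-to-background power ratio is `snr_db` dB."""
    # Tile or trim the background so it matches the call length.
    reps = int(np.ceil(len(call) / len(background)))
    background = np.tile(background, reps)[: len(call)]

    call_power = np.mean(call ** 2)
    bg_power = np.mean(background ** 2)
    if call_power == 0 or bg_power == 0:
        return call.copy()  # nothing to scale against

    # Gain that brings the background to the requested level relative to the call.
    target_bg_power = call_power / (10 ** (snr_db / 10))
    gain = np.sqrt(target_bg_power / bg_power)
    return call + gain * background


# Example with synthetic signals standing in for a frog call and another species' recording.
rng = np.random.default_rng(0)
frog_call = rng.standard_normal(48_000)
other_species = rng.standard_normal(20_000)
augmented = mix_at_snr(frog_call, other_species, snr_db=10)
```

The augmented copy keeps the original label, so each low-sample species ends up with twice as many training examples.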

Modeling

Our final goal was to perform few-shot transfer learning (FSL) using as few as 35 calls per species, as suggested by Ghani et al. (2023). We used their approach as our baseline model: they only split the data into train and test sets and performed no hyperparameter tuning. However, we also wanted to evaluate whether a "vanilla" feed-forward neural network (FFNN), which we could fine-tune, would improve our metrics. We therefore ran experiments permuting taxonomic level, type of model (FSL/vanilla), feature engineering, data source and type of embedding.
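To make the linear-probe idea concrete, here is a small sketch of a single softmax layer trained on frozen embeddings. It is not our training code: the embeddings are synthetic stand-ins (random clusters with an arbitrary 16 dimensions), the function names, learning rate and epoch count are assumptions, and only the 35 samples per class mirrors the text.

```python
import numpy as np


def train_linear_probe(embeddings, labels, n_classes, lr=0.01, epochs=200):
    """Fit one softmax layer on frozen embeddings with batch gradient descent."""
    n_samples, dim = embeddings.shape
    weights = np.zeros((dim, n_classes))
    bias = np.zeros(n_classes)
    one_hot = np.eye(n_classes)[labels]

    for _ in range(epochs):
        logits = embeddings @ weights + bias
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - one_hot) / n_samples  # gradient of mean cross-entropy w.r.t. logits
        weights -= lr * embeddings.T @ grad
        bias -= lr * grad.sum(axis=0)
    return weights, bias


def predict_scores(embeddings, weights, bias):
    """Return raw class scores (logits) for each embedding."""
    return embeddings @ weights + bias


# Synthetic stand-in for extracted embeddings: 3 classes, 35 samples each.
rng = np.random.default_rng(0)
n_classes, per_class, dim = 3, 35, 16
centers = rng.normal(scale=3.0, size=(n_classes, dim))
labels = np.repeat(np.arange(n_classes), per_class)
embeddings = centers[labels] + rng.normal(size=(len(labels), dim))

weights, bias = train_linear_probe(embeddings, labels, n_classes)
train_accuracy = np.mean(predict_scores(embeddings, weights, bias).argmax(axis=1) == labels)
print(train_accuracy)
```

Because the embedding model stays frozen, only `weights` and `bias` are learned, which is what makes training feasible with so few calls per species.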

Evaluation

Since most of our data came from passive acoustic monitoring, where multiple species call at once, top-1 accuracy did not make much sense, so we followed the top-5 accuracy approach used by iNat sounds. Our best-performing model was the FSL multiclass species model, covering 71 species.
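For reference, top-k accuracy counts a prediction as correct when the true species appears anywhere among the k highest-scoring classes. The sketch below is a generic NumPy implementation with a hypothetical function name and toy scores, not our evaluation code.

```python
import numpy as np


def top_k_accuracy(scores, true_labels, k=5):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    scores = np.asarray(scores)
    true_labels = np.asarray(true_labels)
    # Indices of the k largest scores per row (order within the top k does not matter).
    top_k = np.argpartition(scores, -k, axis=1)[:, -k:]
    hits = (top_k == true_labels[:, None]).any(axis=1)
    return float(hits.mean())


# Toy example: 3 recordings scored against 6 classes.
scores = np.array([
    [0.10, 0.50, 0.20, 0.05, 0.10, 0.05],
    [0.30, 0.10, 0.40, 0.10, 0.05, 0.05],
    [0.05, 0.05, 0.10, 0.20, 0.30, 0.30],
])
true_labels = np.array([1, 0, 0])

top1 = top_k_accuracy(scores, true_labels, k=1)  # only the first recording is a hit
top2 = top_k_accuracy(scores, true_labels, k=2)  # the second recording now counts too
```

With several species calling in one recording, the labeled species may not be the single highest-scoring class, which is why a top-k metric is the more forgiving choice here.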

Key Learnings and Impact

- Contrary to the experience of other subject matter experts, adding a binary layer did not improve the performance of our model. A visual PCA of our frog and non-frog embeddings revealed that clustering was not optimal, as many of the frog calls from the iNat sounds dataset clustered together with non-frog calls from the same dataset. While we selected the non-frog species based on spectral characteristics (peak frequency), we may have inadvertently introduced some of the sounds found in those species' calls into our augmented frog calls.

- We also noticed very pronounced clustering of our embeddings by dataset. Other subject matter experts reported the same experience, which suggests that the recording apparatus affects the embeddings generated. However, we saw no difference across datasets in the predictions made by our best-performing model.

- At the beginning of the project, we sent out surveys to determine the need for and interest in our data. We obtained 77 responses (44 in Spanish, 27 English, 16 Portuguese) and 45 respondents left contact information for beta testing. 95% said they would be willing to share their frog recordings with global biodiversity datasets.

- We noticed that our model was able to correctly identify up to four simultaneously calling species within a single call of the Anuraset dataset, which had strong labels (i.e. more than one species label per call).
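The kind of visual PCA check mentioned in the learnings above can be sketched as follows. This is an illustrative example on synthetic data, not our analysis: the function name `pca_project`, the 8-dimensional embeddings and the two offset groups (standing in for two recording sources) are all assumptions.

```python
import numpy as np


def pca_project(embeddings, n_components=2):
    """Project embeddings onto their top principal components via SVD."""
    centered = embeddings - embeddings.mean(axis=0)
    # Rows of vt are principal directions, ordered by explained variance.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    explained = singular_values ** 2 / np.sum(singular_values ** 2)
    return centered @ vt[:n_components].T, explained[:n_components]


# Two synthetic groups with a constant offset, mimicking a dataset-level shift.
rng = np.random.default_rng(0)
group_a = rng.normal(size=(50, 8))
group_b = rng.normal(size=(50, 8)) + 5.0
coords, explained = pca_project(np.vstack([group_a, group_b]))

# Mean position of each group along the first principal component.
mean_a = coords[:50, 0].mean()
mean_b = coords[50:, 0].mean()
```

Plotting `coords` colored by dataset (or by frog versus non-frog) gives a quick view of whether the embeddings separate by recording source rather than by species.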

Acknowledgements

The Ribbit team consulted several subject matter experts during the development of our app. We'd like to thank:

Dr. J. Nicolás Urbina - Pontificia Universidad Javeriana, Colombia: for their insights regarding the monitoring of anuran (frogs and toads) species in Colombia.

Dr. Santiago Ron - Museo de Zoología, Pontificia Universidad Católica del Ecuador: for allowing us to use their recordings of Ecuadorian frogs and toads in our model training.

Dr. Juan Sebastián Ulloa - Instituto Humboldt, Colombia: for sharing their knowledge of AI and biodiversity conservation, and pointing us towards the BirdNet model.

Juan Sebastián Cañas - for giving us insights on the Anuraset dataset.

Dr. Jodi Rowley - FrogID, Australia: for sharing their knowledge on citizen science apps and validation of frog calls by experts.

Dr. Stefan Kahl - Cornell Lab of Ornithology: for giving us insights into how to incorporate the BirdNet model into our app.

Dr. Matthew McKown - Conservation Metrics: for discussing embeddings with us, and giving us ideas on how to extend Ribbit moving forward.

We'd also like to thank our instructors, Zona Kostic and Morgan Ames, and all our beta testers for their valuable feedback.

References

- Boston Consulting Group (2022) - How AI Can Be a Powerful Tool in the Fight Against Climate Change

- Cañas et al. (2023) - A dataset for benchmarking Neotropical anuran calls identification in passive acoustic monitoring

- Estes-Zumpf et al. (2022) - Improving sustainability of long-term amphibian monitoring: The value of collaboration and community science for indicator species management

- Ghani et al. (2023) - Global birdsong embeddings enable superior transfer learning for bioacoustic classification

- Kahl et al. (2021) - BirdNET: A deep learning solution for avian diversity monitoring

- Jepson & Ladle (2015) - Nature apps: Waiting for the revolution

- Napier et al. (2024) - Advancements in preprocessing, detection and classification techniques for ecoacoustic data: A comprehensive review for large-scale Passive Acoustic Monitoring

- Nieto-Mora et al. (2023) - Systematic review of machine learning methods applied to ecoacoustics and soundscape monitoring

- Rowley et al. (2018) - FrogID: Citizen Scientists Provide Validated Biodiversity Data on Frogs of Australia

- Silvestro et al. (2022) - Improving biodiversity protection through artificial intelligence

- The Global Partnership on Artificial Intelligence (2022) - Biodiversity and Artificial Intelligence - Opportunities and Recommendations for Action

- Tuia et al. (2022) - Perspectives in machine learning for wildlife conservation