SynthCall

What is Function Calling?

Function calling enables large language models (LLMs) to invoke precise, user-defined functions. The model selects a function and supplies its arguments in a structured format, and the application runs the function and returns the result. This improves accuracy, automates repetitive tasks, and reduces reliance on costly infrastructure such as large vector databases. By offloading complex logic or arithmetic to predefined functions, the model can produce accurate responses and avoid the errors common in purely LLM-generated outputs. This approach streamlines operations and makes LLMs more practical in real-world applications.
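To make the idea concrete, here is a minimal Python sketch. It assumes the model has been prompted to reply with a JSON object of the form `{"name": ..., "arguments": {...}}`; the functions `add` and `percent_change`, the `TOOLS` registry, and that JSON shape are hypothetical, since each function-calling API defines its own format. The model only chooses the function and its arguments, and the arithmetic runs as ordinary code.

```python
import json
from typing import Any, Callable, Dict


def add(a: float, b: float) -> float:
    """Return the sum of a and b."""
    return a + b


def percent_change(old: float, new: float) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100


# Hypothetical registry of the functions the model is allowed to call.
TOOLS: Dict[str, Callable[..., Any]] = {
    "add": add,
    "percent_change": percent_change,
}


def execute_call(model_output: str) -> Any:
    """Parse a JSON function call emitted by the model and run it locally."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown function: {name}")
    return TOOLS[name](**call["arguments"])


# Instead of doing the arithmetic itself, the model emits this call.
model_output = '{"name": "percent_change", "arguments": {"old": 80, "new": 100}}'
print(execute_call(model_output))  # 25.0
```

Rejecting unknown names keeps the model from triggering anything outside the registry.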

Problem & Motivation

Current solutions for AI-driven tasks, such as retrieval-augmented generation (RAG), struggle with logical operations and immediate information retrieval. Conventional fine-tuning approaches require human-generated data, which can be expensive to obtain. Our team therefore turned to function calling with synthetic data to explore viable alternatives to expensive and complex models.

Synthetic data is projected to account for 60% of all data used in AI and offers a significant alternative to human-generated data that can also improve accuracy and efficiency. By fine-tuning smaller, more efficient models and developing a low-cost synthetic data pipeline, the project aims to improve model performance in a form that businesses can integrate into their workflows.

Function calling enables precise execution of user-defined logic, allowing large language models to handle tasks that require structured and accurate outputs. This capability, combined with synthetic data generation, reduces reliance on expensive vector databases and streamlines the automation of repetitive tasks. By focusing on scalable, cost-effective solutions, our team aims to address critical gaps in AI workflows, such as maintaining data integrity, ensuring model transparency, and enhancing operational efficiency.

Furthermore, this approach not only optimizes AI performance but also reduces the overhead associated with training and maintaining large, parameter-intensive models.

Data Source

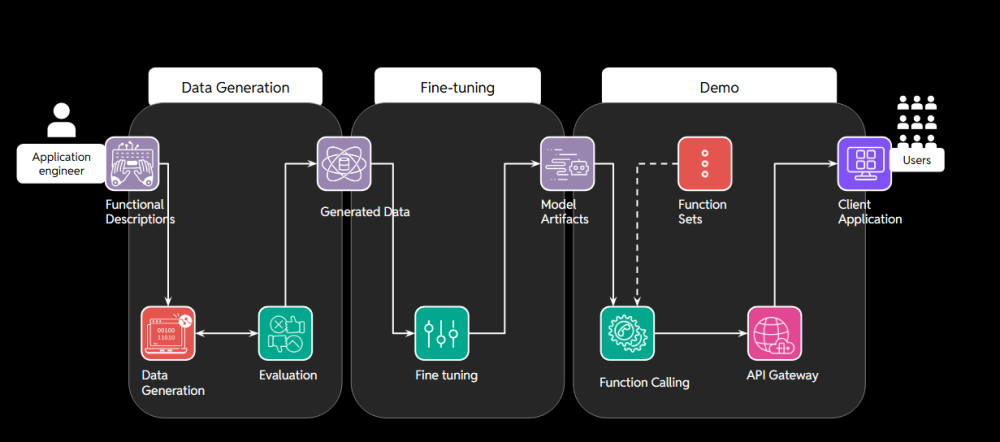

The data generation pipeline in our project is designed to create high-quality synthetic data for function-calling tasks. At its core, the pipeline uses Ollama, a tool for running models locally on premises, to serve the Llama 3.1 8B Instruct model. This approach prioritizes data security by keeping all model interactions within the user’s environment, protecting sensitive information from external exposure. Llama 3.1 8B strikes a balance between performance and resource efficiency, though the system's modular design also allows cloud-hosted models to be used instead.

Our pipeline begins with carefully crafted function descriptions, complete with clear docstrings and type hints, which serve as the foundation for data generation. We use LangChain's ChatOllama integration to obtain consistent, structured output in JSON format, which is crucial for downstream processing. Generation involves iterative prompt refinement: we assess small batches of generated data against qualitative criteria such as argument correctness and query relevance, then adjust the prompts so the generated data aligns closely with the original function specifications. Throughout development, we experimented with function types ranging from simple arithmetic operations to more complex data manipulation tasks, continuously refining our approach to handle diverse function structures and requirements.
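The paragraph above describes deriving prompts from docstrings and type hints and checking generated samples for argument correctness. The following Python sketch illustrates that loop under several assumptions: each sample has the shape `{"query": ..., "arguments": {...}}`, and `calculate_discount`, `fake_llm`, and the prompt wording are hypothetical. The signature and type check is an automated stand-in for illustration, not the team's qualitative review. The `llm` parameter is any prompt-to-string callable, so a local or cloud model can be swapped in.

```python
import inspect
import json
from typing import Any, Callable, Optional, get_type_hints


def calculate_discount(price: float, percent: float) -> float:
    """Return the price after applying a percentage discount."""
    return price * (1 - percent / 100)


def describe_function(fn: Callable[..., Any]) -> dict:
    """Build a function description from its signature, type hints, and docstring."""
    hints = get_type_hints(fn)
    params = {
        name: getattr(hints.get(name), "__name__", "any")
        for name in inspect.signature(fn).parameters
    }
    return {"name": fn.__name__, "description": inspect.getdoc(fn), "parameters": params}


def build_prompt(spec: dict) -> str:
    """Ask the model for one realistic user query and the matching arguments, as JSON."""
    return (
        "You write training data for function calling.\n"
        f"Function: {json.dumps(spec)}\n"
        'Reply with JSON only: {"query": "<user question>", "arguments": {"<param>": <value>}}'
    )


def type_matches(value: Any, expected: Any) -> bool:
    """Loosely check a decoded JSON value against a type hint."""
    if expected is float:  # JSON integers such as 80 are acceptable for float parameters
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    if isinstance(expected, type):
        return isinstance(value, expected)
    return True  # unannotated or generic hints are not checked in this sketch


def validate_sample(raw: str, fn: Callable[..., Any]) -> Optional[dict]:
    """Return the parsed sample if it is well-formed and fits fn's signature, else None."""
    try:
        sample = json.loads(raw)
        args = sample["arguments"]
        inspect.signature(fn).bind(**args)  # TypeError on missing or unexpected arguments
    except (json.JSONDecodeError, KeyError, TypeError):
        return None
    query = sample.get("query")
    if not isinstance(query, str) or not query.strip():
        return None
    hints = get_type_hints(fn)
    if not all(type_matches(v, hints.get(k)) for k, v in args.items()):
        return None
    return sample


def generate_samples(fn: Callable[..., Any], llm: Callable[[str], str], n: int) -> list:
    """Call the model n times and keep only the samples that pass validation."""
    prompt = build_prompt(describe_function(fn))
    samples = []
    for _ in range(n):
        sample = validate_sample(llm(prompt), fn)
        if sample is not None:
            samples.append(sample)
    return samples


# Hypothetical stand-in for the real model. With LangChain, llm could instead be (assumed usage):
# lambda p: ChatOllama(model="llama3.1:8b", format="json").invoke(p).content
def fake_llm(prompt: str) -> str:
    return '{"query": "What does an $80 jacket cost at 25% off?", "arguments": {"price": 80, "percent": 25}}'


print(generate_samples(calculate_discount, fake_llm, n=2))
```

Because `Signature.bind` rejects missing and unexpected keyword arguments, malformed calls are dropped before they reach the training set.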

Methodology

After completing the data generation pipeline, we applied instruct fine-tuning to several free, open-source models to improve their function-calling performance and produce more accurate results. Each training example paired a proposed user question as the input with the ideal JSON-structured output as the target answer. We fine-tuned several models, including Llama 3.1 and Llama 3.2, and varied model size (1B and 7B parameters); we did not attempt to fine-tune the largest publicly available models (70B and 405B) due to cost constraints. We also fine-tuned Mistral models as a point of comparison, though our focus remained on the Llama models.
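The text specifies that each example pairs a question with the ideal JSON output. One way to package such pairs is sketched below, assuming a chat-style `messages` layout written as JSON Lines, which many fine-tuning libraries accept. The system prompt, `calculate_discount`, and the example pairs are hypothetical; the source does not state which training format or tooling was used.

```python
import json


def to_instruct_record(query: str, call: dict, system_prompt: str) -> dict:
    """Format one (question, ideal function call) pair as a chat-style training example."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": query},
            # Training target: the ideal function call, serialized as JSON text.
            {"role": "assistant", "content": json.dumps(call)},
        ]
    }


def to_jsonl(records: list) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r) for r in records) + "\n"


# Hypothetical system prompt describing the available function.
SYSTEM = (
    "You can call calculate_discount(price: float, percent: float). "
    'Answer with JSON only: {"name": ..., "arguments": {...}}'
)
pairs = [
    ("What does an $80 jacket cost at 25% off?",
     {"name": "calculate_discount", "arguments": {"price": 80, "percent": 25}}),
    ("Take 10% off a $45 pair of shoes.",
     {"name": "calculate_discount", "arguments": {"price": 45, "percent": 10}}),
]
dataset = to_jsonl([to_instruct_record(q, c, SYSTEM) for q, c in pairs])
print(dataset)
```

Keeping the target as serialized JSON text means the fine-tuned model learns to emit exactly the string the calling code will parse.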

Evaluation

The evaluation process is designed to reduce the need for extensive human review by using automated quality metrics. These metrics quantify the relevance of each generated pair, and pairs that score below a threshold are automatically filtered out so that only high-quality data is kept. This improves efficiency and makes the data used for model fine-tuning more reliable.

The evaluation process uses a reverse query generation technique. Each generated pair contains a query and the arguments for a function call. We first use those arguments to call the actual function in the backend and obtain its return value. Next, a response is generated from the function call result. Based on this response, an LLM (large language model) generates n possible queries that could have led to it. To assess alignment, we compute the mean cosine similarity between the original query and the reverse-generated queries, and use this score with a threshold to filter out low-quality pairs. This method ensures that only the most accurate and relevant data is retained for further processing. In the evaluation pipeline, we used Llama 3.1 70B as the generation model.
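The scoring and filtering step can be sketched as follows: embed the original query and each of the n reverse-generated queries, average their cosine similarities, and keep the pair only if the mean reaches a threshold. The source does not name the embedding model, so `embed` is a placeholder and `toy_embed` is a deliberately crude bag-of-words stand-in that lets the example run without a model. The threshold of 0.5 and the hard-coded reverse queries (which would come from the generation model) are assumptions.

```python
import math
import re
from typing import Callable, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def reverse_query_score(original: str, reverse_queries: List[str],
                        embed: Callable[[str], List[float]]) -> float:
    """Mean cosine similarity between the original query and the n reverse queries (n >= 1)."""
    target = embed(original)
    sims = [cosine(target, embed(q)) for q in reverse_queries]
    return sum(sims) / len(sims)


def filter_pairs(pairs: List[dict], embed: Callable[[str], List[float]],
                 threshold: float) -> List[dict]:
    """Keep only pairs whose mean similarity reaches the threshold."""
    return [p for p in pairs
            if reverse_query_score(p["query"], p["reverse_queries"], embed) >= threshold]


# Toy bag-of-words "embedding" so the sketch runs offline; a real pipeline
# would call a sentence-embedding model here.
VOCAB = ["discount", "jacket", "price", "percent", "weather", "tomorrow"]


def toy_embed(text: str) -> List[float]:
    words = re.findall(r"[a-z]+", text.lower())
    return [float(words.count(w)) for w in VOCAB]


pairs = [
    {"query": "What is the price of the jacket after a 25 percent discount?",
     "reverse_queries": ["How much is the jacket after a 25 percent discount?",
                         "What price will I pay for the jacket with the discount?"]},
    {"query": "What is the price of the jacket after a 25 percent discount?",
     "reverse_queries": ["What will the weather be tomorrow?"]},
]
kept = filter_pairs(pairs, toy_embed, threshold=0.5)
print(len(kept))  # 1
```

The first pair's reverse queries stay on topic and score about 0.87, while the second pair's drift to an unrelated question, score 0, and are filtered out.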

Key Learnings & Impact

Acknowledgements

First and foremost, we thank our Capstone advisors, Kira Wetzel and Fred Nugen, for sharing their knowledge and giving our team valuable feedback. We also thank our stakeholders, industry experts Eric and Starr from Microsoft and Dynata respectively, and our friends and family for supporting us through the final steps of the MIDS program.

Thank you!