Windfallen

Toward robotic harvesting to reduce US food waste.

Thirty-eight percent of all food in the US is wasted, the equivalent of 145 billion meals.1

The US wastes enough food to feed every person on food stamps for the entire year three times over.

A substantial amount of this waste happens at the farm level: a study by Santa Clara University found that field losses of edible produce are approximately 33.7% of marketed yield.2 Windfallen is a team of researchers passionate about reinforcement learning, robotics, agriculture, and applying emerging technology to hard problems such as reducing food waste at the farm level.

We are reimagining agriculture through the lens of robotics.

Advancements in robotic technology are primed to overhaul the agriculture industry, where ~30-40% of farm operating costs come from labor. Employing laborers to harvest produce poses numerous challenges. One is that rising labor costs are shrinking margins for farmers, even making the harvest and sale of certain lower-margin produce uneconomical.3 On a ripe apple tree, a fall breeze or a hot day can cause a large proportion of the fruit to drop prematurely. In the past, these ‘windfall’ fruits were collected and sold at a lower margin for processed foodstuffs like cider and applesauce. Nowadays, more and more apples are left to rot on the ground because rising labor costs no longer justify their collection.

The collection of windfall apples, and by extension other windfall fruit, is a promising area for robotic deployment to reduce food waste while not threatening workers’ livelihoods. However, successful robotic deployment is notoriously difficult and requires multiple layers of hardware and software technology. Our team focuses on one specific layer: robot orchestration in an agricultural setting. We have simulated a multi-robot fleet that uses multi-agent reinforcement learning (MARL) to collaborate on collecting windfall fruit.

How do you get robots to work together toward a common goal?

As industrial automation continues, more robots will be deployed to carry out tasks, and the problem of robot orchestration becomes increasingly important. In other words, how do you get robots to work together to achieve their goals? The burgeoning field of multi-agent reinforcement learning (MARL) offers a promising machine-learning-based approach to this problem. As the field of robotics approaches its “GPT moment”, the demand for MARL technology in real-world contexts is likely to grow.4

Our Research

Multi-agent reinforcement learning (MARL) has been an active field of research for over a decade. However, research pushing the field toward real-world use cases is less common. Our research provides a first look at the potential of MARL in an agricultural setting. We focus on the use case of harvesting windfall apples and expect this technology to transfer readily to other crops like oranges or walnuts.

Research in this field has largely been conducted in simple 2D environments that minimally represent real-world circumstances. InstaDeep’s Jumanji research library, for example, offers 22 such environments.5 We provide a novel environment based on field measurements of real apple orchards. This environment randomly generates an orchard with real-world dimensions in which agents are trained to collect windfall apples. In conducting this research, we take the first step toward deploying a robotic fleet to collect windfall fruit and provide valuable insight for the research community on the viability of MARL in real-world settings.

Data Science Approach

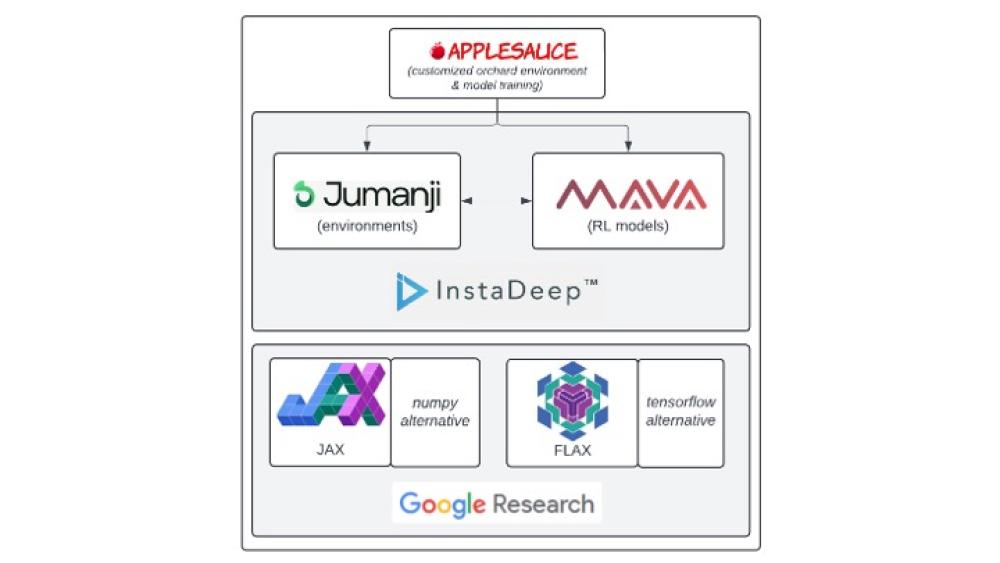

A MARL implementation of this use case, fondly referred to as “Applesauce”, was adapted from the Jumanji and Mava research libraries.5, 6 Both libraries are research tools developed by InstaDeep to accelerate MARL research. Because training MARL algorithms requires millions of training steps at a minimum, parallelization is key to efficient research and development. Mava, Jumanji, and our “Applesauce” implementation are all built using Google’s JAX and Flax Python libraries, which enable parallel computation with minimal code changes.

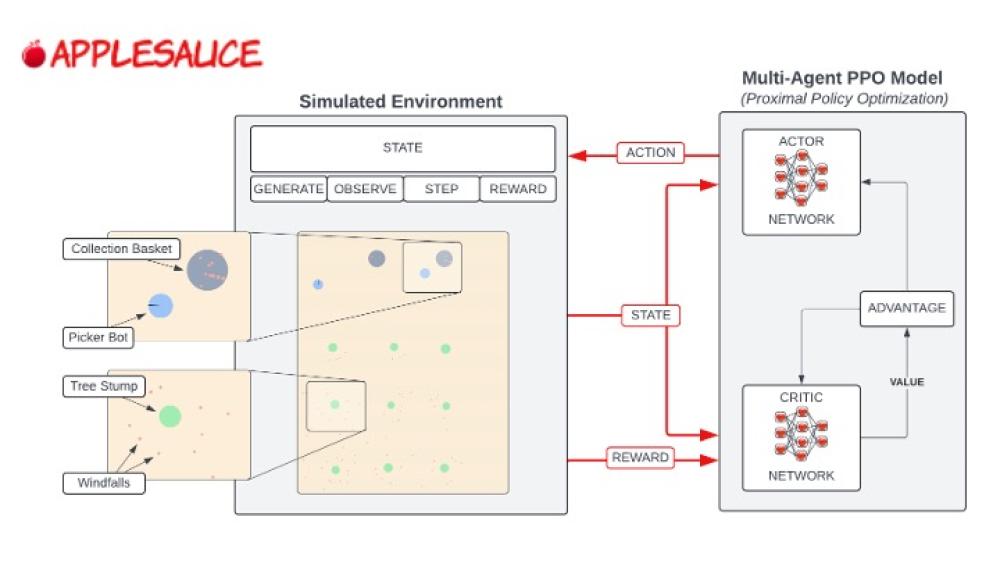

Environment

At the core of our research is a novel environment for testing assumptions about the robotic harvesting use case. To this end, our team used field measurements from ten apple orchards near Santa Cruz, CA to inform realistic orchard dimensions and tree densities. Applesauce’s infrastructure randomly generates orchards with different dimensions so that a variety of orchard environments is included in the training and evaluation processes.

A bird's-eye view illustrates how different two apple orchards can be. Some are laid out in orderly, consistent rows, while others have trees of different ages in disorganized layouts. Our simulated environment accounts for both types of orchards and the range of layouts in between.
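
As a rough illustration of the idea, the sketch below generates an orchard with random dimensions and a tunable amount of layout disorder. It is not the Applesauce implementation: the function name `generate_orchard`, the `disorder` parameter, the size range, and the spacing defaults are all hypothetical values chosen for the example.

```python
import random
from dataclasses import dataclass


@dataclass
class Orchard:
    width_m: float
    length_m: float
    trees: list  # list of (x, y) positions in metres


def generate_orchard(rng, disorder=0.0, row_spacing_m=5.0, tree_spacing_m=3.0):
    """Hypothetical generator: disorder=0 gives straight rows, disorder=1 heavy jitter."""
    # Assumed size range; the real environment uses field measurements instead.
    width = rng.uniform(30.0, 60.0)
    length = rng.uniform(30.0, 60.0)
    trees = []
    x = row_spacing_m / 2
    while x < width:
        y = tree_spacing_m / 2
        while y < length:
            # Jitter each tree away from its grid position, scaled by disorder.
            jx = rng.uniform(-1, 1) * disorder * row_spacing_m / 2
            jy = rng.uniform(-1, 1) * disorder * tree_spacing_m / 2
            # Clamp so that every tree stays inside the orchard boundary.
            tx = min(max(x + jx, 0.0), width)
            ty = min(max(y + jy, 0.0), length)
            trees.append((tx, ty))
            y += tree_spacing_m
        x += row_spacing_m
    return Orchard(width, length, trees)


orderly = generate_orchard(random.Random(0), disorder=0.0)
messy = generate_orchard(random.Random(0), disorder=1.0)
```

Sampling a fresh orchard for each training episode, with `disorder` drawn from the whole range between 0 and 1, is one way to expose agents to both orderly and disorganized layouts.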

In addition to a more realistic layout, we improve upon currently available environments by:

- Establishing agents with a much larger action space: Agents can rotate to almost any angle, enabling more targeted movements across the orchard. This is a step beyond Jumanji’s Level-Based Foraging environment, which limits agents’ range of motion to forward, backward, left or right.

- Implementing a continuous environment space: Whereas other research environments use a discrete environment space (sometimes referred to as a grid world), the Applesauce environment allows agents to navigate to any point in continuous 2D space. This results in a larger set of possible environment states and sparser positive rewards, making this a much larger and more challenging training task.
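
To make the two points above concrete, the sketch below shows one way a rotate-then-move action could update an agent's position in continuous 2D space. The function `step_agent`, its parameters, and the rule that an out-of-bounds move leaves the agent in place are assumptions for illustration, not the Applesauce dynamics.

```python
import math


def step_agent(x, y, heading_rad, turn_rad, distance, width, length):
    """Rotate by turn_rad, then move `distance` along the new heading.

    Hypothetical dynamics: a move that would leave the orchard is rejected
    and the agent keeps its old position.
    """
    new_heading = (heading_rad + turn_rad) % (2 * math.pi)
    nx = x + distance * math.cos(new_heading)
    ny = y + distance * math.sin(new_heading)
    in_bounds = 0.0 <= nx <= width and 0.0 <= ny <= length
    if not in_bounds:
        nx, ny = x, y
    return nx, ny, new_heading, in_bounds


# A grid world would allow only four moves; here any turn angle is valid.
nx, ny, heading, ok = step_agent(5.0, 5.0, 0.0, math.radians(37.0), 1.0, 10.0, 10.0)
```

Because both the turn angle and the resulting position are real-valued, the set of reachable states is far larger than in a grid world, which is what makes positive rewards harder to stumble upon.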

Training Approach

At a high level, reinforcement learning trains a model to interface with an environment. At each time step, the model receives information on the state (or observation) of the environment as well as its reward based on previous actions, and outputs a next action to take with the goal of maximizing its rewards. The Applesauce infrastructure follows this concept, using multi-agent proximal policy optimization (MAPPO) for training.
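
The observe, act, and receive-reward cycle described above can be sketched with a toy single-agent example. `ToyEnv`, its reward values, and `run_episode` are hypothetical stand-ins written for this illustration; they are not part of Applesauce, Jumanji, or Mava.

```python
class ToyEnv:
    """Hypothetical 1-D stand-in: the agent walks along a line to an apple at position 5."""

    def reset(self):
        self.pos = 0
        return self.pos  # the observation is just the agent's position

    def step(self, action):
        # action is -1 (step left) or +1 (step right)
        self.pos += action
        done = self.pos == 5
        reward = 1.0 if done else -0.01  # small penalty for every step taken
        return self.pos, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the total reward collected."""
    obs = env.reset()
    total = 0.0
    for _ in range(max_steps):
        action = policy(obs)                  # model picks the next action
        obs, reward, done = env.step(action)  # environment responds
        total += reward
        if done:
            break
    return total


def always_right(obs):
    return 1


episode_return = run_episode(ToyEnv(), always_right)
```

Training replaces the fixed `always_right` policy with a learned one that is updated to increase the episode return.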

Training Architecture: The Multi-Agent Proximal Policy Optimization (MAPPO) training architecture is well suited for cooperative tasks such as ours.7 Proximal policy optimization limits the size of each policy update, increasing the likelihood that the model converges, which is not always the case for MARL. MAPPO further incorporates a centralized value function that is used to compute Generalized Advantage Estimation (GAE) advantages for all agents’ actions, resulting in more collaborative behavior overall. Further information on MAPPO and other forms of proximal policy optimization can be found at MARLlib or Hugging Face.
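
For readers unfamiliar with these terms, here is a minimal pure-Python sketch of the two calculations named above: GAE and the clipped PPO surrogate. It assumes a single uninterrupted trajectory with no episode-termination masking, and the default `gamma`, `lam`, and `eps` values are common choices rather than the settings used in our training. In MAPPO, the `values` would come from the centralized value function.

```python
def gae(rewards, values, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation for one trajectory.

    `values` must hold len(rewards) + 1 entries; the last one is the
    bootstrap value of the state reached after the final reward.
    """
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        # One-step temporal-difference error at time t.
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        # Exponentially weighted sum of this and all later TD errors.
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages


def clipped_surrogate(ratio, advantage, eps=0.2):
    """PPO objective for one sample; ratio = new_prob / old_prob of the action."""
    clipped = max(1.0 - eps, min(1.0 + eps, ratio))
    # Taking the minimum removes any incentive to push the ratio outside the clip range.
    return min(ratio * advantage, clipped * advantage)


advantages = gae([0.0, 1.0], [0.1, 0.5, 0.0])
objective = clipped_surrogate(1.5, advantages[0])
```

The clip range `eps` is what bounds the size of each policy update.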

Reward Function: Critical to any reinforcement learning model is a well-thought-out reward function, lest your agents fool you by finding a loophole! Through qualitative observation and fine-tuning, we developed a complex set of positive and negative rewards:

- Positive rewards for: picking up an apple, dropping an apple in the bin

- Negative rewards for: out of bounds, taking a step, improper attempt to pick up, improper attempt to drop, dropping an apple not in bin, no action (noop), colliding, hoarding
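
One simple way to organize a reward set like this is a lookup table that is summed over the events triggered in a time step. The sketch below is illustrative only: the event names mirror the lists above, but every magnitude is a made-up placeholder, and `step_reward` is a hypothetical helper rather than the Applesauce reward function.

```python
# All magnitudes are hypothetical placeholders, not the tuned Applesauce values.
REWARDS = {
    "pick_up_apple": 1.0,
    "drop_apple_in_bin": 2.0,
    "out_of_bounds": -0.5,
    "step": -0.01,
    "improper_pick_up": -0.1,
    "improper_drop": -0.1,
    "drop_outside_bin": -0.5,
    "noop": -0.05,
    "collision": -0.5,
    "hoarding": -0.2,
}


def step_reward(events):
    """Sum the rewards for every event an agent triggered in one time step."""
    return sum(REWARDS[event] for event in events)


# An agent that moved and then delivered an apple in the same step.
reward = step_reward(["step", "drop_apple_in_bin"])
```

Keeping the values in one table makes the kind of qualitative tuning described above easier, because a loophole can be closed by adjusting or adding a single entry.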

The environment and trained model are rendered through a 2D visual representation, and an example of model inference can be seen in the video in the sidebar.

Evaluation

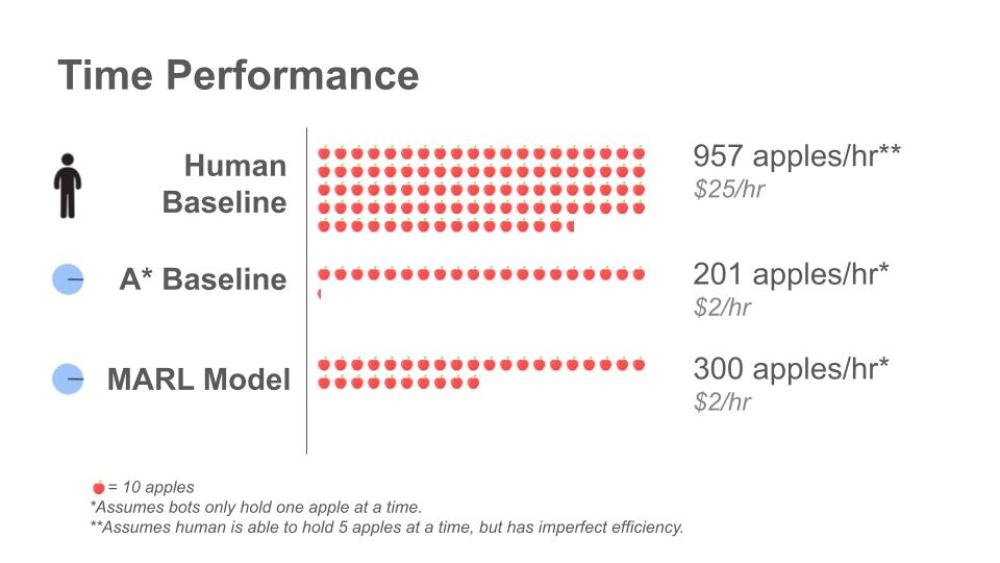

We measure the performance of MARL against two baselines. The first is an algorithmic A* baseline, which directs each agent to the apple nearest to it. The second, and more interesting, is the human baseline. Would it be possible for these agents (or robots in the physical world) to outperform humans?
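
The target-selection step of the algorithmic baseline can be sketched as follows. This covers only the choice of the nearest apple by straight-line distance; the path planning itself is omitted, and `nearest_apple` is a hypothetical helper name, not code from our baseline.

```python
import math


def nearest_apple(agent_xy, apples):
    """Return the apple closest to the agent by Euclidean distance, or None if none remain."""
    if not apples:
        return None
    return min(apples, key=lambda apple: math.dist(agent_xy, apple))


target = nearest_apple((0.0, 0.0), [(3.0, 4.0), (1.0, 1.0), (6.0, 0.5)])
```

A greedy rule like this takes no account of what other agents are doing, so two agents can head for the same apple. That lack of coordination is one reason a learned multi-agent policy has room to do better.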

Our primary evaluation metric is the number of apples collected per hour. We follow the evaluation best practices documented by Gorsane et al. to support statistically sound comparisons.8

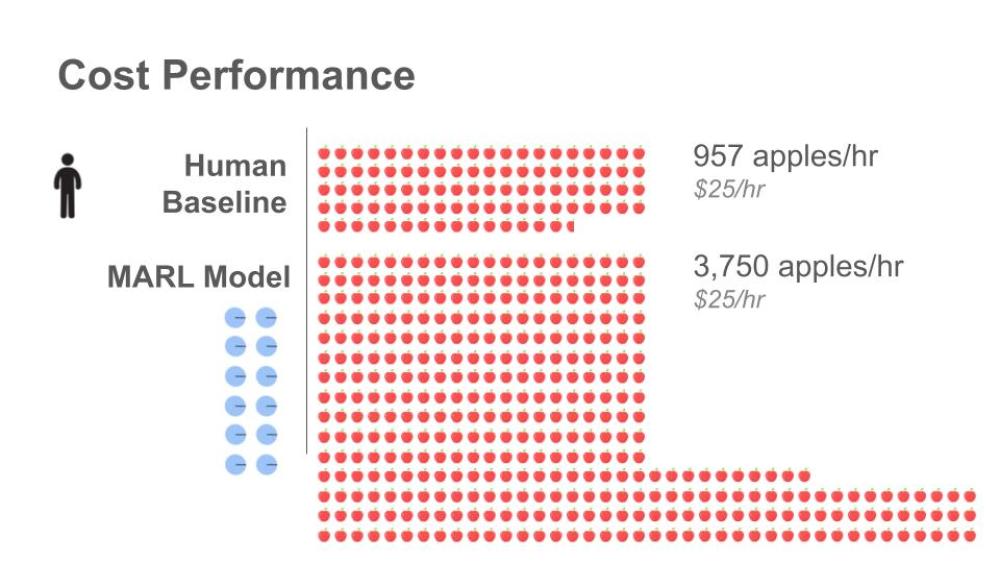

The MARL algorithm beat the algorithmic (A*) baseline, but humans are still expected to be faster at this early stage. Despite the human’s time-efficient performance, this result is still promising because of two benefits of robotic deployment: the cost per hour and the number of hours that a robot can be operational. A robot is ‘always on’ and can pick windfall apples as soon as they fall, increasing farmers’ yield of usable windfall apples. Furthermore, the low cost of deploying a robot for a single hour means that twelve robots could be deployed for the same cost as a single human worker. Taking the cost factor into account, our robotic fleet solution outperforms the human by a factor of roughly four, collecting 3750 apples per hour to the human's 957.
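
The cost-adjusted comparison can be checked with a few lines of arithmetic. The sketch below assumes that the 3750 apples per hour figure is the combined output of the twelve robots that cost the same as one human worker; the function and key names are hypothetical.

```python
def cost_adjusted_comparison(fleet_apples_per_hour, human_apples_per_hour, robots_per_human_cost):
    """Compare a cost-equivalent robot fleet with a single human worker."""
    per_robot = fleet_apples_per_hour / robots_per_human_cost
    return {
        # Output of one robot, assuming the fleet figure is split evenly.
        "per_robot_apples_per_hour": per_robot,
        # How many times more apples the fleet collects for the same spend.
        "fleet_vs_human": fleet_apples_per_hour / human_apples_per_hour,
        # How many times faster a human is than a single robot.
        "human_vs_single_robot": human_apples_per_hour / per_robot,
    }


result = cost_adjusted_comparison(3750, 957, 12)
```

Under that assumption, each robot collects 312.5 apples per hour, so a human is about three times faster than a single robot, while the fleet collects about 3.9 times as many apples as the human for the same hourly cost.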

The expected increase in produce yield, coupled with the affordability of robotic deployment, makes us confident that these results indicate a strong upside for farmers who deploy a robotic fleet. Further, it is possible to deploy robots that carry more than one apple at once, a feature that would narrow the gap between human and robotic time performance.

Key Learnings & Impact

This project provided an excellent opportunity to connect experts in the field of agriculture with those in MARL research. Our guiding light has been to improve our agricultural systems through robotic deployment, and we were excited to learn how open the agricultural community is to adopting these technologies! Our initial research provides a first pass at assessing the potential of robotic deployment for windfall apples and can serve as a benchmark for future agriculture-focused use cases within the MARL research community.

Future Work

The Windfallen team intends to publish research in 2025 after adding a few components to our system. Namely, we will incorporate movable bins to reduce the travel time for picker agents and add fog of war to simulate agents being dropped into a new, unmapped space. Beyond these features, future work could add a time-series component for short-term memory, advance to a 3D environment, and tune communication methods between agents.

Acknowledgements

We extend our sincere gratitude to our Capstone instructors, Joyce Chen and Korin Reid. Their continuous guidance was integral to maximizing the impact of our work.

We are also indebted to the developers of the Mava library, who generously shared their time and expertise on constructing an environment for accelerated training in Mava. Thank you to Ruan de Kock, Sasha Abramowitz, Omayma Mahjoub, and Wiem Khlifi from InstaDeep.

Finally, we would like to thank the farmers who opened their orchards to us. Interviews with local farmers in Watsonville, CA enabled us to tailor our research to focus on their most pressing needs and to conduct research that more closely resembles a real-world scenario.

References

1. Feeding America. Food Waste Statistics in the US. Available at: https://www.feedingamerica.org/our-work/reduce-food-waste#:~:text=Food%20waste%20statistics%20in%20the,billion%20worth%20of%20food%20annually

2. Gregory A. Baker, Leslie C. Gray, Michael J. Harwood, Travis J. Osland, Jean Baptiste C. Tooley. On-farm food loss in northern and central California: Results of field survey measurements. Resources, Conservation and Recycling. 2019. Available at: https://doi.org/10.1016/j.resconrec.2019.03.022

3. Linda Calvin, Philip Martin, and Skyler Simnitt. Adjusting to Higher Labor Costs in Selected U.S. Fresh Fruit and Vegetable Industries. USDA Economic Research Service. 2022. Available at: https://www.ers.usda.gov/webdocs/publications/104218/eib-235.pdf?v=9273.5

4. Y Combinator. Requests for Startups. Summer 2024. Available at: https://www.ycombinator.com/rfs

5. Clément Bonnet, Daniel Luo, Donal Byrne, Shikha Surana, Sasha Abramowitz, Paul Duckworth, Vincent Coyette, Laurence I. Midgley, Elshadai Tegegn, Tristan Kalloniatis, Omayma Mahjoub, Matthew Macfarlane, Andries P. Smit, Nathan Grinsztajn, Raphael Boige, Cemlyn N. Waters, Mohamed A. Mimouni, Ulrich A. Mbou Sob, Ruan de Kock, Siddarth Singh, Daniel Furelos-Blanco, Victor Le, Arnu Pretorius, Alexandre Laterre. Jumanji: a Diverse Suite of Scalable Reinforcement Learning Environments in JAX. ICLR 2024. Available at: https://arxiv.org/abs/2306.09884

6. Ruan de Kock, Omayma Mahjoub, Sasha Abramowitz, Wiem Khlifi, Callum Rhys Tilbury, Claude Formanek, Andries Smit, Arnu Pretorius. Mava: a research library for distributed multi-agent reinforcement learning in JAX. 2023. Available at: https://arxiv.org/abs/2107.01460

7. Chao Yu, Akash Velu, Eugene Vinitsky, Jiaxuan Gao, Yu Wang, Alexandre Bayen, Yi Wu. The Surprising Effectiveness of PPO in Cooperative, Multi-Agent Games. NeurIPS 2022. Available at: https://arxiv.org/abs/2103.01955

8. Rihab Gorsane, Omayma Mahjoub, Ruan de Kock, Roland Dubb, Siddarth Singh, Arnu Pretorius. Towards a Standardised Performance Evaluation Protocol for Cooperative MARL. 36th Conference on Neural Information Processing Systems (NeurIPS 2022). Available at: https://arxiv.org/abs/2209.10485