WriteAssist

Problem and Motivation

WriteAssist aims to tackle two significant challenges in grades 6-12 writing education: teacher burnout and a lack of individualized feedback for students. Teachers face overwhelming workloads, low pay, and a lack of appreciation, which lead to high levels of stress and dissatisfaction. This crisis in the teaching profession affects both teacher retention and the quality of education. At the same time, students often do not receive the personalized feedback they need to improve their writing, which results in disengagement and missed opportunities for growth. Addressing these issues is crucial for retaining teachers, improving student academic performance, and promoting educational equity, especially in public schools with large class sizes and limited resources.

Our Product

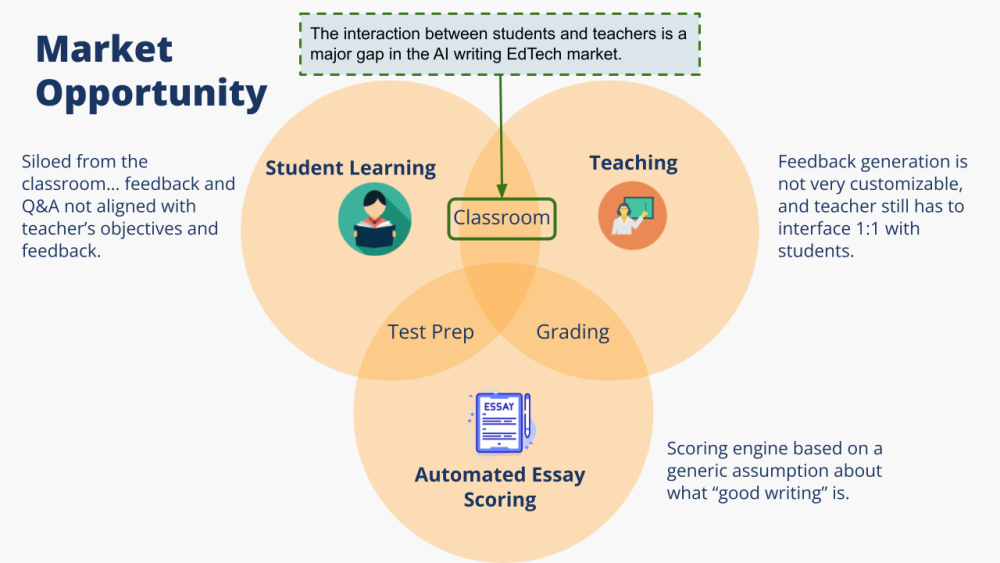

WriteAssist is a generative AI (GenAI) system built on large language models (LLMs) that addresses the writing-education challenges above with a classroom-aligned AI assistant. It automates personalized feedback and offers 1:1 student conferencing, with the goals of reducing teacher burnout and dissatisfaction and improving the quality of individualized student feedback. Teachers can upload rubrics, deliver detailed feedback, and use AI to generate responses that mirror their own feedback style, so students receive timely, actionable guidance. Students can hold 1:1 conferences with an AI model aligned to their teacher and receive personalized support.

Existing solutions in the marketplace typically serve only teachers and apply their own proprietary feedback models, which frustrates teachers who need more tailored tools. WriteAssist serves both teachers and students, and it supports extensive customization based on class documents, onboarding Q&A, and feedback samples.

The market for WriteAssist is substantial: the U.S. has over 49 million students and more than 4 million teachers. The U.S. education technology market is valued at approximately $80 billion and is expected to grow at a compound annual growth rate of over 11%. If WriteAssist were adopted by even 10% of U.S. schools, it could reach around 4.9 million students and 400,000 teachers.

We surveyed 5 teachers and 11 students about the WriteAssist concept. Teachers emphasized how time-consuming grading and individualized feedback are, and they see significant potential for AI to automate parts of this process. Student respondents said they would appreciate an AI tool like WriteAssist. Overall, our approach is designed to streamline teacher workloads, improve the quality of student feedback, and promote educational equity, especially in underfunded public schools where student-to-teacher ratios are high.

Data Source

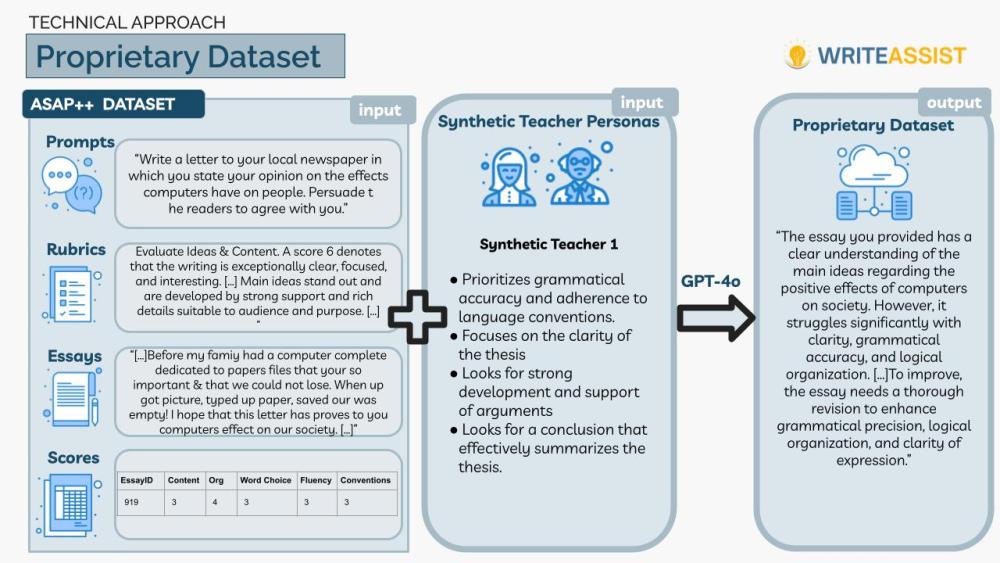

- The data sources for this project include publicly available datasets such as the ASAP (Automated Student Assessment Prize) dataset and ASAP++. These datasets provide essay texts, prompts, teacher-rated numeric scores, and rubric criteria.

- Synthetic Teacher Personas: Using the ASAP++ dataset, we created five distinct synthetic teacher personas, each with its own goals and teaching style.

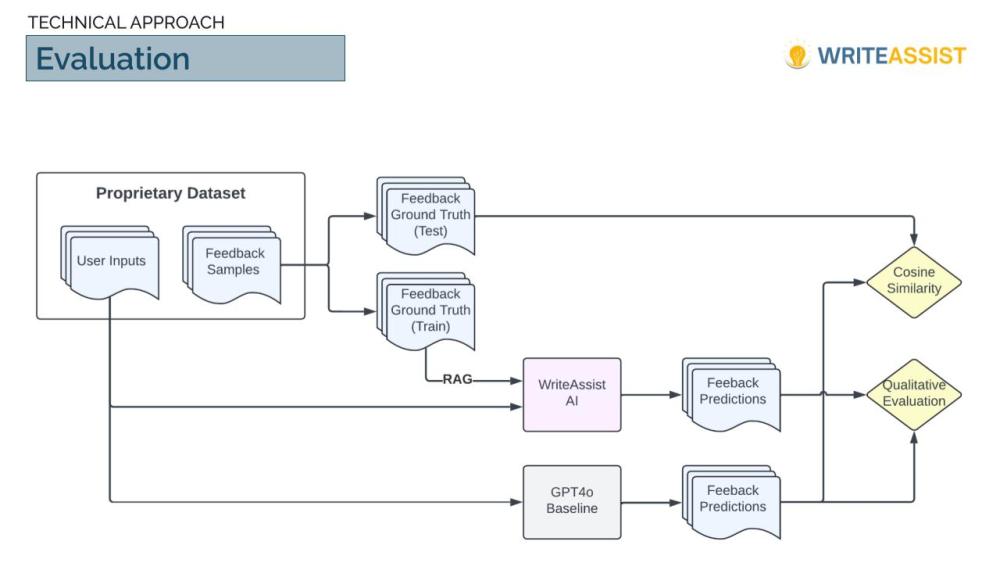

- Proprietary Dataset: We needed a written feedback dataset to train and test our models, but ASAP++ provides only numeric scores. We therefore combined the ASAP++ data with our synthetic teacher personas and prompted GPT-4o to generate ground-truth written feedback, producing a proprietary dataset for our task.
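The persona-augmentation step can be sketched as below. This is a minimal illustration under stated assumptions, not the prompt we actually used: the `TeacherPersona` fields, the trait names, and all example values are hypothetical, and the output is a message list in the common chat-completion format that would then be sent to GPT-4o.

```python
"""Sketch: combine one ASAP++-style record with a synthetic teacher persona
into a chat-style prompt for generating ground-truth written feedback.
All field names, trait names, and example values are hypothetical."""
from dataclasses import dataclass


@dataclass
class TeacherPersona:
    # Hypothetical persona fields (name, goals, teaching style).
    name: str
    goals: str
    style: str


def build_feedback_messages(persona: TeacherPersona, prompt: str, essay: str,
                            trait_scores: dict[str, int]) -> list[dict[str, str]]:
    """Return role/content messages in the common chat-completion format."""
    # Render the numeric trait scores so the LLM can ground its feedback in them.
    score_lines = "\n".join(f"- {trait}: {score}" for trait, score in trait_scores.items())
    system = (
        f"You are {persona.name}, a grade 6-12 writing teacher. "
        f"Your goals: {persona.goals}. Your feedback style: {persona.style}."
    )
    user = (
        f"Essay prompt:\n{prompt}\n\n"
        f"Student essay:\n{essay}\n\n"
        f"Rubric scores you assigned:\n{score_lines}\n\n"
        "Write feedback that explains these scores and suggests next steps."
    )
    return [{"role": "system", "content": system},
            {"role": "user", "content": user}]


# Hypothetical example persona and record.
persona = TeacherPersona(
    name="Ms. Rivera",
    goals="build confident argumentative writers",
    style="warm, concise, one concrete revision tip per trait",
)
messages = build_feedback_messages(
    persona,
    prompt="Should schools adopt a four-day week?",
    essay="I think schools should keep five days because...",
    trait_scores={"Content": 3, "Organization": 2, "Conventions": 3},
)
```

The model call is deliberately omitted so the sketch runs offline; in practice, each generated response would be stored alongside its essay and scores as one ground-truth example.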

Additionally, educational websites such as CommonLit, ReadWriteThink, Khan Academy, Purdue OWL, Writing Forward, and NoRedInk offer valuable content for training the AI model on writing concepts suitable for students in grades 6-12.

Data Science Approach

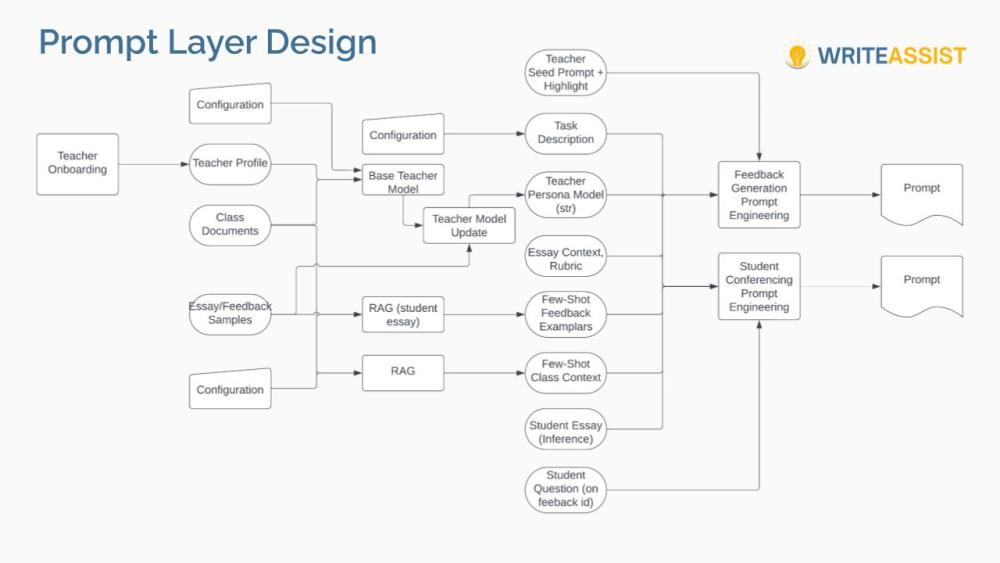

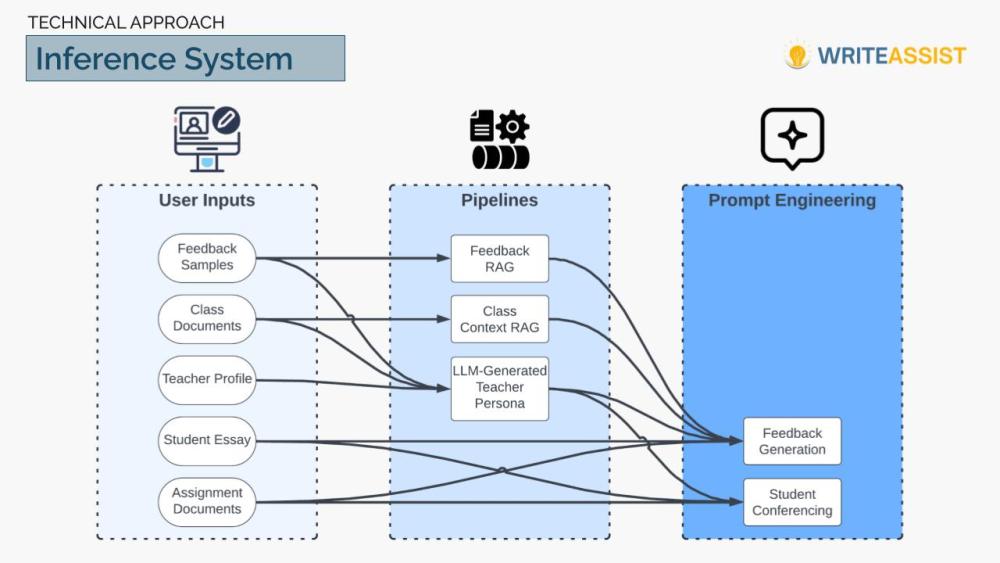

We developed a comprehensive experimentation system for WriteAssist to evaluate how modifying individual components affected overall system performance. We began by fine-tuning a pre-trained LLM on writing-specific datasets to improve its ability to provide meaningful feedback on student essays. We used retrieval-augmented generation (RAG) to incorporate relevant context, including student essays, rubrics, and feedback, into the model's responses to user queries. We also used prompt engineering to design inputs that guide the LLM toward precise, useful outputs for both feedback generation and student conferencing.
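The retrieval half of the RAG step can be sketched as follows, assuming a store of short context snippets such as rubric criteria and past feedback. Simple bag-of-words vectors stand in for a real embedding model, and `embed`, `retrieve`, and `build_prompt` are hypothetical helper names; this illustrates the technique rather than WriteAssist's actual pipeline.

```python
"""Sketch of RAG retrieval: rank stored context snippets against a query by
cosine similarity and prepend the top matches to the prompt. Bag-of-words
token counts stand in for a real embedding model."""
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in "embedding": lowercase token counts.
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Dot product over shared tokens, divided by the product of vector norms.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Return the k snippets most similar to the query.
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Place retrieved context ahead of the user's question.
    context = "\n\n".join(retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion:\n{query}"


# Hypothetical context store.
corpus = [
    "Rubric: Organization - essay has a clear introduction, body, and conclusion.",
    "Rubric: Conventions - spelling, grammar, and punctuation are correct.",
    "Past feedback: Your thesis is strong, but your conclusion repeats the introduction.",
]
query = "How can I improve the organization of my introduction and conclusion?"
top = retrieve(query, corpus)
prompt = build_prompt(query, corpus)
```

For this query, the Organization rubric line ranks first and the past-feedback line second, so those two snippets land in the prompt. Replacing `embed` with a learned embedding model leaves the ranking logic unchanged.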

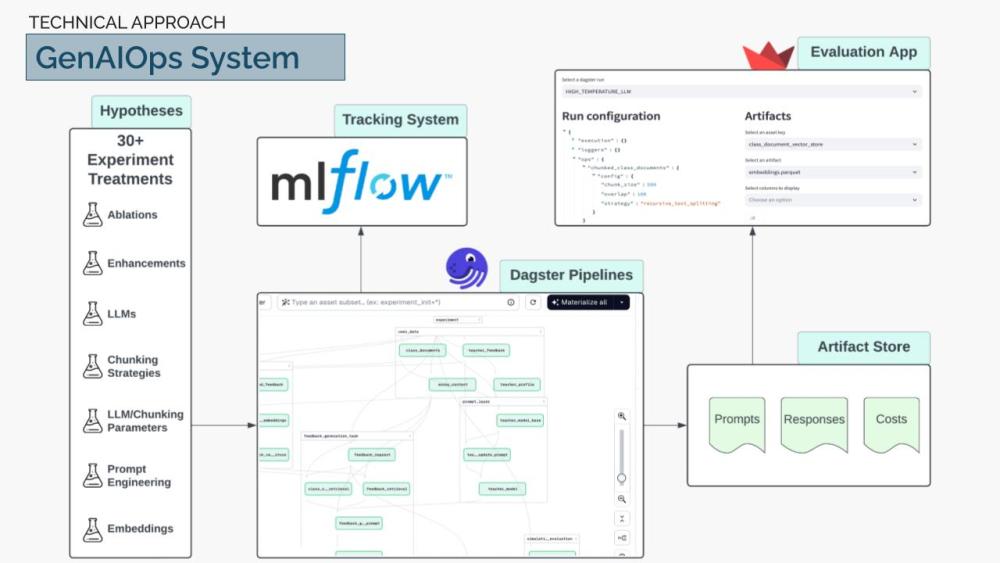

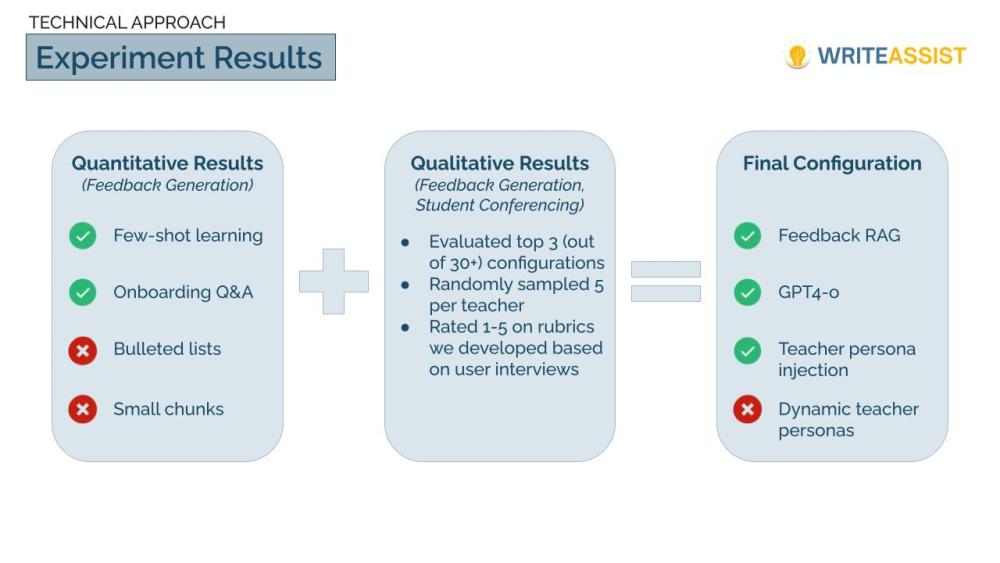

Our experimentation process involved testing different LLMs, prompting methods, and configurations to identify the most effective setup. This included assessing the impact of few-shot learning and teacher onboarding Q&A, and comparing various output formats. We applied GenAIOps practices, using tools such as Dagster, MLflow, and Streamlit, to ensure thorough traceability, explainability, and tracking of our AI experiments, which was crucial for administering experimental treatments to our end-to-end GenAI system. We logged key artifacts and explored them in a separate UI app to understand cost drivers, further explain the behavior of the LLMs and RAG pipelines, and conduct qualitative performance assessments. Through this system, we tested over 30 hypotheses to determine the optimal configuration for WriteAssist.
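One way to organize such treatments is a factorial grid in which every run's parameters are logged. The sketch below is hypothetical: the factor names, the placeholder model names, and the JSON log (standing in for an MLflow tracking server) are assumptions, and our 30+ hypotheses were not necessarily run as a full grid.

```python
"""Sketch: enumerate experimental treatments as a full factorial grid and
record each run's configuration. Factor names and levels are hypothetical
placeholders; a JSON line per run stands in for an MLflow tracking server."""
import itertools
import json

# Hypothetical factors mirroring the treatments described above.
FACTORS = {
    "llm": ["model-a", "model-b"],
    "prompting": ["zero_shot", "few_shot"],
    "onboarding_qa": [False, True],
    "output_format": ["paragraph", "bulleted"],
}


def treatment_grid(factors: dict[str, list]) -> list[dict]:
    """Return one config dict per combination of factor levels."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in itertools.product(*factors.values())]


def log_run(run_id: int, config: dict, metrics: dict) -> str:
    # Serialize params and metrics together so each run can be traced later.
    return json.dumps({"run_id": run_id, "params": config, "metrics": metrics}, sort_keys=True)


configs = treatment_grid(FACTORS)
# Metrics start empty; they would be filled in after evaluating each run.
log_lines = [log_run(i, cfg, {}) for i, cfg in enumerate(configs)]
```

With MLflow installed, each configuration would typically be recorded inside an `mlflow.start_run()` block via `mlflow.log_params(config)`, with evaluation scores added through `mlflow.log_metric`.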

Evaluation

We evaluated our system using both quantitative and qualitative methods. We used a traditional randomized train/test split of our synthetic feedback samples: the train set supplied few-shot examples to our RAG pipeline, and the test set was reserved for evaluation. For quantitative evaluation, we measured the cosine similarity between LLM-generated feedback and the corresponding ground-truth teacher feedback. For qualitative assessment, we scored samples from the top model configurations on a 1-5 scale, using a rubric developed from user interviews. We also conducted real-world and simulated testing, engaging with educational contacts and running simulations with student and teacher profiles to validate system performance. Our findings highlighted the importance of few-shot learning and teacher onboarding Q&A for achieving the best performance, and WriteAssist outperformed a GPT-4 baseline.
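A minimal sketch of the quantitative protocol, assuming a list of (essay, reference feedback) pairs: a seeded random split, few-shot examples drawn only from the train split, and mean cosine similarity on the test split. Bag-of-words vectors stand in for whatever text representation the project used, and all function names here are hypothetical.

```python
"""Sketch of the evaluation protocol: randomized train/test split, few-shot
examples taken only from the train split, and mean cosine similarity between
predicted and reference feedback. Bag-of-words vectors are a stand-in."""
import math
import random
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a: str, b: str) -> float:
    va, vb = vectorize(a), vectorize(b)
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm = math.sqrt(sum(v * v for v in va.values())) * math.sqrt(sum(v * v for v in vb.values()))
    return dot / norm if norm else 0.0


def train_test_split(pairs: list, test_frac: float = 0.2, seed: int = 0) -> tuple[list, list]:
    # Shuffle a copy with a fixed seed so the split is reproducible.
    shuffled = pairs[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_frac))
    return shuffled[n_test:], shuffled[:n_test]


def mean_similarity(predictions: list[str], references: list[str]) -> float:
    # Average pairwise cosine similarity over the test set.
    scores = [cosine_similarity(p, r) for p, r in zip(predictions, references)]
    return sum(scores) / len(scores)


# Hypothetical placeholder data.
pairs = [(f"Essay {i} text", f"Reference feedback {i}") for i in range(10)]
train, test = train_test_split(pairs, test_frac=0.2)
few_shot_examples = train[:3]  # prompts may only draw on the train split
```

Predictions for the `test` essays would come from the configured LLM and be scored against their references with `mean_similarity`; keeping few-shot examples out of the test split prevents the model from seeing the answers it is graded on.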

Key Learnings and Impact

Our project yielded several key outcomes. We developed teacher AI models for 1:1 student conferencing and built an end-to-end GenAIOps concept. By combining elements of machine learning theory, MLOps, and GenAI, we created a GenAIOps framework that optimizes system components in a scalable, transparent, and cost-efficient manner.

WriteAssist highlights the potential of GenAI for addressing critical challenges in writing education. Our MVP demonstrated a dual-interface system for teachers and students that enables personalized feedback and continuous AI learning to strengthen the feedback loop. With GenAI, we demonstrated the capability to address teacher burnout while improving individualized student feedback. The project differentiated itself by addressing teacher and student needs simultaneously, using fine-tuned LLMs, RAG, and prompt engineering to provide tailored, effective feedback.

The market opportunity for WriteAssist in the U.S. is substantial, particularly in public schools with high student-to-teacher ratios. With 49 million public school students and over 4 million teachers, WriteAssist has the potential to significantly impact middle and high school classrooms in overcrowded and under-resourced environments. By reducing teacher grading hours, increasing job satisfaction, and improving student writing proficiency, WriteAssist aims to enhance student engagement and academic growth through personalized, meaningful feedback.