Carbon AIQ

Empowering individuals and organizations with transformative carbon offset data that drives actionable insights and fosters sustainability.

What is Carbon Offset?

A carbon offset is a way to reduce greenhouse gas emissions by investing in projects that lower or remove CO2 and other greenhouse gases from the atmosphere.

There are two types of carbon offset markets: compliance and voluntary. Compliance markets are government-regulated, setting emission caps for specific industries. Voluntary markets are open to anyone wanting to offset their carbon footprint. Our product focuses on the emerging but rapidly expanding voluntary market, which has grown five-fold in three years and is expected to triple by 2030.

Problem and Motivation

The voluntary market faces credibility issues due to fragmented and inaccessible data spread across various registries and formats. This hinders thorough investigations and monitoring by researchers, regulators, journalists, and consumers, undermining the integrity of the growing carbon offsets industry.

The Berkeley Carbon Trading Project (BCTP), an initiative of the Center for Environmental Public Policy (CEPP), introduced the Voluntary Registry Offsets Database (VROD), but maintaining this data manually in Excel is time-consuming and error-prone.

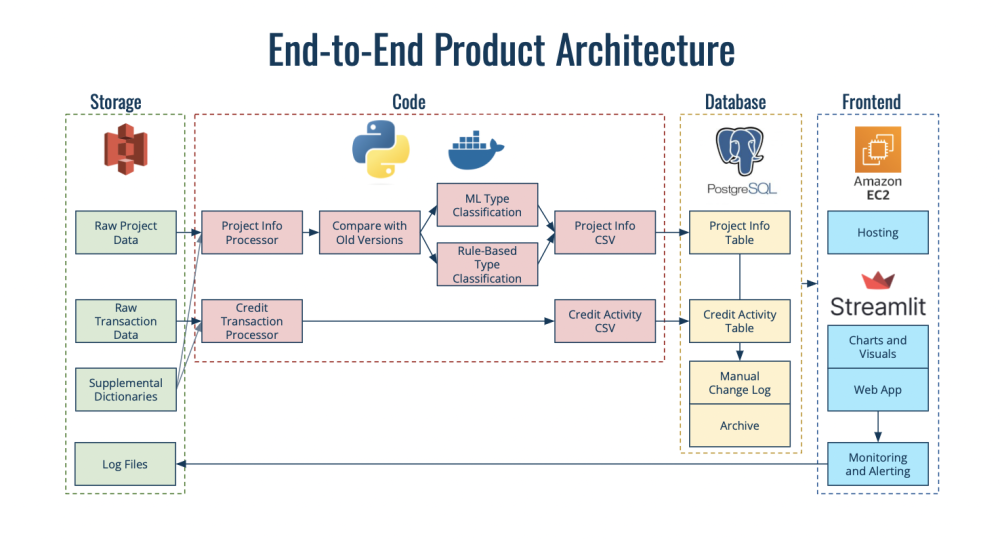

Carbon AIQ addresses these issues by integrating data from all six registries into two databases, one for project details and one for credit activities. It systematically classifies projects into types, so that specific kinds of projects, such as cookstove or wind projects, can be identified worldwide. It also translates the VROD Excel visuals into an intuitive interface, enabling easy exploration and analysis of carbon offset data.

By leveraging cloud technology, NLP, and advanced data engineering, Carbon AIQ tracks project information and the lifecycle of carbon credits, reducing manual effort and providing invaluable insights. This empowers the creation of impactful environmental solutions, advancing the fight against climate change.

Data Source

Our data consists of raw files downloaded from six voluntary registries: the American Carbon Registry, Climate Action Reserve, Gold Standard, Verified Carbon Standard, the California Air Resources Board (ARB), and the Washington Department of Ecology. It covers roughly 10,000 carbon offset projects classified into 78 Project Types, along with about 110K credit transactions, and supports project-type classification, carbon credit lifecycle analysis, and other potential research inquiries. Links to the registries are listed below.

- American Carbon Registry - https://acrcarbon.org/

- Climate Action Reserve - https://www.climateactionreserve.org/

- Gold Standard - https://www.goldstandard.org/

- Verified Carbon Standard - https://registry.verra.org/

- California Air Resources Board (ARB) - https://ww2.arb.ca.gov/our-work/programs/compliance-offset-program

- Washington Department of Ecology - https://ecology.wa.gov/Air-Climate/Climate-Commitment-Act/Cap-and-invest/Offsets

Methodology

Data Pipeline

Our inputs are raw CSV and Excel files downloaded from each offset project registry and uploaded to S3. Each registry provides separate files for projects and for credit activities such as issuances, cancellations, and retirements. Our data pipeline processes these files into cleaned data with common fields for individual projects and credit transactions. The preprocessed output covers 9,087 projects and 78 Project Types, with 40 features such as Project ID, Project Name, Voluntary Registry, Project Type, Methodology, and time series data on carbon credit activities.
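To make the harmonization step concrete, here is a minimal sketch of mapping registry-specific columns onto common fields. The registry keys, column headers, and common field names are hypothetical placeholders, not the actual registry export formats or our production schema, and the sample rows are invented for illustration.

```python
import csv
import io

# Hypothetical column mappings: registry-specific header -> common field name.
# Real registry exports use their own headers; these are illustrative only.
COLUMN_MAPS = {
    "registry_a": {
        "Project ID": "project_id",
        "Project Name": "project_name",
        "Project Type": "registry_type",
    },
    "registry_b": {
        "ID": "project_id",
        "Name": "project_name",
        "Category": "registry_type",
    },
}


def harmonize(registry, raw_csv_text):
    """Map one registry's project rows onto the common field names."""
    mapping = COLUMN_MAPS[registry]
    rows = []
    for raw in csv.DictReader(io.StringIO(raw_csv_text)):
        # Rename each source column and trim stray whitespace.
        row = {common: raw[source].strip() for source, common in mapping.items()}
        # Record where the row came from so registries can be told apart later.
        row["voluntary_registry"] = registry
        rows.append(row)
    return rows


# Invented sample inputs standing in for files read from storage.
registry_a_csv = "Project ID,Project Name,Project Type\nA-1, Wind Farm One ,Wind\n"
registry_b_csv = "ID,Name,Category\nB-7,Clean Cookstoves,Energy Efficiency\n"

projects = harmonize("registry_a", registry_a_csv) + harmonize("registry_b", registry_b_csv)
```

After this step every row carries the same keys regardless of its source registry, which is what allows downstream classification and dashboards to treat the data as one table.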

The objective is to assign each project its correct Project Type.

Rule-based Text Classification

Our BCTP partners apply a rule-based system manually through Excel lookup functions. Following a rules document, it checks whether certain fields equal or contain certain strings and outputs a project type. We error-proofed and automated this system, cutting hours of rule-checking on 9K projects to a 5-minute run. Nearly 5.2K projects, or 57%, were covered by this system. It also helped with class imbalance: the rules fully covered 14 classes that our EDA showed to have fewer than 50 projects each.
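A rule check of this kind can be sketched as follows. This is an illustration under assumptions, not the BCTP rules document: the field names, patterns, and rule ordering are hypothetical.

```python
# Hypothetical rules: (field, operator, lowercase pattern, project type).
# The real rules document is maintained by BCTP and is far larger.
RULES = [
    ("methodology", "equals", "example-methodology-01", "Landfill Methane"),
    ("project_name", "contains", "cookstove", "Cookstoves"),
    ("project_name", "contains", "wind", "Wind"),
]


def classify(project, rules=RULES):
    """Return the project type of the first matching rule, or None if no rule applies."""
    for field, operator, pattern, project_type in rules:
        value = project.get(field, "").lower()
        if operator == "equals" and value == pattern:
            return project_type
        if operator == "contains" and pattern in value:
            return project_type
    return None


sample_projects = [
    {"project_name": "Improved Cookstove Program", "methodology": "other"},
    {"project_name": "Hillside Wind Farm", "methodology": "other"},
    {"project_name": "Forest Conservation Area", "methodology": "other"},
]
labels = [classify(p) for p in sample_projects]

# Share of projects that at least one rule could label.
coverage = sum(label is not None for label in labels) / len(labels)
```

Projects that come back as `None` are the ones no rule covers. Because the first matching rule wins in this sketch, rule order decides the outcome whenever two rules match the same project.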

While the automated rule-based system worked well for the researchers, it had significant limitations:

- Nearly 4K projects were classified by eye, without even Excel. This was labour-intensive, prone to errors, and needed several rounds of review

- As the number of rules increased, so did the risks of rule collisions and project-type misclassifications

- The rules themselves required constant evolution due to ongoing changes in methodologies

This is where ML/AI plays a vital role in automated classification.

ML/AI Text Classification

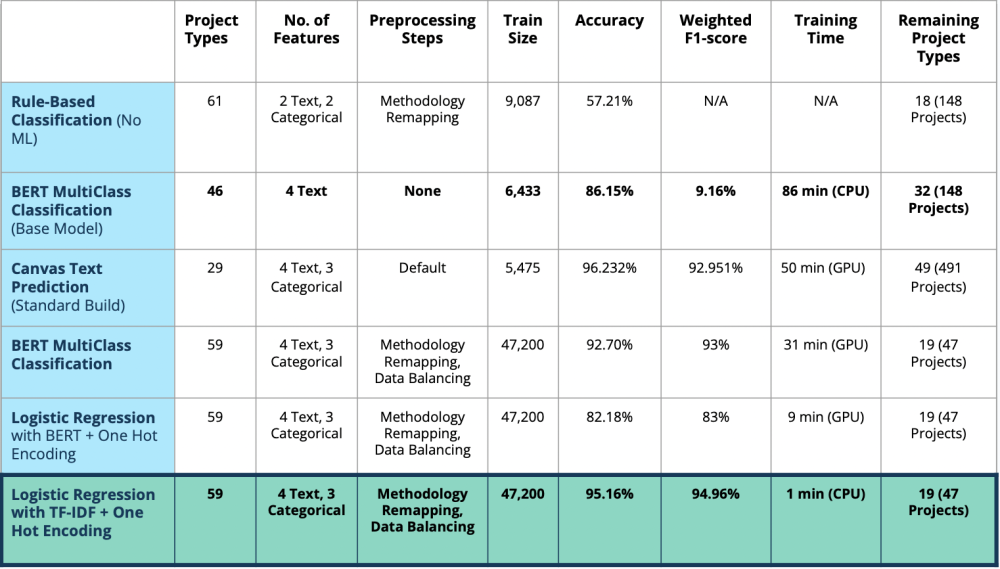

The label, Project Type, was highly imbalanced: the largest class had 1,400 records and the smallest just 1. For ML/AI we therefore selected F1 score, precision, recall, and accuracy as our evaluation metrics.
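The reason accuracy alone is not enough on imbalanced labels can be shown with a small worked example. The labels below are invented for illustration, and the helper functions are a plain-Python sketch of the standard per-class precision, recall, and F1 definitions rather than the code we used.

```python
from collections import Counter


def per_class_scores(y_true, y_pred):
    """Return {label: (precision, recall, f1)} for every label in y_true."""
    scores = {}
    for label in set(y_true):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[label] = (precision, recall, f1)
    return scores


def weighted_f1(y_true, y_pred):
    """Average the per-class F1 scores, weighted by each class's support."""
    support = Counter(y_true)
    scores = per_class_scores(y_true, y_pred)
    return sum(scores[label][2] * n for label, n in support.items()) / len(y_true)


# Invented example: nine Wind projects, one Cookstoves project,
# and a model that always predicts the majority class.
y_true = ["Wind"] * 9 + ["Cookstoves"]
y_pred = ["Wind"] * 10

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
scores = per_class_scores(y_true, y_pred)
overall_f1 = weighted_f1(y_true, y_pred)
```

Here accuracy is 0.9 even though the minority class is never predicted correctly. The per-class scores expose that failure (F1 of 0 for Cookstoves), which is why we report them alongside accuracy.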

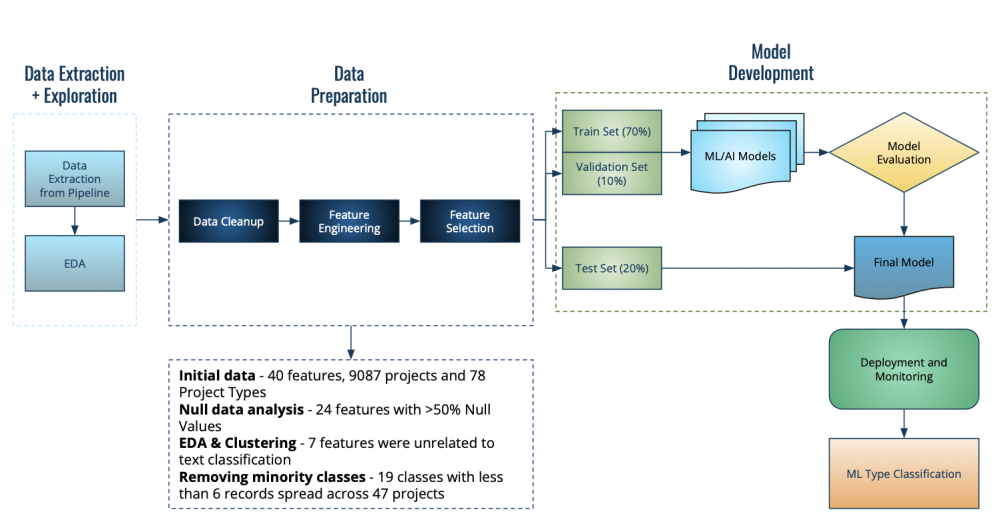

For this extreme multiclass classification problem, we worked in three layers.

The data preparation layer involved initial exploration, feature engineering, and feature selection. The processed data was split into training, validation, and test sets in a 70:10:20 ratio.

The model development layer involved balancing the data by undersampling the majority classes and oversampling the minority classes, followed by experimentation with various text classification large language models and machine learning algorithms.

Finally, the deployment layer involved evaluating the best-performing model on a blind test set before deploying it back into our data pipeline for further processing.
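The splitting and balancing steps can be sketched as below. Several details are assumptions made for illustration: the shuffle-based split, resampling every class to one fixed `target` size, applying the resampling to the training set only, and the invented records themselves.

```python
import random
from collections import Counter, defaultdict


def split_70_10_20(records, seed=0):
    """Shuffle and split records into train, validation, and test sets (70:10:20)."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 70 // 100
    n_val = len(shuffled) * 10 // 100
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


def rebalance(records, target, seed=0):
    """Resample so that every class ends up with exactly `target` records."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for record in records:
        by_class[record["project_type"]].append(record)
    balanced = []
    for items in by_class.values():
        if len(items) >= target:
            # Undersample a majority class without replacement.
            balanced.extend(rng.sample(items, target))
        else:
            # Oversample a minority class by repeating records at random.
            balanced.extend(items + rng.choices(items, k=target - len(items)))
    return balanced


# Invented, heavily imbalanced data set: 90 Wind projects and 10 Cookstoves projects.
records = [{"id": i, "project_type": "Wind"} for i in range(90)]
records += [{"id": 90 + i, "project_type": "Cookstoves"} for i in range(10)]

train, val, test = split_70_10_20(records)
balanced_train = rebalance(train, target=20)
class_counts = Counter(r["project_type"] for r in balanced_train)
```

Only the training set is resampled in this sketch, so the validation and test sets keep the original class distribution and the reported metrics reflect real-world imbalance.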

Evaluation

Our evaluation process involves testing and validating the best model using blind test data. Among all the models, the Logistic Regression model with TF-IDF vectorization on text features and one-hot encodings on categorical features gave us the best results. It achieved an accuracy and weighted F1 score of 95%. Additionally, this model was the lightest to compute, with a training time of under a minute.
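A model of this shape can be assembled with scikit-learn as in the minimal sketch below. It assumes pandas and scikit-learn are installed. The column names, the tiny invented training set, and the hyperparameters are illustrative assumptions, not our actual features or settings.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder

# Invented training data with one text feature and one categorical feature.
train = pd.DataFrame(
    {
        "project_name": [
            "Wind farm north",
            "Wind farm south",
            "Coastal wind park",
            "Cookstove program east",
            "Cookstove program west",
            "Improved cookstove rollout",
        ],
        "voluntary_registry": ["A", "B", "A", "A", "B", "A"],
        "project_type": ["Wind", "Wind", "Wind", "Cookstoves", "Cookstoves", "Cookstoves"],
    }
)

features = ColumnTransformer(
    [
        # TF-IDF expects a 1-D column of strings, so the column is named as a plain string.
        ("text", TfidfVectorizer(), "project_name"),
        # One-hot encoding expects 2-D input, so the column is given as a list.
        ("categorical", OneHotEncoder(handle_unknown="ignore"), ["voluntary_registry"]),
    ]
)

model = Pipeline(
    [
        ("features", features),
        ("classifier", LogisticRegression(max_iter=1000)),
    ]
)
model.fit(train[["project_name", "voluntary_registry"]], train["project_type"])

new_projects = pd.DataFrame(
    {
        "project_name": ["Hillside wind farm", "Rural cookstove program"],
        "voluntary_registry": ["B", "A"],
    }
)
predictions = model.predict(new_projects)
```

Keeping the vectorizer, encoder, and classifier in a single pipeline means the same transformations fitted on the training data are applied unchanged at prediction time.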

Our root cause error analysis identified three main reasons for the misclassifications:

- Low training data for minority classes (as few as 4 records)

- Semantic similarities between project types (e.g., Solar Lightings vs. Lightings)

- Keyword variations in project names and methodologies (e.g., Bio and Methane), which led to incorrect predictions between Biodigester and Methane Digester

These errors are being further analyzed by BCTP to validate if similar project types can be combined.

In conclusion, by combining the strengths of automated rule-based systems and ML/AI predictions, we want to empower our users to focus on what matters most: enabling action and driving sustainability.

Key Learnings & Impact

We believe this data product demonstrates the power of data. It gives stakeholders, green energy warriors, researchers, and policymakers access to all of the data in one place, in a single common operating language, with impactful visuals ready for near-immediate use. When data sits in silos, developing end-state products from it is difficult and laborious. With an automated and connected data pipeline, BCTP and now Carbon AIQ have made carbon offset project data and knowledge easier to access. Our largest learning point was that complex harmonization can be automated with a balance of human-developed rules and AI. When data is imbalanced, rule-based algorithms can be leveraged for higher fidelity. Trust in jargon is key, and through advanced AI experimentation we could identify where humans would need to be in the loop. By automating human logic, a more complete dataset can be created.

Our Contribution

- Automated Data Pipeline: We reduced over 150 hours of manual deployment to just 30 hours for BCTP by implementing an automated data pipeline.

- Efficient and Accurate Classification: We achieved precise classification of 69 project types using a combination of rule-based methods and ML/AI techniques.

- High Efficiency: We deployed a machine learning model that is both fast and optimized for low-compute environments, ensuring high efficiency.

- Enhanced User Interface: Carbon AIQ transformed over 20 Excel-based charts into interactive, web-based dashboards, delivering an enhanced user experience.

- Ready-to-Deploy Product: Carbon AIQ is fully deployable on BCTP's platform, serving its 1,400 users, and is scalable to handle 100 times more projects and 10 times more users.

Acknowledgements

We give our thanks to our Capstone instructors, Joyce Shen and Korin Reid, who provided us with valuable feedback and recommendations throughout the length of our capstone project development.

Furthermore, we thank our stakeholders, Dr. Barbara Haya and Aline Abayo, from the Berkeley Carbon Trading Project (BCTP). Dr. Haya created the BCTP carbon offset database to support researchers by providing a harmonized carbon offset database in Excel. Through her initial work and her continued guidance, the team was able to translate and enhance this tool into our capstone data product. They also provided us with a 1,400-person user base, for which we strove to build a user-friendly, intuitive, and trustworthy data product.