SynapsePatents: AI-Driven Multi-Modal Retrieval-Augmented Generation for Advanced Patent Analytics

Problem and Motivation

The growing complexity and sheer volume of patent documents make it hard for users to extract relevant information efficiently. Patent texts are often lengthy, filled with technical and legal jargon, and organized in dense formats, making it difficult for both experts and non-experts to quickly grasp their key aspects. Traditional information retrieval methods, including manual searches and keyword-based queries, are labor-intensive and inefficient, leading to significant time and cost expenditures.

Additionally, the financial risks associated with patent-related issues are considerable. Developing new technologies or products without awareness of existing patents can lead to costly legal disputes and infringement lawsuits, which may result in substantial legal fees, damages awards, and injunctions. Companies may also incur significant development costs if their innovations overlap with patented technologies, leading to wasted R&D investments and missed market opportunities.

Our Mission

Accelerate Global Innovation with Advanced Patent Analytics

Our solution will revolutionize how individuals and businesses interact with patent information. By providing precise, contextually relevant answers to natural language queries, the system will democratize access to complex patent data, reducing the time and effort required to navigate dense documents. This will enable more effective and efficient innovation by allowing inventors and researchers to quickly ascertain whether their ideas are novel, thereby mitigating the risk of costly patent infringement and promoting a more informed approach to product development.

Market Analysis

The market for patent analytics and information retrieval solutions is substantial and growing, driven by increasing patent filings and the need for efficient intellectual property management. The global patent analytics market size was valued at USD 1.00 billion in 2023. The market is projected to grow from USD 1.12 billion in 2024 to USD 3.02 billion by 2032, exhibiting a CAGR of 13.2% during the forecast period (Source). This growth is fueled by the rising complexity of patent portfolios and the increasing need for businesses to navigate the competitive intellectual property landscape. With industries from pharmaceuticals to technology investing heavily in R&D, the demand for advanced question-answering (QA) systems that streamline patent information retrieval presents a significant opportunity for market expansion.
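As a quick arithmetic check on the projection above, the compound annual growth rate implied by growth from USD 1.12 billion (2024) to USD 3.02 billion (2032) over eight years can be computed directly. This sketch only reproduces the quoted figures; it makes no independent market claim.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market projection quoted in the text (USD billions), 2024 -> 2032.
rate = cagr(1.12, 3.02, 2032 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 13.2%
```

The result agrees with the 13.2% CAGR stated in the source.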

Data Source

We used the Google Patents Public Datasets, a publicly available and comprehensive collection of patent information curated by Google. It is a vast resource, containing approximately 3.7 million patents filed in the United States over the past decade and totaling around 1.2 terabytes. Because of the dataset's scale, analyzing it in its entirety with our available resources was impractical. To keep the study focused, we selected a targeted subset concentrated on the high-volume Cooperative Patent Classification (CPC) class G06. This class covers computing, calculating, and data processing, areas central to modern technology, including artificial intelligence, machine learning, and data management. We analyzed G06 patents granted between January 2022 and December 2023. This subset of approximately 170,000 patents provided a manageable and highly relevant dataset for uncovering recent trends in computing and data-processing innovation.
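The selection criteria above amount to a simple filter on CPC code and grant date. The sketch below is a minimal Python illustration, not our actual query: in practice this filter would run as SQL in BigQuery, and the record layout used here (an integer `grant_date` in YYYYMMDD form and a `cpc_codes` list) is an assumption. The publication numbers are placeholders.

```python
from datetime import date


def in_subset(patent: dict, cpc_prefix: str = "G06",
              start: date = date(2022, 1, 1), end: date = date(2023, 12, 31)) -> bool:
    """True if any CPC code falls under `cpc_prefix` and the grant date is in range.

    Assumed (hypothetical) layout: grant_date is an int like 20220315,
    cpc_codes is a list of strings like "G06F16/33".
    """
    raw = str(patent["grant_date"])
    granted = date(int(raw[:4]), int(raw[4:6]), int(raw[6:8]))
    has_class = any(code.startswith(cpc_prefix) for code in patent["cpc_codes"])
    return has_class and start <= granted <= end


patents = [
    {"publication_number": "US-EXAMPLE-1", "grant_date": 20220315, "cpc_codes": ["G06F16/33"]},
    {"publication_number": "US-EXAMPLE-2", "grant_date": 20210610, "cpc_codes": ["G06N3/08"]},
    {"publication_number": "US-EXAMPLE-3", "grant_date": 20230901, "cpc_codes": ["H04L9/32"]},
]
subset = [p["publication_number"] for p in patents if in_subset(p)]
print(subset)  # ['US-EXAMPLE-1']
```

The second record is excluded by its 2021 grant date and the third by its non-G06 class.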

Methodology

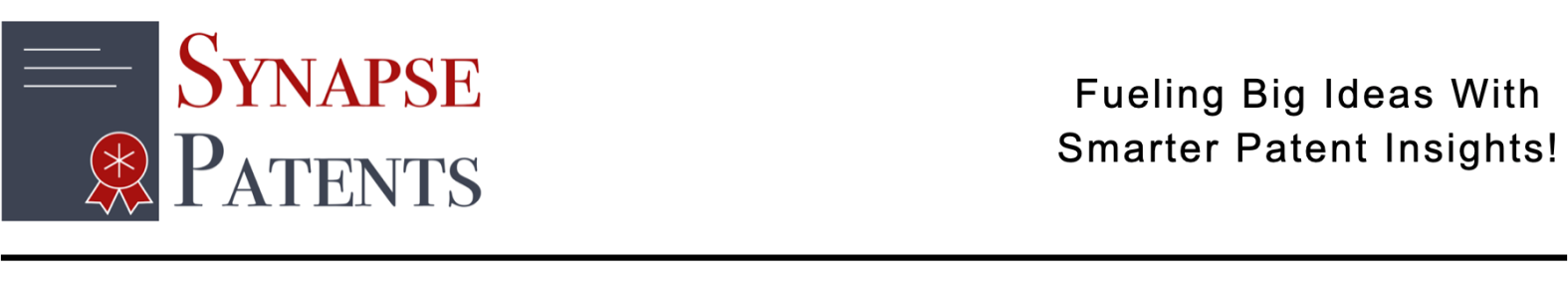

Data Pre-processing

The data pre-processing pipeline begins with patent metadata stored in Google BigQuery. This data undergoes several transformations:

- GCS Storage: The patent metadata is extracted and stored in Google Cloud Storage using the Parquet file format, which is optimized for efficient data storage and retrieval.

- Data Loading: The Parquet files are loaded into the system for further processing.

- Text Processing: The loaded data is processed to extract summary text, equations, and tables from the patent documents.

- Data Chunking: The processed information is then divided into smaller, manageable chunks to facilitate efficient processing and retrieval.

- Embedding Generation: Using Google's BigBird model, whose sparse attention lets it encode input sequences of up to 4,096 tokens, the chunked text is converted into dense vector embeddings that capture its semantic meaning.

- Vector Storage: The generated embeddings are stored in a vector database (Qdrant) for quick similarity searches.
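The write-up does not state the chunk size, the overlap, or whether chunks are measured in words or tokens. The sketch below assumes a word-based sliding window with hypothetical defaults (200 words, 50-word overlap); a production pipeline would more likely count tokens with the embedding model's own tokenizer.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of `chunk_size` words, each sharing
    `overlap` words with the previous window (hypothetical parameters)."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(10))
print(chunk_text(text, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

The overlap keeps a sentence that straddles a boundary visible in both neighboring chunks, which tends to help retrieval at the cost of some duplicated storage.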

User Interaction and Query Processing

The second part of the diagram shows how user queries are processed:

- User Interface: Users interact with the system through a SynapsePatents Chatbot.

- Query Processing: User queries are passed to the Mistral AI system, which performs two key functions:
  - Keyword extraction and action determination
  - Formulation of database retrieval strategy

- Vector DB Retrieval: Based on the Mistral AI output, a Vector DB Retriever searches the Qdrant database using cosine similarity ranking to find relevant patent information.

- Document Retrieval: The system retrieves three types of information:
  - Keywords and titles (40% of retrieved documents)
  - Chunked text (60% of retrieved documents)
  - Complete metadata (100% of retrieved documents)

- Response Generation: The retrieved documents, along with an instruction prompt and the original user query, are fed back into the Mistral AI system. This generates a final response, which is then presented to the user.

- Feedback Loop: The system includes a feedback mechanism, allowing the user's interaction to potentially influence future queries and responses.
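In the deployed system Qdrant performs the similarity search itself; the sketch below only illustrates what cosine-similarity ranking computes. The 40%/60% split in the retrieval list is open to interpretation, and this sketch assumes it means that, of `k` results, 40% come from a keyword/title index and 60% from the chunk index, with full metadata then fetched for every hit. The function names, the in-memory dict indexes, and `k` are all hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot(a, b) / (|a| * |b|); 0.0 if either vector is zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int) -> list[str]:
    """Return the ids of the k stored vectors most similar to `query`."""
    ranked = sorted(index, key=lambda doc_id: cosine(query, index[doc_id]), reverse=True)
    return ranked[:k]


def retrieve(query: list[float], title_index: dict[str, list[float]],
             chunk_index: dict[str, list[float]], k: int = 10,
             title_share: float = 0.4) -> list[str]:
    """Hypothetical 40/60 split: `title_share` of the k results come from
    the keyword/title index, the rest from the chunk index."""
    n_titles = round(k * title_share)
    return top_k(query, title_index, n_titles) + top_k(query, chunk_index, k - n_titles)


titles = {"t1": [1.0, 0.0], "t2": [0.0, 1.0], "t3": [0.7, 0.7]}
chunks = {"c1": [0.9, 0.1], "c2": [0.1, 0.9], "c3": [1.0, 1.0], "c4": [-1.0, 0.0]}
print(retrieve([1.0, 0.0], titles, chunks, k=5))  # ['t1', 't3', 'c1', 'c3', 'c2']
```

A brute-force scan like this is linear in the index size; a vector database such as Qdrant uses approximate nearest-neighbor indexing to keep search fast at the scale of hundreds of thousands of patents.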

Model Evaluation

Because no ground-truth data was available, we generated an evaluation dataset with an existing keyword-based patent search platform. We selected a random sample of 150 patents from our dataset and extracted each patent's abstract. Using ChatGPT, we generated keywords from these abstracts to form search queries, then used those keywords to search for similar patents on LENS.org, a patent search platform. We reviewed the results and exported the publication numbers of the retrieved patents as ground truth for evaluation.
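The write-up does not say how retrieved patents were scored against this ground truth. One natural option, sketched here under that assumption, is recall@k: the share of a query's ground-truth publication numbers that appear in the system's top-k results, averaged over the query set. The publication numbers below are placeholders.

```python
def recall_at_k(retrieved: list[str], ground_truth: set[str], k: int) -> float:
    """Fraction of ground-truth publication numbers present in the top-k results."""
    if not ground_truth:
        return 0.0
    return len(set(retrieved[:k]) & ground_truth) / len(ground_truth)


def mean_recall_at_k(runs: list[tuple[list[str], set[str]]], k: int) -> float:
    """Average recall@k over (retrieved, ground_truth) pairs, one pair per query."""
    if not runs:
        return 0.0
    return sum(recall_at_k(r, gt, k) for r, gt in runs) / len(runs)


runs = [
    (["US-1", "US-9", "US-3"], {"US-1", "US-3", "US-5", "US-7"}),  # 2 of 4 found
    (["US-2", "US-8", "US-6"], {"US-8"}),                           # 1 of 1 found
]
print(mean_recall_at_k(runs, k=3))  # 0.75
```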

Our final metric is a weighted combination of four RAGAS metrics, which together evaluate the quality and effectiveness of our chatbot.

Context Precision (15% weight): This measures how relevant the retrieved context is to the question, rewarding retrieval that ranks relevant chunks above irrelevant ones. Higher precision means less noise in the context passed to the model.

Context Recall (35% weight): This component has the highest weight in the formula, emphasizing the importance of comprehensive answers. It measures how much of the information in the reference (ground-truth) answer is supported by the retrieved context, that is, whether retrieval surfaced everything needed for a complete answer.

Faithfulness (25% weight): This measures how factually consistent the AI's answer is with the retrieved context, penalizing claims that contradict the context or cannot be inferred from it.

Answer Relevancy (25% weight): This assesses how well the AI's response addresses the specific query or task at hand.

The weighting prioritizes comprehensiveness, as reflected in the highest weight (35%) going to Context Recall: the metric rewards retrieval that gathers all the information needed for a thorough answer. Comprehensiveness is balanced against precision, faithfulness, and relevancy, so that answers are not only complete but also accurate, grounded in the retrieved context, and directly responsive to the query.
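The combined score is S = 0.15 · CP + 0.35 · CR + 0.25 · F + 0.25 · AR, where each component is a RAGAS score in [0, 1]. A minimal sketch follows; the dictionary keys mirror RAGAS's metric names, and the example scores are made-up inputs, not our evaluation results.

```python
# Weights from the text; they sum to 1.0.
WEIGHTS = {
    "context_precision": 0.15,
    "context_recall": 0.35,
    "faithfulness": 0.25,
    "answer_relevancy": 0.25,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine per-metric scores (each in [0, 1]) into a single weighted score."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise KeyError(f"missing metric scores: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


# Illustrative inputs only.
example = {"context_precision": 0.8, "context_recall": 0.6,
           "faithfulness": 0.9, "answer_relevancy": 0.7}
print(round(weighted_score(example), 3))  # 0.73
```

Because the weights sum to 1.0, the combined score stays on the same [0, 1] scale as its components, which makes model variants directly comparable.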

In our evaluation, we focused on Mistral 7B Instruct and Llama 3.1 8B Instruct as potential candidates. We assessed both models using the final weighted metric, examining their 4-bit and 8-bit quantized versions. After a comprehensive analysis, Mistral 7B Instruct with 8-bit quantization emerged as the strongest choice, outperforming the other variants and demonstrating the best overall capabilities for our intended application.

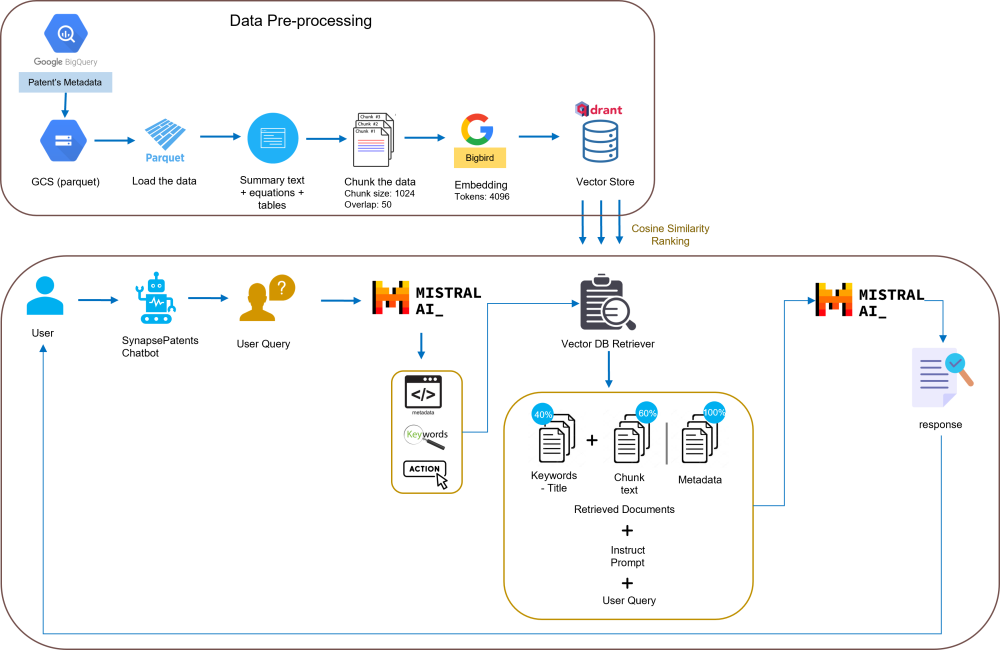

Deployment

The system is accessible through a web interface at synapsepatents.com, built as an Angular-based chatbot user interface. Traffic is routed through AWS Route 53 and Elastic Load Balancing, with TLS certificates managed by AWS Certificate Manager. A FastAPI application server, containerized with Docker, acts as the core backend. It queries the vector store for retrieval and connects to Mistral 7B Instruct, hosted on an AWS SageMaker model endpoint that is exposed through AWS API Gateway and AWS Lambda for serverless compute. AWS Cognito handles user authentication. This design emphasizes scalability, security, and efficient request handling through its microservices-based approach and integration with AWS-native services.
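The Lambda layer between API Gateway and the SageMaker endpoint can be sketched as a small handler. This is an illustration under stated assumptions, not our deployed code: the endpoint name is hypothetical, the request body fields (`question`, `context`) are an assumed contract with the FastAPI backend, and the `{"inputs": ..., "parameters": ...}` payload and `generated_text` response assume a Hugging Face text-generation container. The model call is injected so the handler can be exercised without AWS.

```python
import json
from typing import Callable

# Hypothetical endpoint name; replace with the deployed SageMaker endpoint.
ENDPOINT_NAME = "mistral-7b-instruct-endpoint"


def build_payload(question: str, context: str, max_new_tokens: int = 512) -> dict:
    """Assumed request format of a Hugging Face text-generation container,
    using Mistral Instruct's [INST] ... [/INST] prompt tags."""
    prompt = f"[INST] Answer using only this context:\n{context}\n\nQuestion: {question} [/INST]"
    return {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}


def make_handler(invoke: Callable[[dict], list]) -> Callable:
    """Build an API Gateway proxy-style handler around a model-invocation function."""
    def lambda_handler(event, context):
        body = json.loads(event.get("body") or "{}")
        question = body.get("question", "")
        if not question:
            return {"statusCode": 400, "body": json.dumps({"error": "question is required"})}
        result = invoke(build_payload(question, body.get("context", "")))
        return {"statusCode": 200, "body": json.dumps({"answer": result[0]["generated_text"]})}
    return lambda_handler


def sagemaker_invoke(payload: dict) -> list:
    """Call the SageMaker endpoint; boto3 ships with the Lambda Python runtime."""
    import boto3  # imported lazily so the module loads without boto3 installed
    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    return json.loads(response["Body"].read())


# Entry point configured in Lambda.
lambda_handler = make_handler(sagemaker_invoke)
```

Keeping the handler thin and stateless lets Lambda scale it per request, while the GPU-backed SageMaker endpoint carries the heavy inference load.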

Key Challenges and Learnings

Deploying a machine learning model on AWS presented challenges in selecting the right services (e.g., SageMaker, Lambda, EC2) for an optimal inference architecture while balancing scalability, integration needs, and cost efficiency. Managing compute constraints and avoiding escalating costs required careful resource optimization. Key learnings included conducting thorough patent IP searches to assess novelty and avoid infringement, mastering generative AI techniques for creative applications, and successfully hosting a scalable web application with a custom domain using AWS services such as Route 53 and EKS.

Acknowledgements

We would like to express our sincere gratitude to our instructors, Puya Vahabi and Kira Wetzel, for their insightful guidance and encouragement throughout this journey. We are also deeply thankful to Neal Cohen (Patent Attorney, UCSF, CA) and Jay Chesavage (Freelance Patent Search Professional, CA), the subject matter experts, for their expertise and valuable support in helping us understand the patent search process and rejections.

References

- Chen, W., Hu, H., Chen, X., Verga, P., & Cohen, W. W. (2022). MuRAG: Multimodal retrieval-augmented generator for open question answering over images and text.

- Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., et al. (2020). Retrieval-augmented generation for knowledge-intensive NLP tasks. Advances in Neural Information Processing Systems 33 (NeurIPS 2020).

- Gil, D. Advanced retriever techniques to improve your RAGs.

- Llama Team, AI @ Meta. (2024). The Llama 3 herd of models.

- Jiang, A. Q., Sablayrolles, A., Mensch, A., et al. (2023). Mistral 7B. arXiv preprint arXiv:2310.06825.

- Adithya S K. (2023, October 9). Deploy Mistral/Llama 7b on AWS in 10 mins. Medium.